Prerequisites

Install Go

$ echo "export GOPATH=/home/parallels/go" >> ~/.zshrc

$ echo "export GOROOT=/usr/lib/go-1.14" >> ~/.zshrc

Install Java

Install ZooKeeper

Install autoconf

$ sudo apt-get install autoconf

Build from source

$ sudo mkdir -p $GOPATH/src/github.com/CodisLabs

$ cd $_ && git clone https://github.com/CodisLabs/codis.git -b release3.2

$ echo 'export PATH=$GOPATH/src/github.com/CodisLabs/codis/bin:$PATH' >> ~/.zshrc

$ cd codis && sudo make

Binary

$ curl -OL https://github.com/CodisLabs/codis/releases/download/3.2.2/codis3.2.2-go1.8.5-linux.zip

$ unzip codis3.2.2-go1.8.5-linux.zip

$ cd codis3.2.2-go1.8.5-linux

Startup

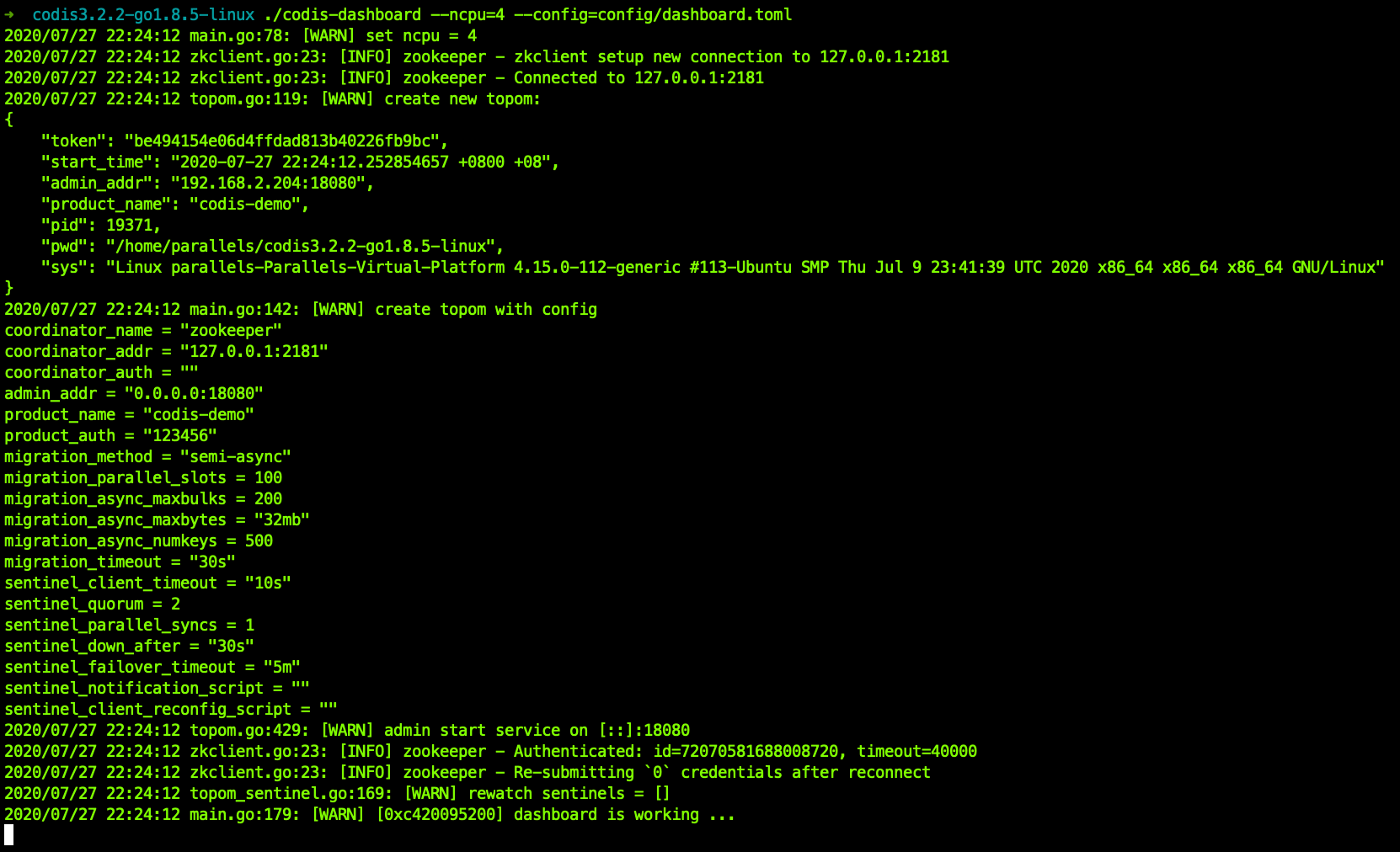

Start codis-dashboard

$ # vim config/dashboard.toml

coordinator_name = "zookeeper"

# Set this to your ZooKeeper address

coordinator_addr = "127.0.0.1:2181"

product_name = "codis-demo"

Start

$ nohup ./codis-dashboard --ncpu=4 --config=config/dashboard.toml --log=logs/dashboard.log --log-level=WARN &

# Alternatively, if you have several shell tabs open, run it in the foreground to watch the log in real time

$ ./codis-dashboard --ncpu=4 --config=config/dashboard.toml

By default it listens on port 18080.

$ ./codis-dashboard -h

Usage:

codis-dashboard [--ncpu=N] [--config=CONF] [--log=FILE] [--log-level=LEVEL] [--host-admin=ADDR] [--pidfile=FILE] [--zookeeper=ADDR|--etcd=ADDR|--filesystem=ROOT] [--product_name=NAME] [--product_auth=AUTH] [--remove-lock]

codis-dashboard --default-config

codis-dashboard --version

Options:

--ncpu=N set runtime.GOMAXPROCS to N, default is runtime.NumCPU().

-c CONF, --config=CONF run with the specific configuration.

-l FILE, --log=FILE set path/name of daliy rotated log file.

--log-level=LEVEL set the log-level, should be INFO,WARN,DEBUG or ERROR, default is INFO.

Default configuration file

$ ./codis-dashboard --default-config | tee dashboard.toml

##################################################

# #

# Codis-Dashboard #

# #

##################################################

# Set Coordinator, only accept "zookeeper" & "etcd" & "filesystem".

# for zookeeper/etcd, coorinator_auth accept "user:password"

# Quick Start

coordinator_name = "filesystem"

coordinator_addr = "/tmp/codis"

#coordinator_name = "zookeeper"

#coordinator_addr = "127.0.0.1:2181"

#coordinator_auth = ""

# Set Codis Product Name/Auth.

product_name = "codis-demo"

product_auth = ""

# Set bind address for admin(rpc), tcp only.

admin_addr = "0.0.0.0:18080"

# Set arguments for data migration (only accept 'sync' & 'semi-async').

migration_method = "semi-async"

migration_parallel_slots = 100

migration_async_maxbulks = 200

migration_async_maxbytes = "32mb"

migration_async_numkeys = 500

migration_timeout = "30s"

# Set configs for redis sentinel.

sentinel_client_timeout = "10s"

sentinel_quorum = 2

sentinel_parallel_syncs = 1

sentinel_down_after = "30s"

sentinel_failover_timeout = "5m"

sentinel_notification_script = ""

sentinel_client_reconfig_script = ""

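| Parameter | Description |
|---|---|
| coordinator_name | External storage type; accepts zookeeper/etcd (the default config above also lists filesystem) |
| coordinator_addr | External storage address |
| product_name | Cluster name; must match the regex \w[\w\.\-]* |
| product_auth | Cluster password; empty by default |
| admin_addr | RESTful API listen address (host:port) |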
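The product_name rule in the table can be checked before a name is written into dashboard.toml. This is a minimal sketch, not part of Codis: the helper name `is_valid_product_name` is made up for illustration, and `re.ASCII` is an assumption meant to mirror Go's ASCII-only `\w`.

```python
import re

# Pattern quoted from the parameter table: \w[\w\.\-]*
# re.ASCII is an assumption: it restricts \w to [A-Za-z0-9_], as in Go's regexp package.
PRODUCT_NAME_RE = re.compile(r"\w[\w.\-]*", re.ASCII)


def is_valid_product_name(name: str) -> bool:
    """Return True if the whole string matches the product_name pattern."""
    return PRODUCT_NAME_RE.fullmatch(name) is not None


print(is_valid_product_name("codis-demo"))  # True: the name used in this walkthrough
print(is_valid_product_name("-codis"))      # False: the first character must match \w
```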

Start codis-proxy

codis-proxy configuration

# vim config/proxy.toml

product_name = "codis-demo"

jodis_name = "zookeeper"

jodis_addr = "127.0.0.1:2181"

$ nohup ./codis-proxy --ncpu=4 --config=config/proxy.toml --log=logs/proxy.log --log-level=WARN &

# Alternatively, if you have several shell tabs open, run it in the foreground to watch the log in real time

$ ./codis-proxy --ncpu=4 --config=config/proxy.toml

2020/07/27 22:36:28 main.go:104: [WARN] set ncpu = 4, max-ncpu = 8

2020/07/27 22:36:28 zkclient.go:23: [INFO] zookeeper - zkclient setup new connection to 127.0.0.1:2181

2020/07/27 22:36:28 proxy.go:91: [WARN] [0xc4200a6e70] create new proxy:

{

"token": "620204800e7d3a781457f23ca974dfcb",

"start_time": "2020-07-27 22:36:28.056420238 +0800 +08",

"admin_addr": "192.168.2.204:11080",

"proto_type": "tcp4",

"proxy_addr": "192.168.2.204:19000",

"jodis_path": "/jodis/codis-demo/proxy-620204800e7d3a781457f23ca974dfcb",

"product_name": "codis-demo",

"pid": 21563,

"pwd": "/home/parallels/codis3.2.2-go1.8.5-linux",

"sys": "Linux parallels-Parallels-Virtual-Platform 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux",

"hostname": "parallels-Parallels-Virtual-Platform",

"datacenter": ""

}

2020/07/27 22:36:28 zkclient.go:23: [INFO] zookeeper - Connected to 127.0.0.1:2181

2020/07/27 22:36:28 proxy.go:378: [WARN] [0xc4200a6e70] admin start service on [::]:11080

2020/07/27 22:36:28 proxy.go:402: [WARN] [0xc4200a6e70] proxy start service on 0.0.0.0:19000

2020/07/27 22:36:28 main.go:193: [WARN] create proxy with config

...

2020/07/27 22:36:28 main.go:229: [WARN] [0xc4200a6e70] proxy waiting online ...

2020/07/27 22:36:28 zkclient.go:23: [INFO] zookeeper - Authenticated: id=72070581688008724, timeout=20000

2020/07/27 22:36:28 zkclient.go:23: [INFO] zookeeper - Re-submitting `0` credentials after reconnect

2020/07/27 22:36:29 main.go:229: [WARN] [0xc4200a6e70] proxy waiting online ...

2020/07/27 22:36:30 main.go:229: [WARN] [0xc4200a6e70] proxy waiting online ...

2020/07/27 22:36:31 main.go:229: [WARN] [0xc4200a6e70] proxy waiting online ...

2020/07/27 22:36:32 main.go:229: [WARN] [0xc4200a6e70] proxy waiting online ...

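The proxy stays in the waiting online state because it has not yet been associated with the dashboard. That association has to be made explicitly.

Port usage

Each codis-proxy uses two ports: one that redis-cli connects to directly (proxy_addr, 19000 by default) and one for managing the proxy (admin_addr, 11080 by default).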

$ redis-cli -h 192.168.2.204 -p 19000

192.168.2.204:19000>

$ lsof -i -P | grep -i "listen" | grep "codis-proxy"

codis-pro 20014 parallels 3u IPv4 2246306 0t0 TCP *:19000 (LISTEN)

codis-pro 20014 parallels 5u IPv6 2246307 0t0 TCP *:11080 (LISTEN)
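The same check that lsof performs above can be scripted. This is a small sketch using only the standard library; `is_listening` and `check_proxy_ports` are hypothetical helper names, and the default ports are simply the ones quoted in this section. A successful TCP connect only shows that the port is open. It does not show that the proxy is online.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


def check_proxy_ports(host: str, proxy_port: int = 19000, admin_port: int = 11080) -> dict:
    """Report whether the proxy_addr and admin_addr ports accept TCP connections."""
    return {
        "proxy_addr": is_listening(host, proxy_port),
        "admin_addr": is_listening(host, admin_port),
    }
```

For the host used in this walkthrough, the call would be `check_proxy_ports("192.168.2.204")`.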

Help

$ ./codis-proxy -h

Usage:

codis-proxy [--ncpu=N [--max-ncpu=MAX]] [--config=CONF] [--log=FILE] [--log-level=LEVEL] [--host-admin=ADDR] [--host-proxy=ADDR] [--dashboard=ADDR|--zookeeper=ADDR [--zookeeper-auth=USR:PWD]|--etcd=ADDR [--etcd-auth=USR:PWD]|--filesystem=ROOT|--fillslots=FILE] [--ulimit=NLIMIT] [--pidfile=FILE] [--product_name=NAME] [--product_auth=AUTH] [--session_auth=AUTH]

codis-proxy --default-config

codis-proxy --version

Options:

--ncpu=N set runtime.GOMAXPROCS to N, default is runtime.NumCPU().

-c CONF, --config=CONF run with the specific configuration.

-l FILE, --log=FILE set path/name of daliy rotated log file.

--log-level=LEVEL set the log-level, should be INFO,WARN,DEBUG or ERROR, default is INFO.

--ulimit=NLIMIT run 'ulimit -n' to check the maximum number of open file descriptors.

Default configuration file proxy.toml

$ ./codis-proxy --default-config | tee proxy.toml

##################################################

# #

# Codis-Proxy #

# #

##################################################

# Set Codis Product Name/Auth.

product_name = "codis-demo"

product_auth = ""

# Set auth for client session

# 1. product_auth is used for auth validation among codis-dashboard,

# codis-proxy and codis-server.

# 2. session_auth is different from product_auth, it requires clients

# to issue AUTH <PASSWORD> before processing any other commands.

session_auth = ""

# Set bind address for admin(rpc), tcp only.

admin_addr = "0.0.0.0:11080"

# Set bind address for proxy, proto_type can be "tcp", "tcp4", "tcp6", "unix" or "unixpacket".

proto_type = "tcp4"

proxy_addr = "0.0.0.0:19000"

# Set jodis address & session timeout

# 1. jodis_name is short for jodis_coordinator_name, only accept "zookeeper" & "etcd".

# 2. jodis_addr is short for jodis_coordinator_addr

# 3. jodis_auth is short for jodis_coordinator_auth, for zookeeper/etcd, "user:password" is accepted.

# 4. proxy will be registered as node:

# if jodis_compatible = true (not suggested):

# /zk/codis/db_{PRODUCT_NAME}/proxy-{HASHID} (compatible with Codis2.0)

# or else

# /jodis/{PRODUCT_NAME}/proxy-{HASHID}

jodis_name = ""

jodis_addr = ""

jodis_auth = ""

jodis_timeout = "20s"

jodis_compatible = false

# Set datacenter of proxy.

proxy_datacenter = ""

# Set max number of alive sessions.

proxy_max_clients = 1000

# Set max offheap memory size. (0 to disable)

proxy_max_offheap_size = "1024mb"

# Set heap placeholder to reduce GC frequency.

proxy_heap_placeholder = "256mb"

# Proxy will ping backend redis (and clear 'MASTERDOWN' state) in a predefined interval. (0 to disable)

backend_ping_period = "5s"

# Set backend recv buffer size & timeout.

backend_recv_bufsize = "128kb"

backend_recv_timeout = "30s"

# Set backend send buffer & timeout.

backend_send_bufsize = "128kb"

backend_send_timeout = "30s"

# Set backend pipeline buffer size.

backend_max_pipeline = 20480

# Set backend never read replica groups, default is false

backend_primary_only = false

# Set backend parallel connections per server

backend_primary_parallel = 1

backend_replica_parallel = 1

# Set backend tcp keepalive period. (0 to disable)

backend_keepalive_period = "75s"

# Set number of databases of backend.

backend_number_databases = 16

# If there is no request from client for a long time, the connection will be closed. (0 to disable)

# Set session recv buffer size & timeout.

session_recv_bufsize = "128kb"

session_recv_timeout = "30m"

# Set session send buffer size & timeout.

session_send_bufsize = "64kb"

session_send_timeout = "30s"

# Make sure this is higher than the max number of requests for each pipeline request, or your client may be blocked.

# Set session pipeline buffer size.

session_max_pipeline = 10000

# Set session tcp keepalive period. (0 to disable)

session_keepalive_period = "75s"

# Set session to be sensitive to failures. Default is false, instead of closing socket, proxy will send an error response to client.

session_break_on_failure = false

# Set metrics server (such as http://localhost:28000), proxy will report json formatted metrics to specified server in a predefined period.

metrics_report_server = ""

metrics_report_period = "1s"

# Set influxdb server (such as http://localhost:8086), proxy will report metrics to influxdb.

metrics_report_influxdb_server = ""

metrics_report_influxdb_period = "1s"

metrics_report_influxdb_username = ""

metrics_report_influxdb_password = ""

metrics_report_influxdb_database = ""

# Set statsd server (such as localhost:8125), proxy will report metrics to statsd.

metrics_report_statsd_server = ""

metrics_report_statsd_period = "1s"

metrics_report_statsd_prefix = ""

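| Parameter | Description |
|---|---|
| product_name | Cluster name; see the dashboard parameter table |
| product_auth | Cluster password; empty by default |
| admin_addr | RESTful API listen address (host:port) |
| proto_type | Redis listener type; accepts tcp/tcp4/tcp6/unix/unixpacket |
| proxy_addr | Redis listener address or path |
| jodis_addr | ZooKeeper address used for Jodis registration |
| jodis_timeout | Session timeout for Jodis registration, in seconds |
| jodis_compatible | Whether to register under the Codis 2.0-compatible ZooKeeper path (see the comments in proxy.toml above) |
| backend_ping_period | Health-check interval for codis-server, in seconds; 0 disables it |
| session_max_timeout | Maximum read timeout for client connections, in seconds; 0 disables it |
| session_max_bufsize | Read/write buffer size for client connections, in bytes |
| session_max_pipeline | Maximum pipeline size for client connections |
| session_keepalive_period | TCP keepalive period for client connections; TCP only, 0 disables it |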

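Adding a proxy

After codis-proxy starts it is in the waiting state: it listens on proxy_addr but does not accept connections. It goes online only after it has been added to the cluster and has finished synchronizing cluster state. There are two ways to add it:

- Through codis-fe: click the Add Proxy button and add the proxy's admin_addr to the cluster.

- Through the codis-admin command-line tool:

$ ./bin/codis-admin --dashboard=127.0.0.1:18080 --create-proxy -x 127.0.0.1:11080

Here 127.0.0.1:18080 and 127.0.0.1:11080 are the admin_addr of the dashboard and of the proxy, respectively.

In this demo, we add the proxy after Codis FE is running.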

Start codis-server

codis-server configuration

# vim config/redis.conf

bind 0.0.0.0

port 6379

daemonize yes

pidfile /var/run/redis-6379.pid

dir /data/redis-data/redis-6379/

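$ sudo mkdir -p /data/redis-data/redis-6379

$ ./codis-server ./config/redis.conf --logfile /data/redis-data/redis-6379/server.log --loglevel warning

# Or specify only the port

$ ./codis-server --port 6380

- Start ./bin/codis-server the same way you would start an ordinary Redis server.

- Once it is running, add it to the cluster through the codis-fe UI or the codis-admin command-line tool.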

Help

$ ./codis-server -h

Usage: ./redis-server [/path/to/redis.conf] [options]

./redis-server - (read config from stdin)

./redis-server -v or --version

./redis-server -h or --help

./redis-server --test-memory <megabytes>

Examples:

./redis-server (run the server with default conf)

./redis-server /etc/redis/6379.conf

./redis-server --port 7777

./redis-server --port 7777 --slaveof 127.0.0.1 8888

./redis-server /etc/myredis.conf --loglevel verbose

Sentinel mode:

./redis-server /etc/sentinel.conf --sentinel

Start codis-fe

Launching codis-fe

$ nohup ./codis-fe --ncpu=4 --log=logs/fe.log --log-level=WARN --zookeeper=127.0.0.1:2181 --listen=0.0.0.0:8081 &

Startup options

$ ./bin/codis-fe -h

Usage:

codis-fe [--ncpu=N] [--log=FILE] [--log-level=LEVEL] [--assets-dir=PATH] (--dashboard-list=FILE|--zookeeper=ADDR|--etcd=ADDR|--filesystem=ROOT) --listen=ADDR

codis-fe --version

Options:

--ncpu=N maximum number of CPUs to use

-d LIST, --dashboard-list=LIST configuration file; reloaded automatically

-l FILE, --log=FILE log output file

--log-level=LEVEL log level: INFO, WARN, DEBUG or ERROR; default is INFO, WARN is recommended

--listen=ADDR HTTP listen address

The configuration file codis.json can be edited by hand or pulled from external storage with codis-admin, for example:

$ ./bin/codis-admin --dashboard-list --zookeeper=127.0.0.1:2181 | tee codis.json

[

{

"name": "codis-demo",

"dashboard": "127.0.0.1:18080"

},

{

"name": "codis-demo2",

"dashboard": "127.0.0.1:28080"

}

]
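If the dashboard list is maintained by hand, it can be generated instead of typed. This is a minimal sketch that reproduces the JSON shape shown above (a list of objects with `name` and `dashboard` keys); the function name `dashboard_list` is hypothetical.

```python
import json


def dashboard_list(entries):
    """Render (product_name, dashboard_addr) pairs in the codis.json layout shown above."""
    return json.dumps(
        [{"name": name, "dashboard": addr} for name, addr in entries],
        indent=4,
    )


# The two products from the example output above.
print(dashboard_list([
    ("codis-demo", "127.0.0.1:18080"),
    ("codis-demo2", "127.0.0.1:28080"),
]))
```

Writing the returned string to codis.json and passing that file with --dashboard-list should be equivalent to the hand-edited file, provided the names and addresses match the running dashboards.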

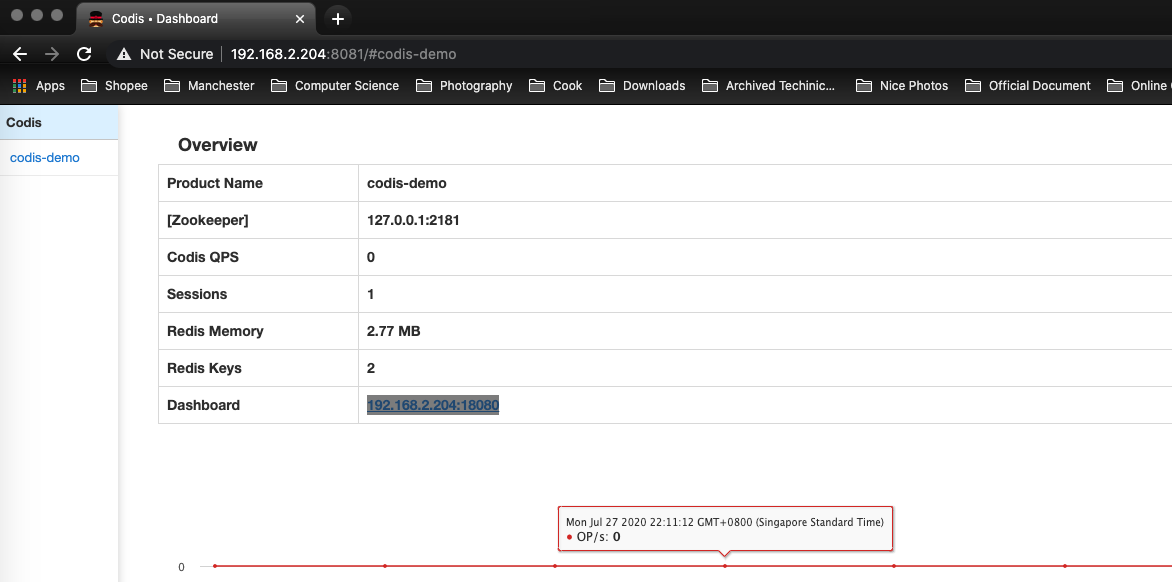

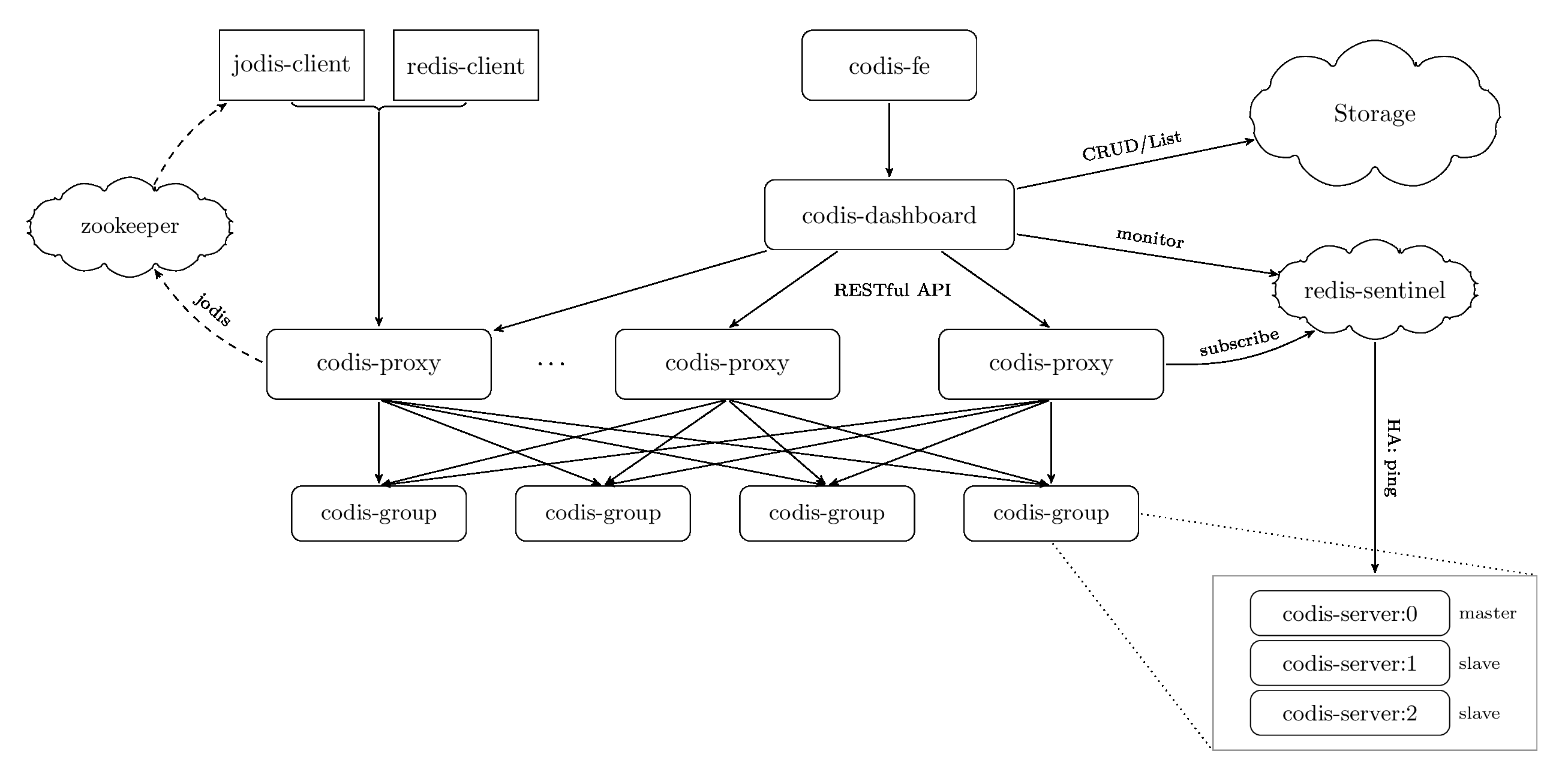

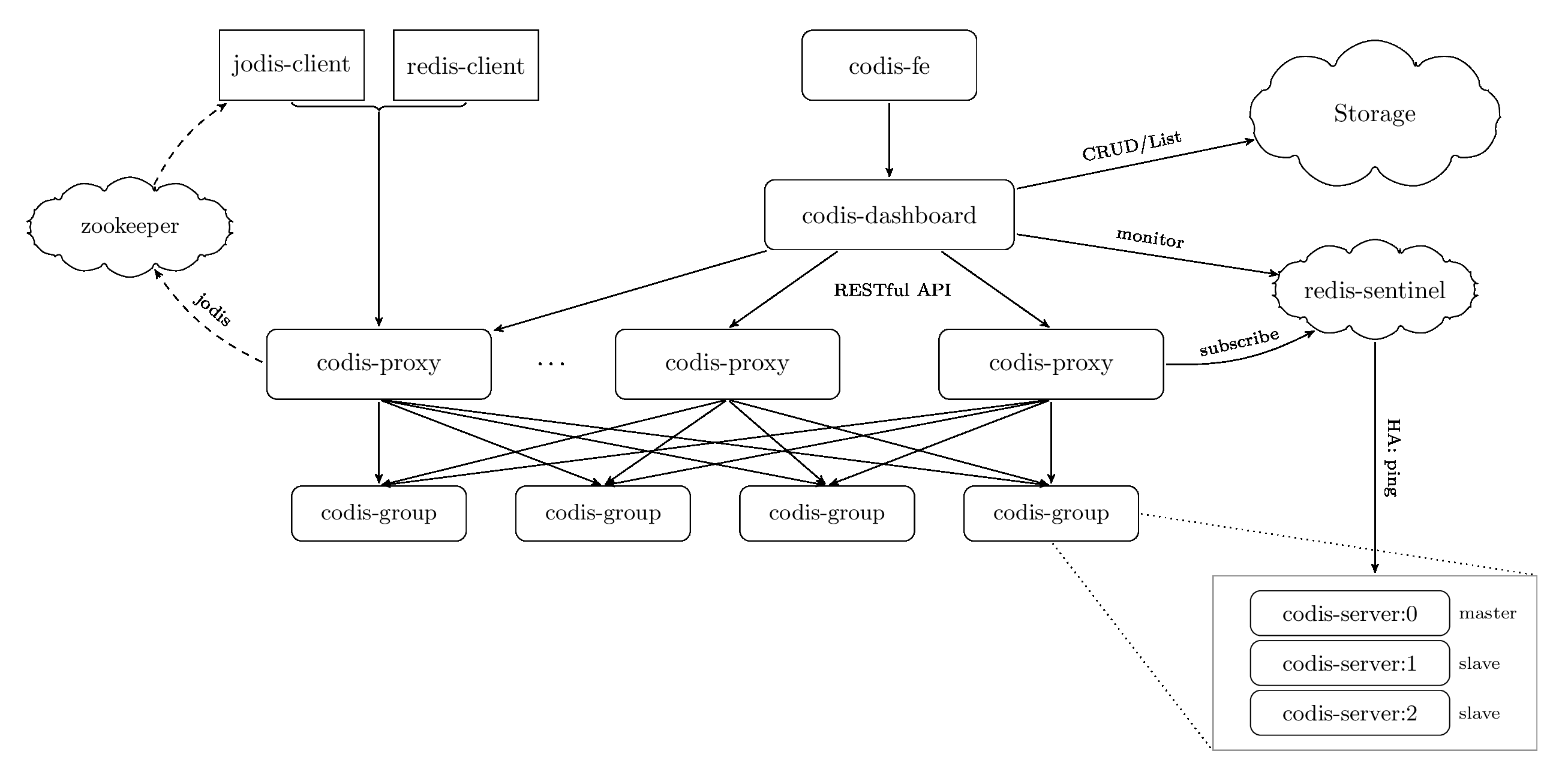

Open http://192.168.2.204:8081/ and select codis-demo, which is our product name (a single Codis FE can manage multiple Codis Dashboards).

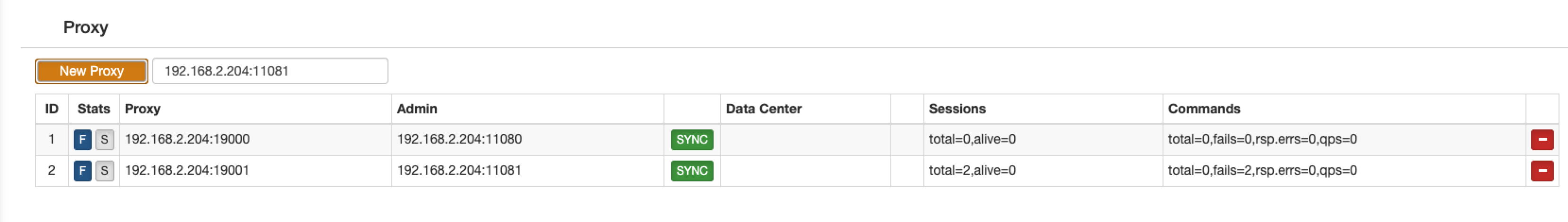

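Add a proxy

Open the cluster management page in a web browser (codis-fe, http://192.168.2.204:8081/). The Proxy section does not list any codis-proxy yet.

As noted earlier, a freshly started codis-proxy stays in the waiting state: it listens on proxy_addr but does not accept connections, and it goes online only after it has been added to the cluster and has synchronized cluster state. Add it either with the Add Proxy button in codis-fe (enter the proxy's admin_addr) or with codis-admin:

$ ./bin/codis-admin --dashboard=127.0.0.1:18080 --create-proxy -x 127.0.0.1:11080

Here 127.0.0.1:18080 and 127.0.0.1:11080 are the admin_addr of the dashboard and of the proxy, respectively.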

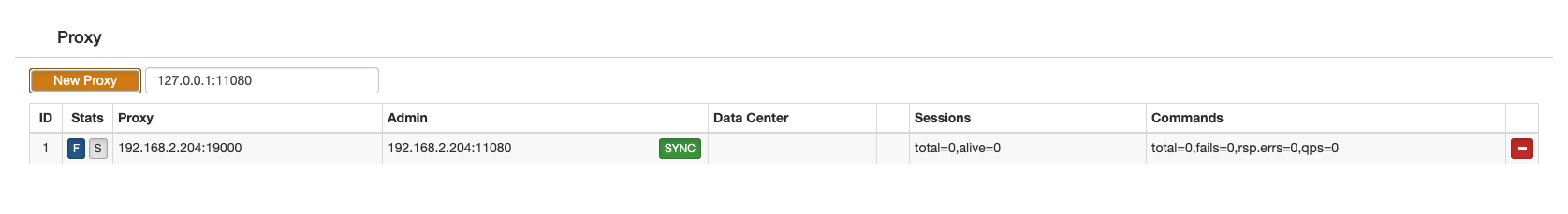

Once the proxy has been added, its status shows up on the page.

The codis-proxy log reflects the change as well:

2020/07/27 22:53:17 main.go:233: [WARN] [0xc4200a9340] proxy is working ...

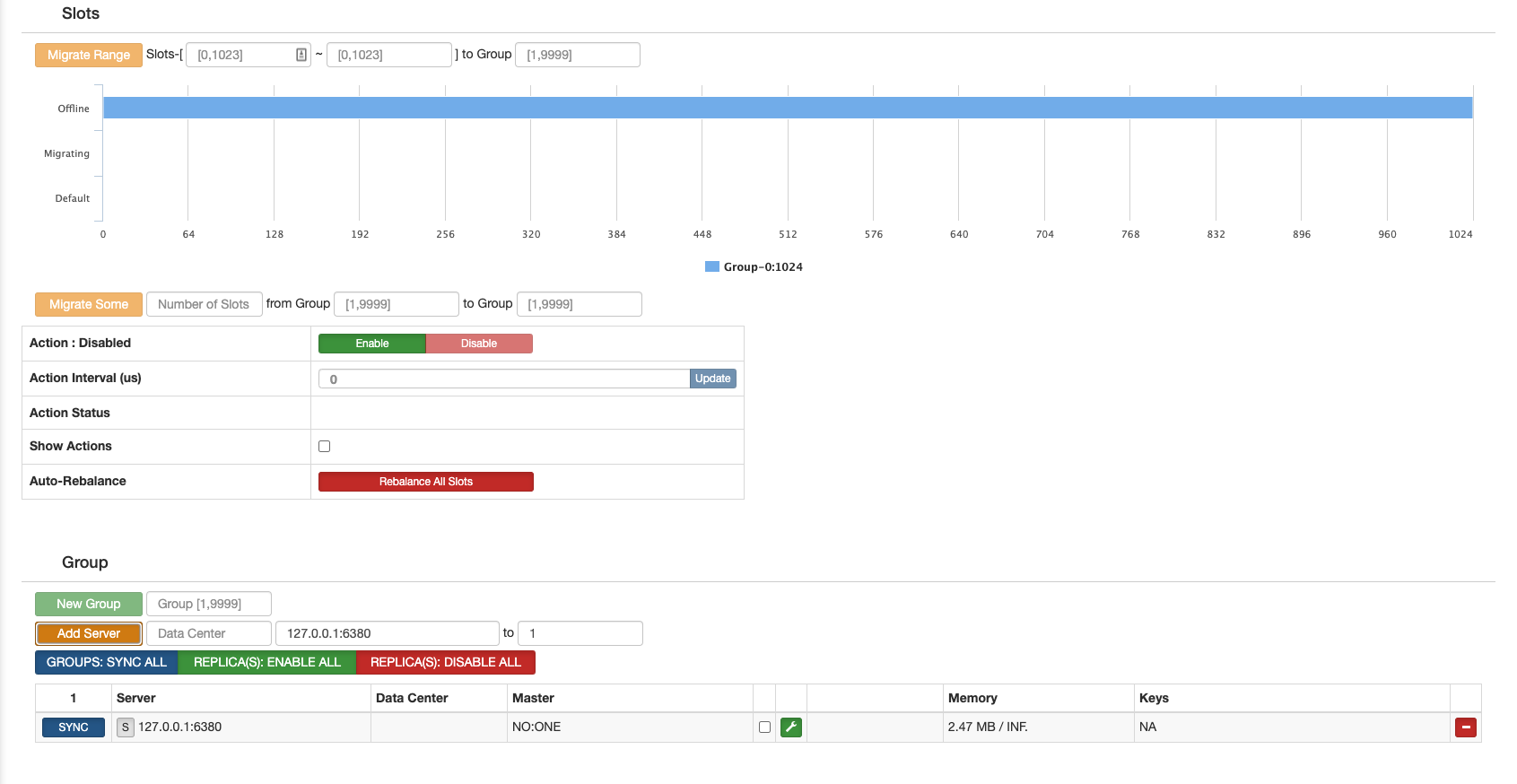

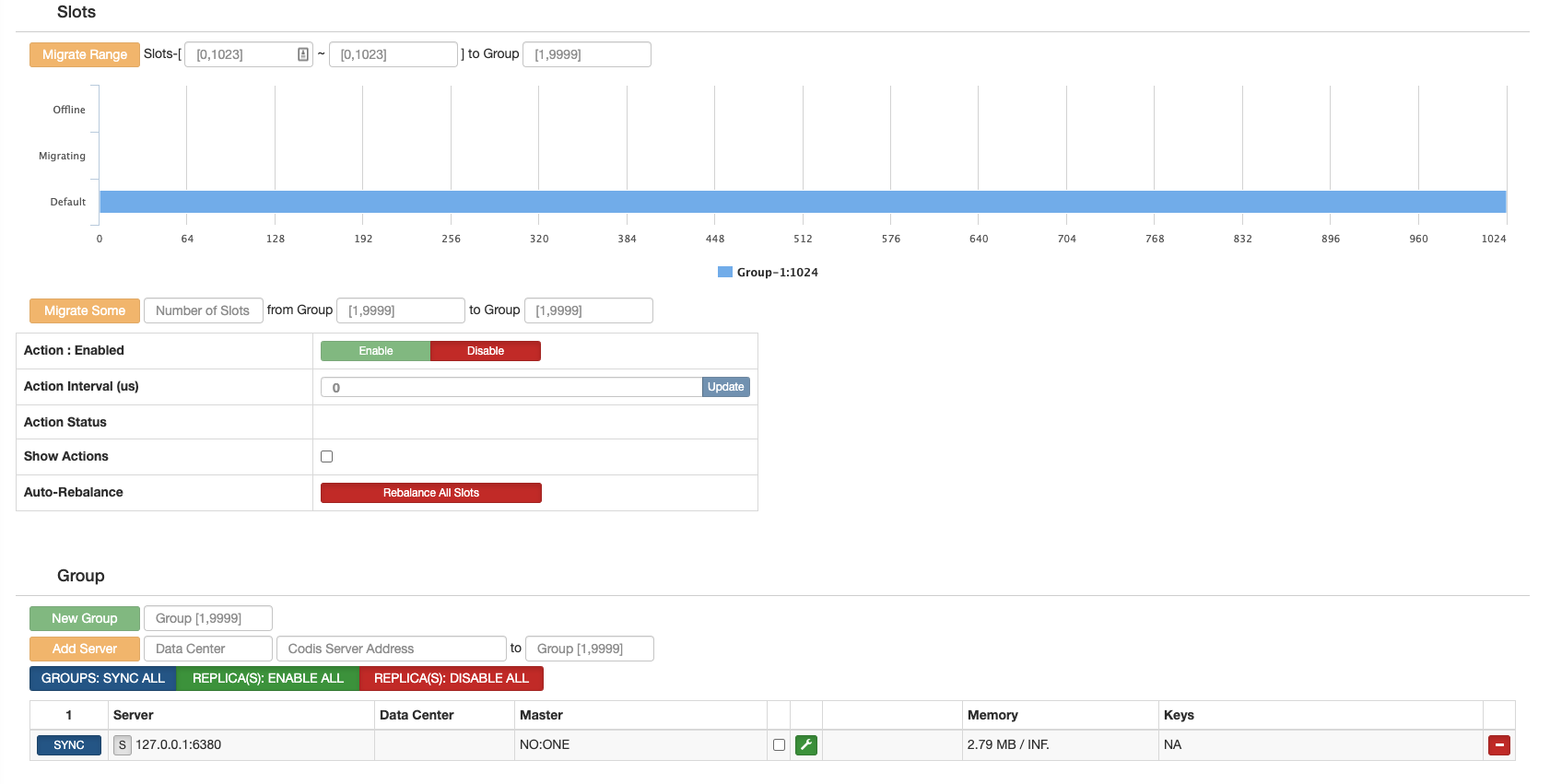

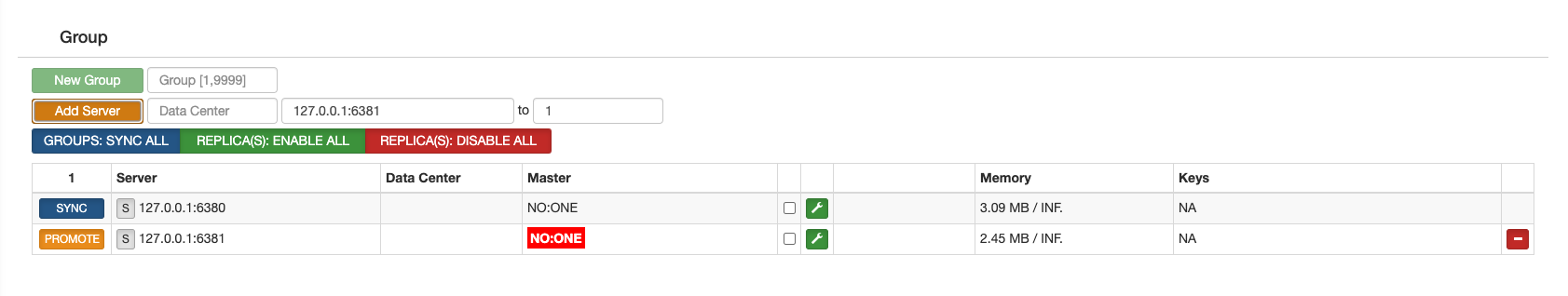

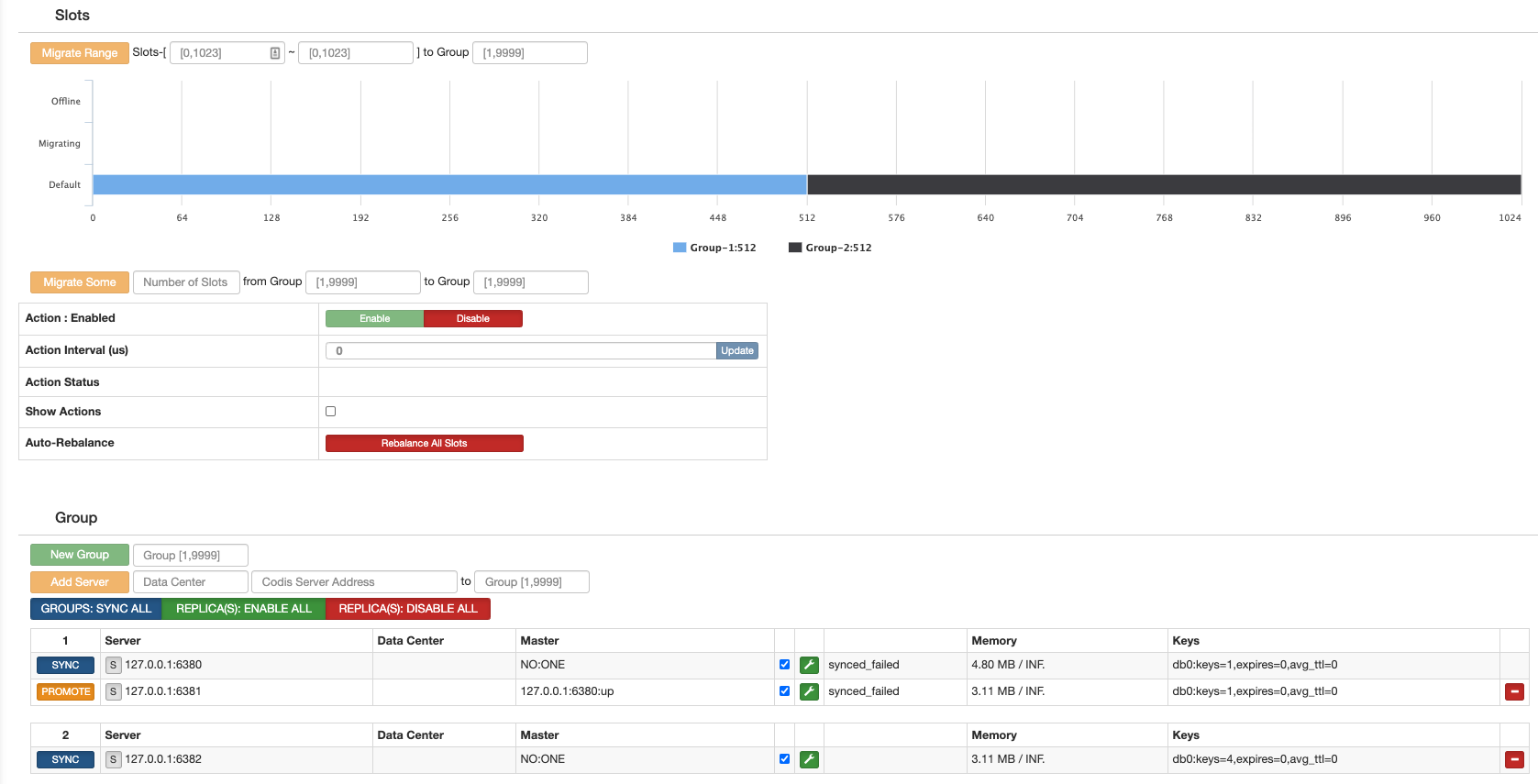

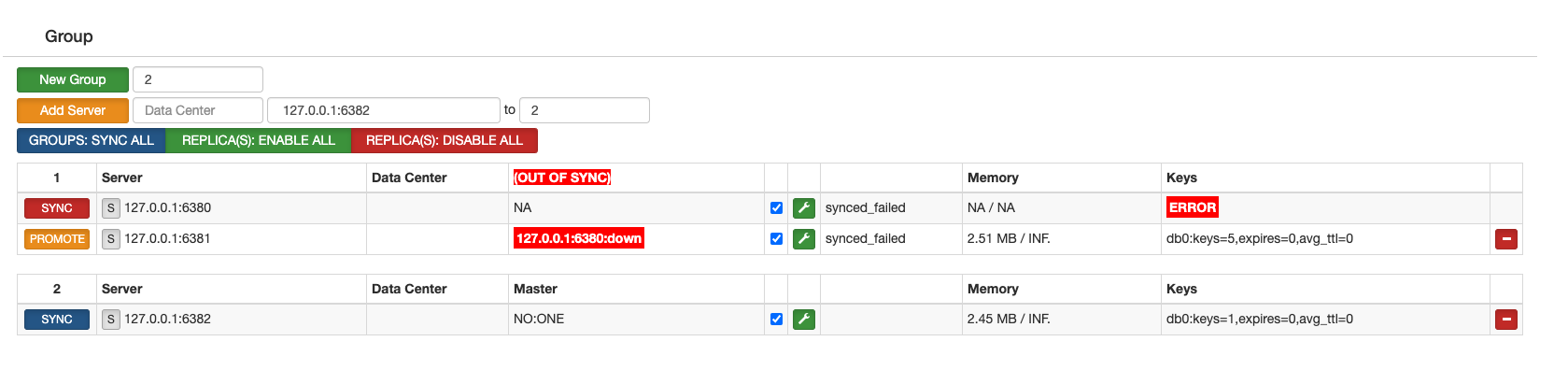

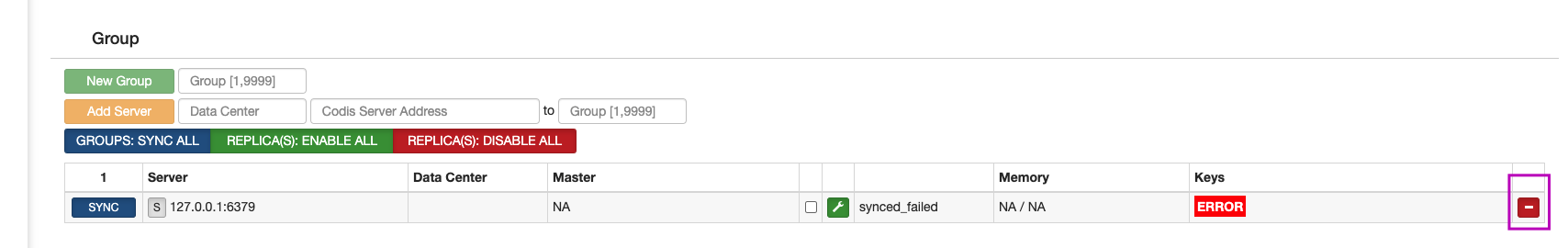

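Add a codis-group (that is, codis-server)

The proxy is now set up, but the Group section is empty because the codis-server we started has not joined the cluster yet.

Enter 1 for GROUP, enter the address of the codis-server we just started (127.0.0.1:6380) under Add Server so that it is added to the group we just created, and then click the Add Server button, as shown in the figure above.

After this, the whole cluster has a single group (codis-group), Group 1.

We can of course add more codis-server instances to the cluster and place them in different groups, which is what makes it a cluster in the real sense.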

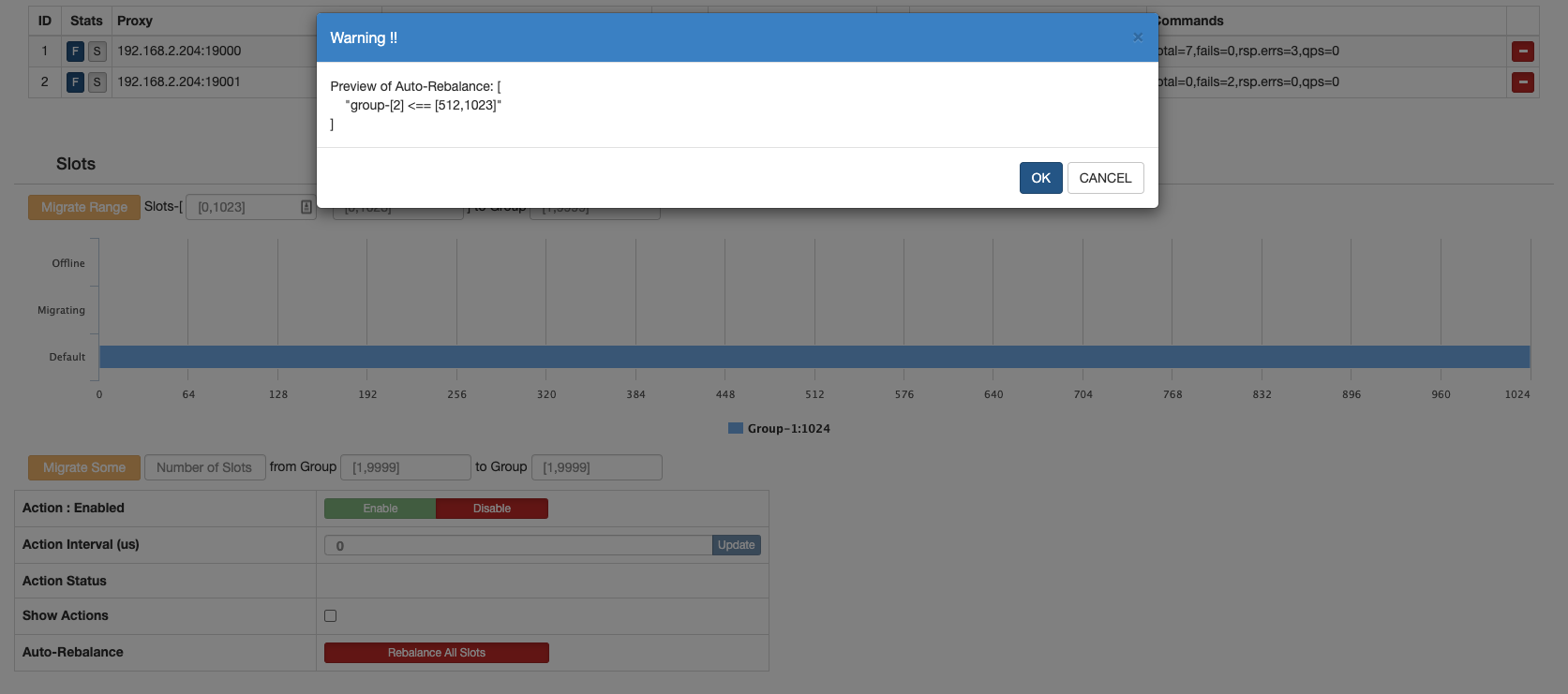

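Initialize slots through codis-fe

The slots of a new cluster are offline, so they need to be initialized (the 1024 slots are assigned to the groups). The quickest way is the Rebalance All Slots button in fe, shown in the figure below; clicking it completes the cluster setup.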

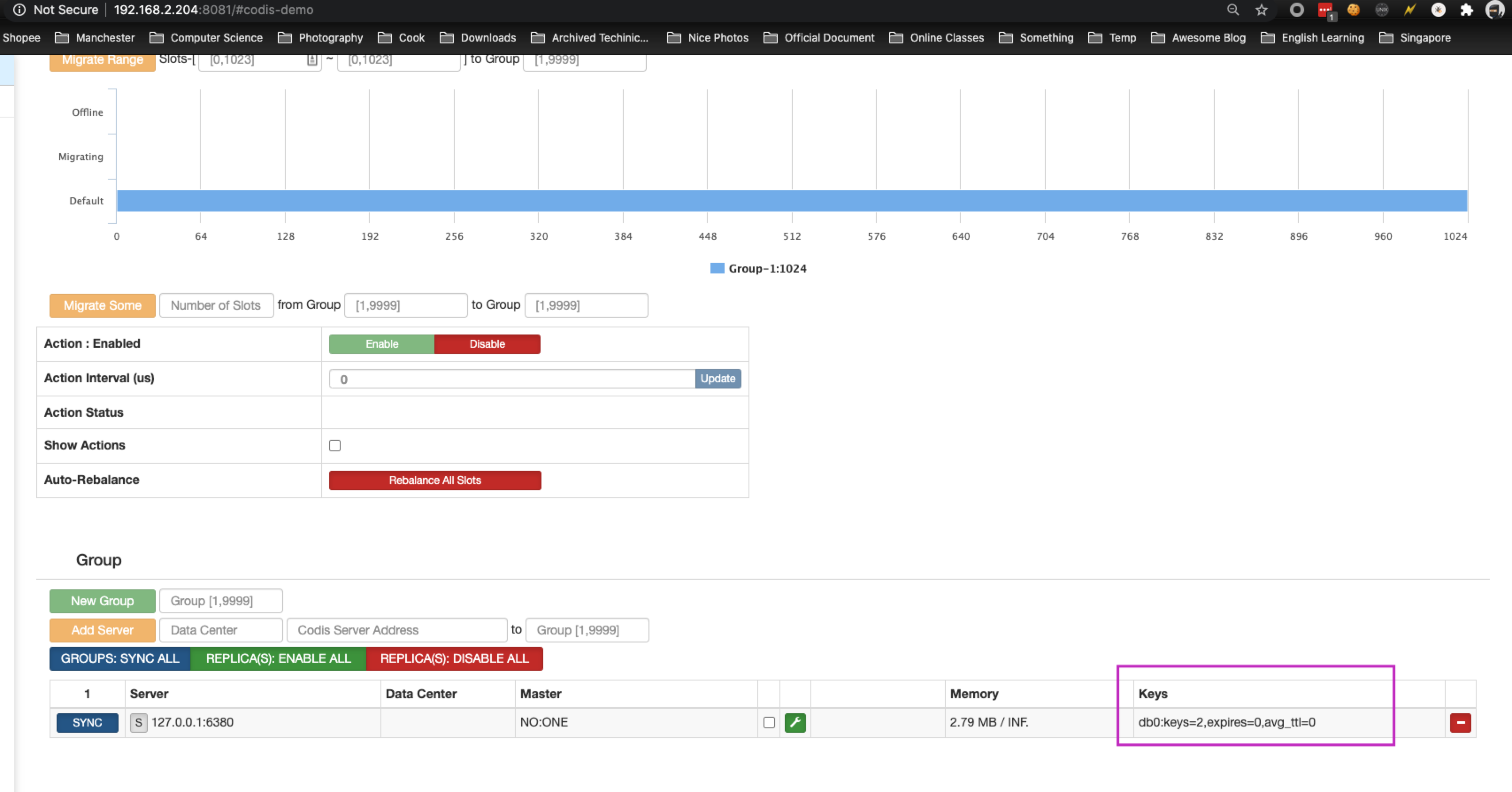
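To make the 1024 slots more concrete, here is a sketch of how a key could be mapped to a slot. It assumes, as far as I know but without confirmation from the text above, that Codis 3.x computes the slot as CRC32 (IEEE) of the key modulo 1024; treat that as an assumption and check it against the Codis source. Hash-tag handling (`{...}` inside a key) is deliberately left out.

```python
import zlib

MAX_SLOT_NUM = 1024  # number of slots mentioned in this section


def slot_for(key: bytes) -> int:
    """Map a key to a slot, assuming slot = crc32(key) % 1024."""
    # zlib.crc32 implements the IEEE CRC-32 and returns an unsigned int in Python 3.
    return zlib.crc32(key) % MAX_SLOT_NUM


for key in (b"1", b"2", b"sw"):
    print(key, "-> slot", slot_for(key))
```

Whichever group owns the resulting slot is the group whose master stores the key.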

Connect to codis-proxy

$ redis-cli -h 192.168.2.204 -p 19000

192.168.2.204:19000> set 1 a

OK

192.168.2.204:19000> set 2 b

OK

After each set, the change in keys is visible:

Scaling out

Add a codis-proxy

$ cp config/proxy.toml config/proxy2.toml

$ vim config/proxy2.toml

product_name = "codis-demo"

jodis_name = "zookeeper"

jodis_addr = "127.0.0.1:2181"

admin_addr = "0.0.0.0:11081"

proxy_addr = "0.0.0.0:19001"

$ ./codis-proxy --ncpu=4 --config=config/proxy2.toml

...

2020/07/28 20:49:37 main.go:229: [WARN] [0xc42034c2c0] proxy waiting online ...
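The edit made to proxy2.toml above (same config, different admin_addr and proxy_addr ports) can also be scripted. This is an illustrative sketch only: `with_ports` is a hypothetical helper, it rewrites the text with a regular expression instead of parsing TOML, and it assumes host:port values like the IPv4 ones shown above.

```python
import re


def with_ports(config_text: str, admin_port: int, proxy_port: int) -> str:
    """Return config_text with the ports of admin_addr and proxy_addr replaced."""

    def set_port(text: str, key: str, port: int) -> str:
        # Matches lines such as: admin_addr = "0.0.0.0:11080"
        pattern = re.compile(rf'^({key}\s*=\s*"[^":]*):\d+(")', re.MULTILINE)
        return pattern.sub(rf"\g<1>:{port}\g<2>", text)

    text = set_port(config_text, "admin_addr", admin_port)
    return set_port(text, "proxy_addr", proxy_port)


base = 'admin_addr = "0.0.0.0:11080"\nproxy_addr = "0.0.0.0:19000"\n'
print(with_ports(base, 11081, 19001))
```

Each additional proxy on the same host needs its own pair of ports, which is why both values change together.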

After starting the new codis-proxy, add it to the current cluster.

Add a codis-server as a slave in an existing codis-group

$ ./codis-server --port 6381

...

Add this codis-server to Group 1 (the group that codis-server 6380 belongs to):

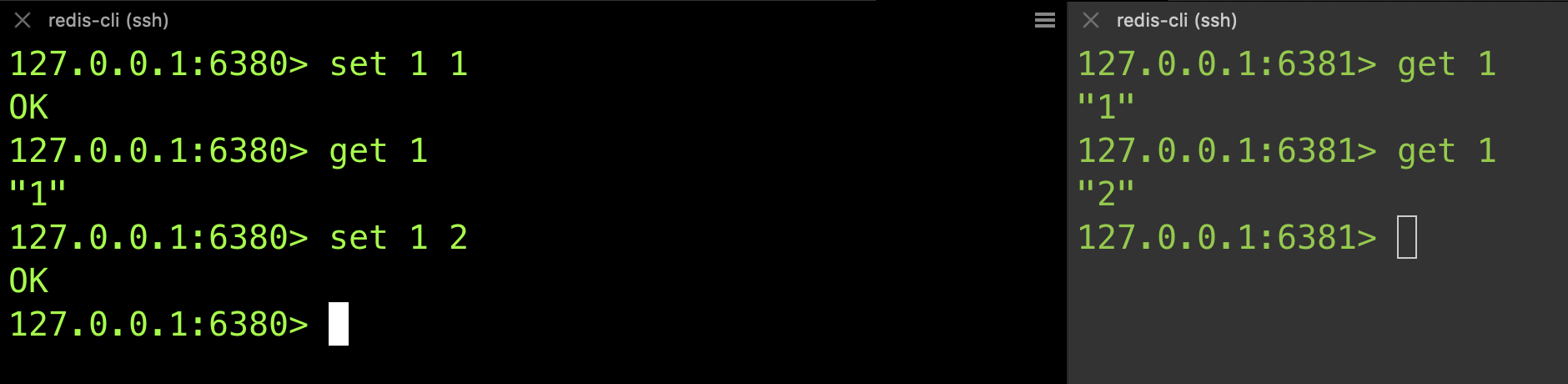

Click the small wrench icon on the 127.0.0.1:6381 row to replicate data from 127.0.0.1:6380 to 127.0.0.1:6381, which makes 127.0.0.1:6381 a slave of 127.0.0.1:6380.

After clicking, the following log appears:

17816:S 28 Jul 21:15:47.703 * SLAVE OF 127.0.0.1:6380 enabled (user request from 'id=36 addr=127.0.0.1:53494 fd=40 name= age=0 idle=0 flags=x db=0 sub=0 psub=0 multi=4 qbuf=0 qbuf-free=32768 obl=50 oll=0 omem=0 events=r cmd=exec')

17816:S 28 Jul 21:15:47.908 * Connecting to MASTER 127.0.0.1:6380

17816:S 28 Jul 21:15:47.909 * MASTER <-> SLAVE sync started

17816:S 28 Jul 21:15:47.909 * Non blocking connect for SYNC fired the event.

17816:S 28 Jul 21:15:47.909 * Master replied to PING, replication can continue...

17816:S 28 Jul 21:15:47.910 * Partial resynchronization not possible (no cached master)

17816:S 28 Jul 21:15:47.911 * Full resync from master: 0ee00b25600eef5b71196377532b70e70fdbbe5a:438

17816:S 28 Jul 21:15:48.006 * MASTER <-> SLAVE sync: receiving 87 bytes from master

17816:S 28 Jul 21:15:48.007 * MASTER <-> SLAVE sync: Flushing old data

17816:S 28 Jul 21:15:48.007 * MASTER <-> SLAVE sync: Loading DB in memory

17816:S 28 Jul 21:15:48.008 * MASTER <-> SLAVE sync: Finished with success

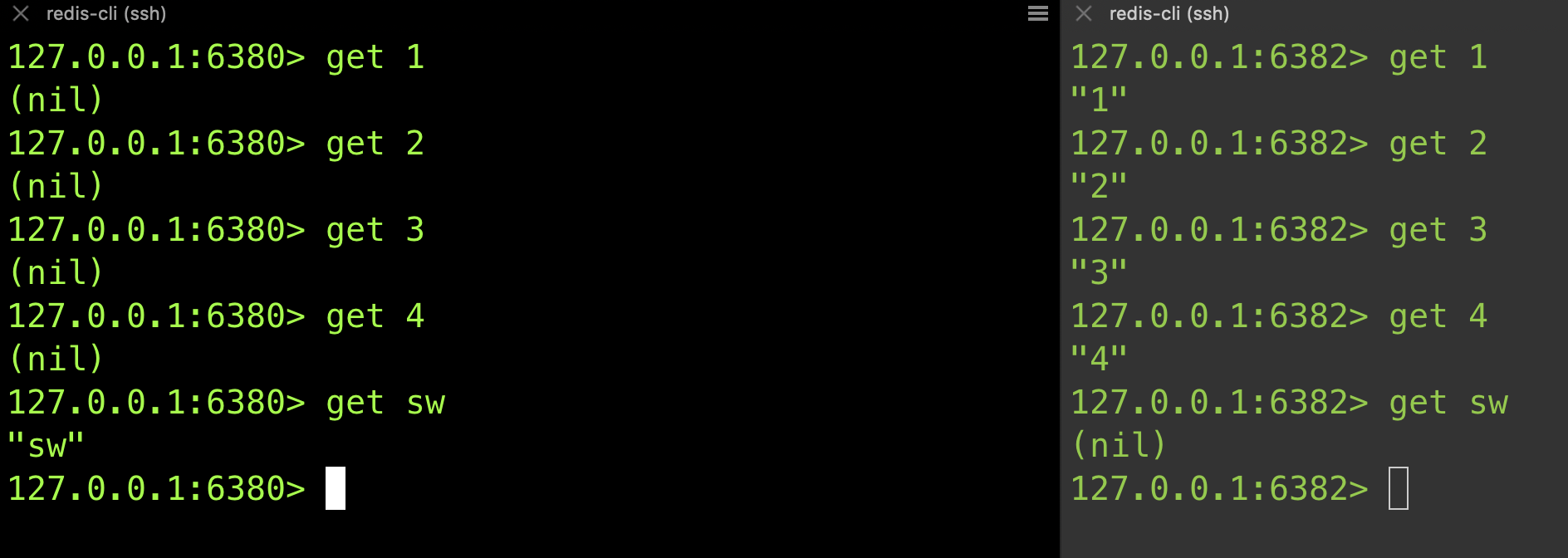

The data is synchronized, as shown below:

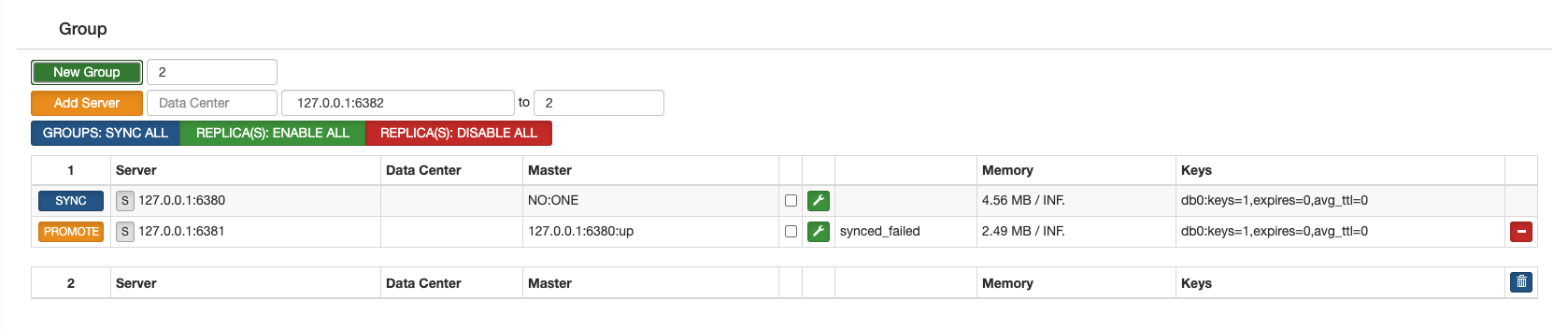

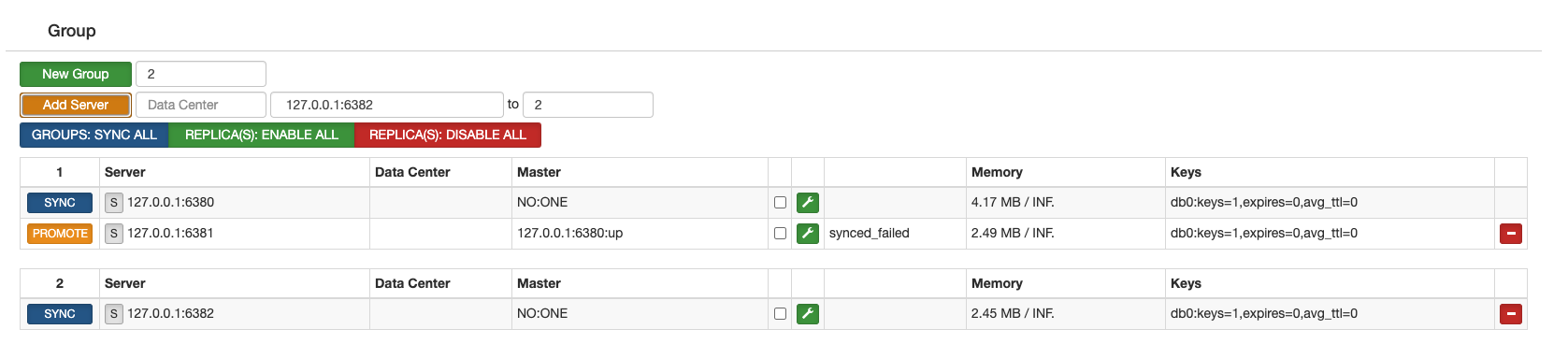

Add a codis-server to a new codis-group

Add a new group, which we call Group 2:

$ ./codis-server --port 6382

...

Add this codis-server to Group 2:

At this point all data is still stored in Group 1 only, because every slot (slots [0, 1023]) is still assigned to Group 1. Click "Rebalance All Slots" to redistribute the slots:

Insert some data as a test:

192.168.2.204:19000> set 1 1

OK

192.168.2.204:19000> set 2 2

OK

192.168.2.204:19000> set 3 3

OK

192.168.2.204:19000> set 4 4

OK

192.168.2.204:19000> set sw sw

OK

"Rebalance All Slots" lets us scale the cluster out quickly when capacity or CPU becomes the bottleneck: add codis-groups (each codis-group contains exactly one master codis-server) and redistribute the slots across all groups, a process also known as "rebalance".
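The goal of a rebalance, an even share of the 1024 slots per group, can be illustrated with a small sketch. This is not Codis's actual algorithm, which presumably also tries to limit how many slots migrate and need not produce contiguous ranges. The function name `split_slots` is hypothetical.

```python
def split_slots(group_ids, num_slots=1024):
    """Divide slots 0..num_slots-1 into contiguous, near-equal ranges, one per group."""
    if not group_ids:
        raise ValueError("at least one group is required")
    base, extra = divmod(num_slots, len(group_ids))
    plan, start = {}, 0
    for index, gid in enumerate(group_ids):
        # The first `extra` groups take one additional slot each.
        size = base + (1 if index < extra else 0)
        plan[gid] = (start, start + size - 1)  # inclusive range
        start += size
    return plan


print(split_slots([1, 2]))  # {1: (0, 511), 2: (512, 1023)}
```

With Group 1 and Group 2 as in this walkthrough, each group ends up owning 512 slots, which is why new keys start landing on both masters after the rebalance.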

Observation

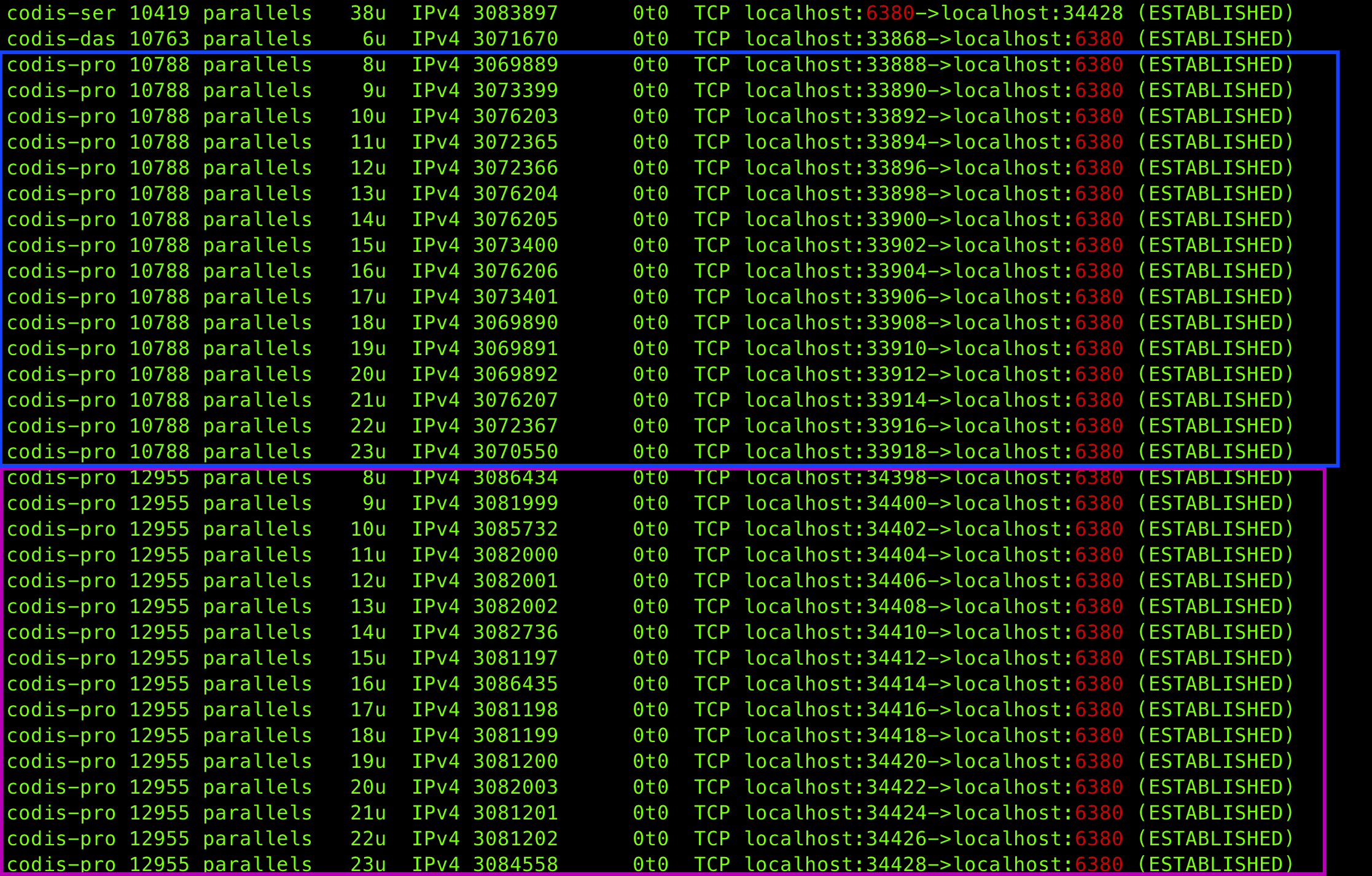

Connections from codis-proxy to codis-server

codis-proxy opens multiple connections to each codis-server:

$ lsof -i -P | grep "codis-pr"

codis-pro 21100 parallels 8u IPv4 2250487 0t0 TCP localhost:60636->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 9u IPv4 2252281 0t0 TCP localhost:60638->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 10u IPv4 2254068 0t0 TCP localhost:60640->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 11u IPv4 2252282 0t0 TCP localhost:60642->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 12u IPv4 2252283 0t0 TCP localhost:60644->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 13u IPv4 2252284 0t0 TCP localhost:60646->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 14u IPv4 2252285 0t0 TCP localhost:60648->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 15u IPv4 2254069 0t0 TCP localhost:60650->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 16u IPv4 2252286 0t0 TCP localhost:60652->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 17u IPv4 2250488 0t0 TCP localhost:60654->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 18u IPv4 2253039 0t0 TCP localhost:60656->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 19u IPv4 2253040 0t0 TCP localhost:60658->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 20u IPv4 2250489 0t0 TCP localhost:60660->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 21u IPv4 2250490 0t0 TCP localhost:60662->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 22u IPv4 2250491 0t0 TCP localhost:60664->localhost:6379 (ESTABLISHED)

codis-pro 21100 parallels 23u IPv4 2250492 0t0 TCP localhost:60666->localhost:6379 (ESTABLISHED)

As the output shows, each codis-proxy that starts opens 16 connections to every currently active codis-server.
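Counting those connections by eye is error-prone, so here is a sketch that tallies ESTABLISHED connections per backend from lsof output like the listing above. `count_backend_conns` is a hypothetical helper. One plausible explanation for the figure 16, not confirmed by anything in this write-up, is backend_number_databases = 16 multiplied by backend_primary_parallel = 1 in proxy.toml.

```python
import re
from collections import Counter

# Captures the remote endpoint of lines ending in "->host:port (ESTABLISHED)".
ESTABLISHED_RE = re.compile(r"->(\S+)\s+\(ESTABLISHED\)")


def count_backend_conns(lsof_output: str) -> Counter:
    """Count ESTABLISHED connections per remote address in `lsof -i -P` output."""
    counts = Counter()
    for line in lsof_output.splitlines():
        match = ESTABLISHED_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample = (
    "codis-pro 21100 parallels 8u IPv4 2250487 0t0 TCP localhost:60636->localhost:6379 (ESTABLISHED)\n"
    "codis-pro 21100 parallels 9u IPv4 2252281 0t0 TCP localhost:60638->localhost:6379 (ESTABLISHED)\n"
    "codis-pro 20014 parallels 3u IPv4 2246306 0t0 TCP *:19000 (LISTEN)\n"
)
print(count_backend_conns(sample))  # Counter({'localhost:6379': 2})
```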

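When the master codis-server fails

When the master goes down (here the master node is 127.0.0.1:6380), the slave is not promoted to master by default. If sentinel is configured, the slave Redis node is promoted to master automatically when the failure occurs.

The error is visible in the dashboard:

Likewise, reading data through the proxy returns an error: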

192.168.2.204:19000> get 1

(error) ERR handle response, backend conn reset

After adding a master codis-server

As expected, once a master codis-server is added, codis-proxy connects to it automatically. The following was captured after adding node 127.0.0.1:6382 and running a rebalance:

$ lsof -i -P | grep "codis-pro" | grep 12955 | grep 6382 | wc -l

16

Errors

Startup fails after Codis was shut down abnormally

Situation

2020/07/27 21:25:06 main.go:171: [WARN] [0xc4202d1680] dashboard online failed [15]

2020/07/27 21:25:08 topom.go:189: [ERROR] store: acquire lock of codis-demo failed

[error]: zk: node already exists

6 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/models/zk/zkclient.go:247

github.com/CodisLabs/codis/pkg/models/zk.(*Client).create

5 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/models/zk/zkclient.go:196

github.com/CodisLabs/codis/pkg/models/zk.(*Client).Create.func1

4 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/models/zk/zkclient.go:129

github.com/CodisLabs/codis/pkg/models/zk.(*Client).shell

3 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/models/zk/zkclient.go:198

github.com/CodisLabs/codis/pkg/models/zk.(*Client).Create

2 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/models/store.go:119

github.com/CodisLabs/codis/pkg/models.(*Store).Acquire

1 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/topom/topom.go:188

github.com/CodisLabs/codis/pkg/topom.(*Topom).Start

0 /home/travis/gopath/src/github.com/CodisLabs/codis/cmd/dashboard/main.go:169

main.main

... ...

[stack]:

1 /home/travis/gopath/src/github.com/CodisLabs/codis/pkg/topom/topom.go:189

github.com/CodisLabs/codis/pkg/topom.(*Topom).Start

0 /home/travis/gopath/src/github.com/CodisLabs/codis/cmd/dashboard/main.go:169

main.main

... ...

2020/07/27 21:25:08 main.go:173: [PANIC] dashboard online failed, give up & abort :'(

[stack]:

0 /home/travis/gopath/src/github.com/CodisLabs/codis/cmd/dashboard/main.go:173

main.main

... ...

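Recovering from an abnormal codis-dashboard exit

When codis-dashboard starts, it writes a record to external storage that holds the dashboard information and also serves as a LOCK. On a clean exit, codis-dashboard deletes this record itself. After an abnormal exit the LOCK is left behind, so a restart usually fails. codis-admin therefore provides a way to remove it forcibly:

- Confirm that the codis-dashboard process has exited (this is important).

- Run codis-admin to remove the LOCK: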

$ ./codis-admin --remove-lock --product=<product name> --zookeeper=<zk address>

# e.g., ./codis-admin --remove-lock --product=codis-demo --zookeeper=127.0.0.1:2181

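Recovering from an abnormal codis-proxy exit

A codis-proxy is normally removed through codis-dashboard. For safety, codis-dashboard first sends an offline command to the codis-proxy and removes the proxy's record from external storage only after that succeeds. If the codis-proxy exited abnormally, this operation fails. In that case, use codis-admin to remove it:

- Confirm that the codis-proxy process has exited (this is important).

- Run codis-admin to remove the proxy: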

$ ./bin/codis-admin --dashboard=127.0.0.1:18080 --remove-proxy --addr=127.0.0.1:11080 --force

The --force option removes the node from external storage whether or not the offline operation succeeds, so make sure the codis-proxy process has exited before running it.

Operations

Remove a group

$ codis-admin --dashboard=codis-dashboard:18080 --group-del --gid=1 --addr=172.17.0.14:6379

This can also be done through codis-fe.

Take all slots offline

All slots must be offline first. The following command takes every slot offline:

$ ./codis-admin --dashboard=0.0.0.0:18080 --slots-assign --beg=0 --end=1023 --offline --confirm

# Then remove the group

$ codis-admin --dashboard=codis-dashboard:18080 --group-del --gid=1 --addr=172.17.0.14:6379

Summary

- Codis's biggest advantage is that when cluster capacity or CPU becomes the bottleneck, it lets us scale the cluster out quickly with "Rebalance All Slots": add codis-groups and redistribute the slots across all groups (a process also called "rebalance").

- Each codis-group contains exactly one master codis-server and may contain zero or more slave codis-servers.

Reference

- https://github.com/CodisLabs/codis/blob/release3.2/doc/tutorial_zh.md

- https://blog.csdn.net/u010383937/article/details/83987875

- https://github.com/CodisLabs/codis/issues/1631