Background

Why Service Mesh

People write very sophisticated applications and services on top of higher-level protocols like HTTP without ever thinking about how TCP manages the packets on their network. Microservices need the same arrangement: engineers working on services should be able to focus on business logic instead of spending time writing their own service-infrastructure code or managing libraries and frameworks across the whole fleet.

Service-to-service communication is what makes microservices possible. The logic governing communication can be coded into each service without a service mesh layer, but as communication gets more complex, a service mesh becomes more valuable. For cloud-native apps built in a microservices architecture, a service mesh is a way to compose a large number of discrete services into a functional application.

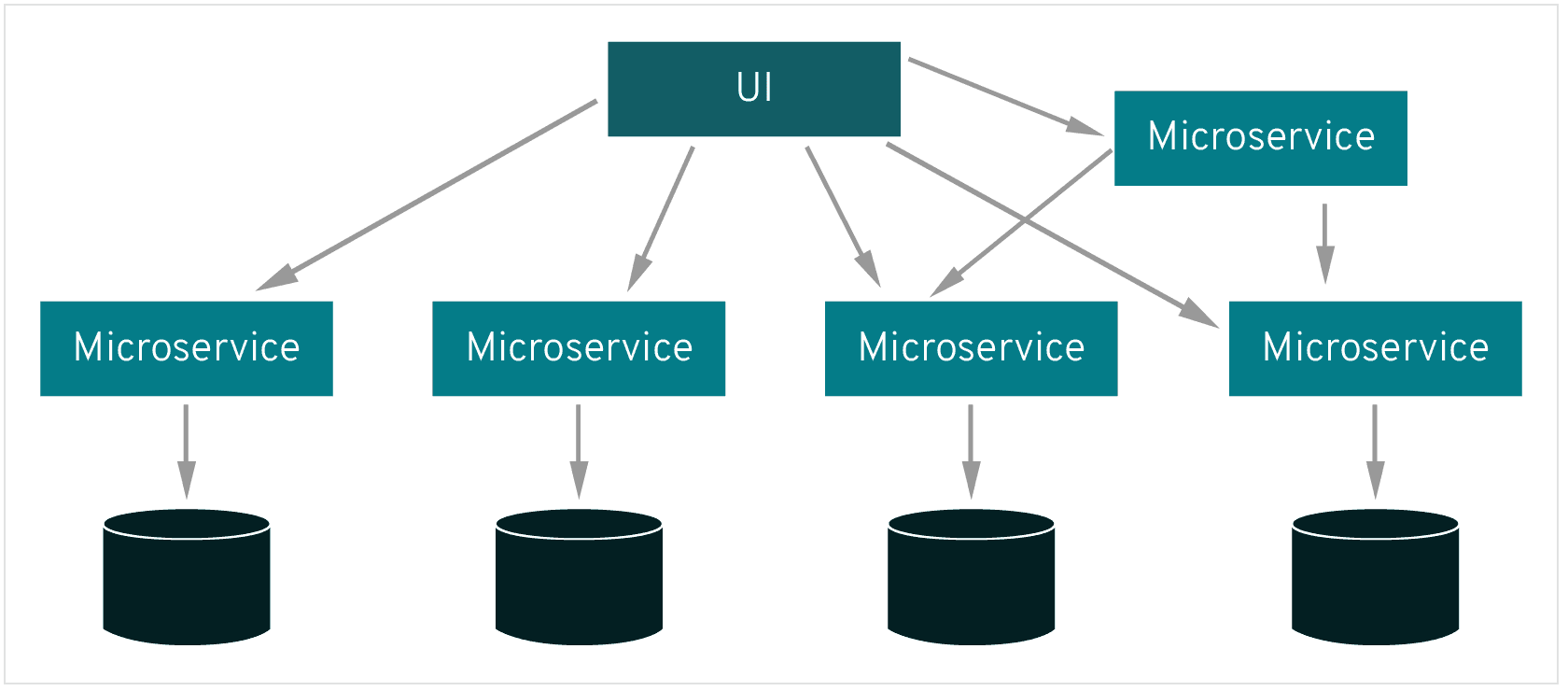

Traditional Microservice Architecture

A microservices architecture lets developers make changes to an app’s services without the need for a full redeploy. Unlike app development in other architectures, individual microservices are built by small teams with the flexibility to choose their own tools and coding languages. In short, microservices are built independently, communicate with each other, and can fail individually without escalating into an application-wide outage.

Proxy

A service mesh doesn’t introduce new functionality to an app’s runtime environment—apps in any architecture have always needed rules to specify how requests get from point A to point B. What’s different about a service mesh is that it takes the logic governing service-to-service communication out of individual services and abstracts it to a layer of infrastructure.

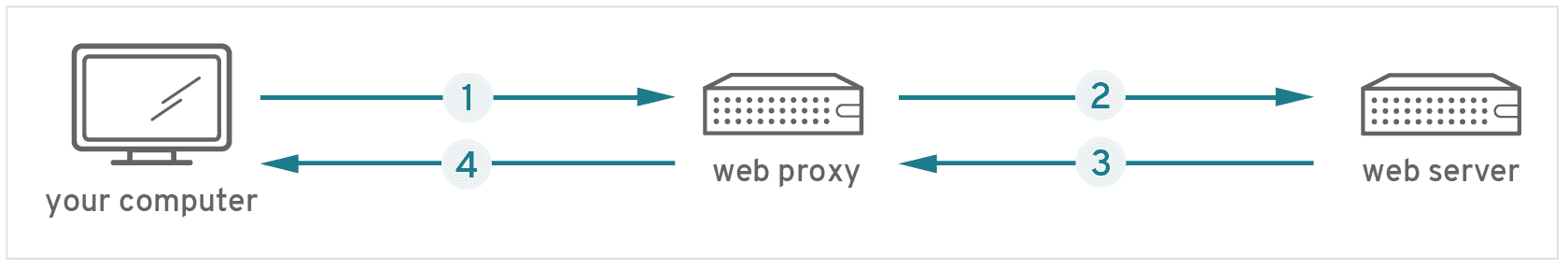

To do this, a service mesh is built into an app as an array of network proxies. Proxies are a familiar concept in enterprise IT—if you are accessing this webpage from a work computer, there’s a good chance you just used one:

- As your request for this page went out, it was first received by your company’s web proxy…

- After passing the proxy’s security measures, it was sent to the server that hosts this page…

- Next, this page was returned to the proxy and again checked against its security measures…

- And then it was finally sent from the proxy to you.
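The four steps above can be modeled as a function that checks traffic in both directions. This is only a sketch: `BLOCKED_HOSTS`, `fetch_from_origin`, and `response_is_safe` are hypothetical stand-ins for a real corporate proxy's policy and its upstream connection.

```python
from dataclasses import dataclass

# Hypothetical security policy: hosts the proxy refuses to contact
BLOCKED_HOSTS = {"malware.example"}


@dataclass
class Request:
    host: str
    path: str


@dataclass
class Response:
    status: int
    body: str


def fetch_from_origin(req: Request) -> Response:
    """Stand-in for the server that hosts the page (hypothetical)."""
    return Response(200, f"<html>content of {req.path}</html>")


def response_is_safe(resp: Response) -> bool:
    """Hypothetical content check applied to the page on its way back."""
    return "<script>evil" not in resp.body


def proxy(req: Request) -> Response:
    # 1. The outgoing request is received and checked by the proxy first
    if req.host in BLOCKED_HOSTS:
        return Response(403, "blocked by proxy")
    # 2. Having passed the check, it is forwarded to the origin server
    resp = fetch_from_origin(req)
    # 3. The returned page is checked against the proxy's rules again
    if not response_is_safe(resp):
        return Response(502, "response rejected by proxy")
    # 4. Finally, the page is handed back to the client
    return resp


print(proxy(Request("docs.example", "/mesh")).status)  # 200
print(proxy(Request("malware.example", "/")).status)   # 403
```

The client never talks to the origin directly; every policy decision lives in one place, which is the property a service mesh borrows.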

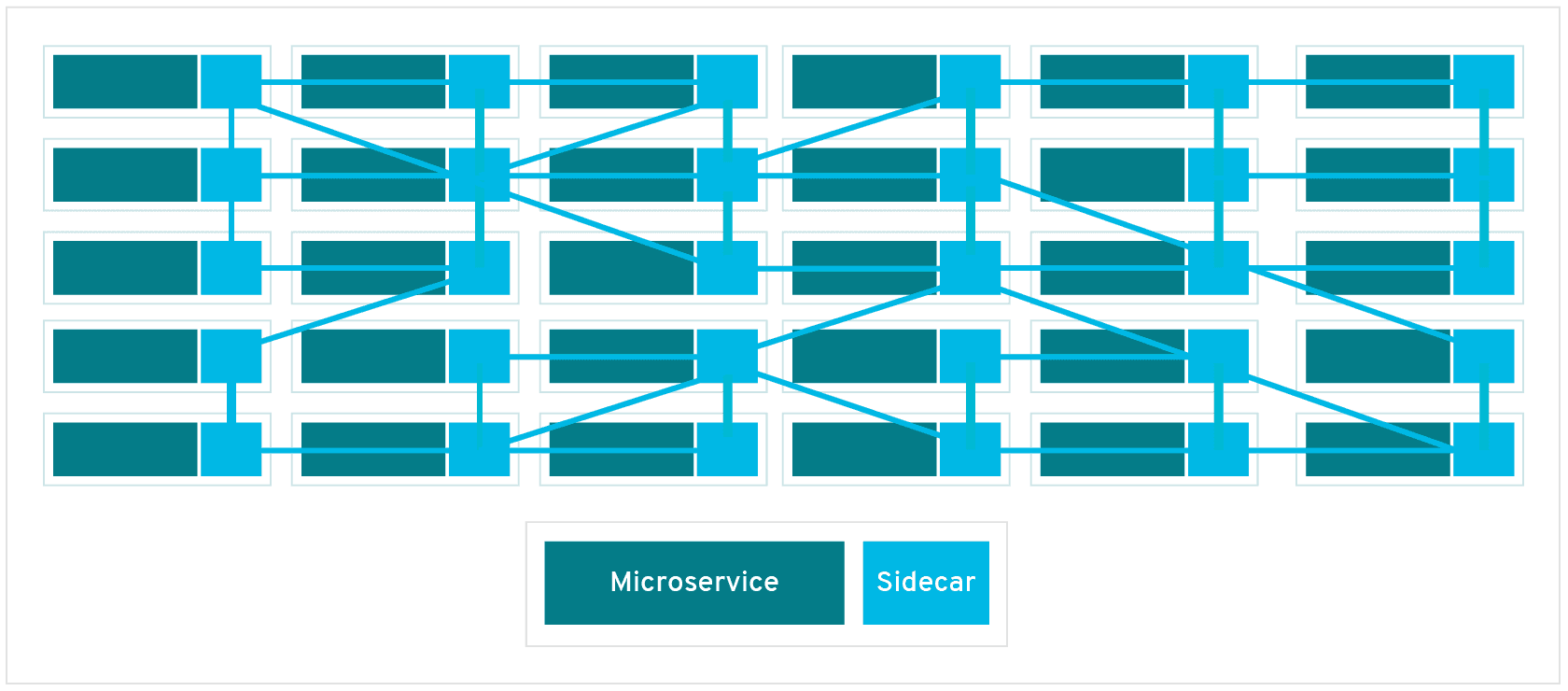

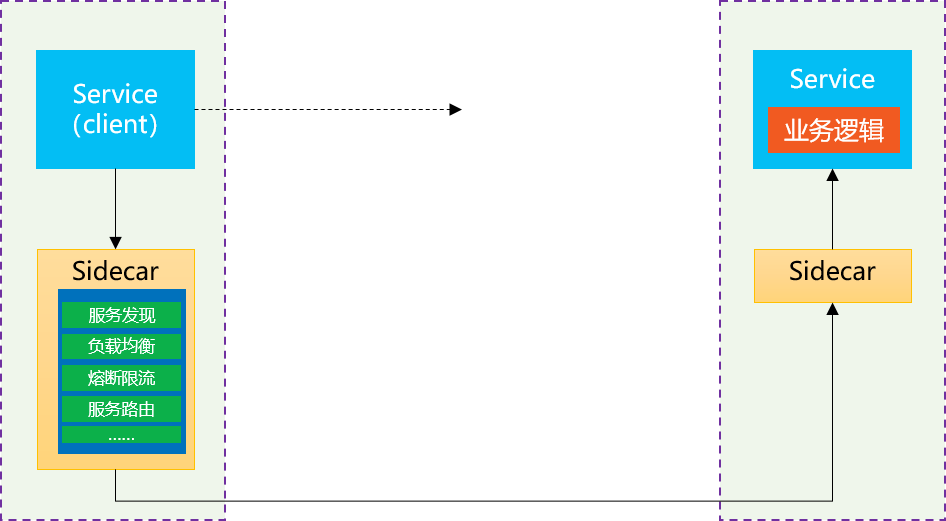

In a service mesh, requests are routed between microservices through proxies in their own infrastructure layer. The individual proxies that make up a service mesh are sometimes called “sidecars” because they run alongside each service rather than within it. Taken together, these sidecar proxies, decoupled from the services themselves, form a mesh network.
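A toy model of the sidecar arrangement: each service is paired with its own proxy, and service A reaches service B only through the two sidecars. Everything here (the `Sidecar` class, the shared `hops` log, the service names) is hypothetical and runs in one process; real sidecars are separate processes connected over the network.

```python
from typing import Callable, List

Handler = Callable[[str], str]


class Sidecar:
    """Toy sidecar proxy: sits next to one service and forwards its traffic."""

    def __init__(self, name: str, local_service: Handler, hops: List[str]) -> None:
        self.name = name
        self.local_service = local_service
        self.hops = hops  # shared log of every hop a request takes

    def outbound(self, request: str, remote: "Sidecar") -> str:
        # Hop 2: this sidecar forwards to the remote service's sidecar
        self.hops.append(f"{self.name} -> {remote.name}")
        return remote.inbound(request)

    def inbound(self, request: str) -> str:
        # Hop 3: the receiving sidecar delivers to its local service
        self.hops.append(f"{self.name} -> service")
        return self.local_service(request)


hops: List[str] = []


def service_b(request: str) -> str:
    return f"B handled {request}"


# Inbound traffic to A is not exercised in this example, so A's handler is trivial
sidecar_a = Sidecar("sidecar-a", lambda r: r, hops)
sidecar_b = Sidecar("sidecar-b", service_b, hops)


def service_a_call(request: str) -> str:
    # Hop 1: service A only ever talks to its local sidecar
    hops.append("service-a -> sidecar-a")
    return sidecar_a.outbound(request, sidecar_b)


print(service_a_call("order-42"))  # B handled order-42
print(hops)  # ['service-a -> sidecar-a', 'sidecar-a -> sidecar-b', 'sidecar-b -> service']
```

Note that neither service contains any routing code, and one logical call produces three hops, which is the root of the performance concern about service meshes.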

WHAT IS A SERVICE MESH?

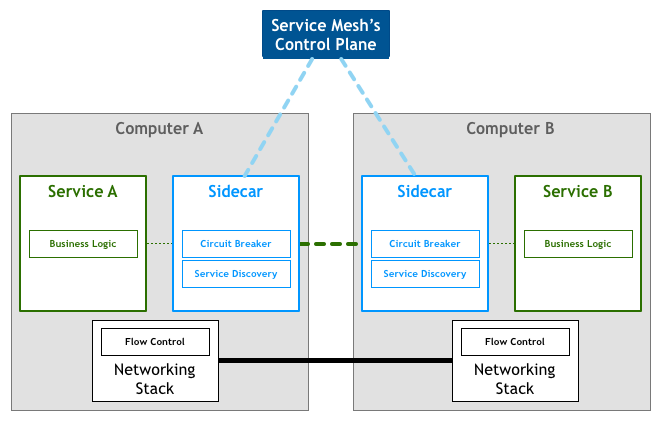

A service mesh is a dedicated infrastructure layer for handling service-to-service communication. It’s responsible for the reliable delivery of requests through the complex topology of services that comprise a modern, cloud native application. In practice, the service mesh is typically implemented as an array of lightweight network proxies that are deployed alongside application code, without the application needing to be aware.

Comparing the Service Mesh to TCP/IP

The service mesh is a networking model that sits at a layer of abstraction above TCP/IP. It assumes that the underlying L3/L4 network is present and capable of delivering bytes from point to point. (It also assumes that this network, as with every other aspect of the environment, is unreliable; the service mesh must therefore also be capable of handling network failures.)

In some ways, the service mesh is analogous to TCP/IP. Just as the TCP stack abstracts the mechanics of reliably delivering bytes between network endpoints, the service mesh abstracts the mechanics of reliably delivering requests between services. Like TCP, the service mesh doesn’t care about the actual payload or how it’s encoded. The application has a high-level goal (“send something from A to B”), and the job of the service mesh, like that of TCP, is to accomplish this goal while handling any failures along the way.
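The “doesn’t care about the payload” property can be sketched as a proxy that decodes just enough metadata to route a request and forwards the body as opaque bytes. The wire format here (a 4-byte big-endian header length, a JSON header with a `destination` field, then the body) is a hypothetical example, not any real mesh's protocol.

```python
import json
import struct
from typing import Dict, Tuple


def encode_frame(header: Dict[str, str], body: bytes) -> bytes:
    """Hypothetical wire format: 4-byte big-endian header length, JSON header, raw body."""
    header_bytes = json.dumps(header).encode("utf-8")
    return struct.pack(">I", len(header_bytes)) + header_bytes + body


def route(frame: bytes) -> Tuple[str, bytes]:
    """Decode only the header to choose a destination; pass the body through untouched."""
    (header_len,) = struct.unpack_from(">I", frame, 0)
    header = json.loads(frame[4:4 + header_len].decode("utf-8"))
    body = frame[4 + header_len:]  # never parsed: could be JSON, protobuf, anything
    return header["destination"], body


payload = b"\x08\x96\x01"  # opaque bytes the proxy never interprets
frame = encode_frame({"destination": "inventory", "method": "GetStock"}, payload)
destination, forwarded = route(frame)
print(destination, forwarded == payload)  # inventory True
```

Because the body is never decoded, the same proxy works for any payload encoding, much as TCP delivers bytes without knowing what they mean.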

Unlike TCP, the service mesh has a significant goal beyond “just make it work”: it provides a uniform, application-wide point for introducing visibility and control into the application runtime. The explicit goal of the service mesh is to move service communication out of the realm of the invisible, implied infrastructure, and into the role of a first-class member of the ecosystem—where it can be monitored, managed and controlled.
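Because every request passes through the mesh, the proxy is a natural single place to record telemetry without touching service code. A minimal sketch, assuming a hypothetical in-memory `Metrics` store and services modeled as plain functions; real meshes typically export this kind of data to an external monitoring system instead.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List


class Metrics:
    """Hypothetical in-memory telemetry store kept by the proxy layer."""

    def __init__(self) -> None:
        self.requests: Dict[str, int] = defaultdict(int)
        self.failures: Dict[str, int] = defaultdict(int)
        self.latencies: Dict[str, List[float]] = defaultdict(list)


def instrumented(service: str, handler: Callable[[str], str],
                 metrics: Metrics) -> Callable[[str], str]:
    """Wrap a handler the way a proxy would: same behavior, plus uniform telemetry."""
    def proxied(request: str) -> str:
        start = time.perf_counter()
        metrics.requests[service] += 1
        try:
            return handler(request)
        except Exception:
            metrics.failures[service] += 1
            raise  # the caller still sees the original error
        finally:
            metrics.latencies[service].append(time.perf_counter() - start)
    return proxied


metrics = Metrics()


def inventory(request: str) -> str:
    if request == "unknown-sku":
        raise KeyError(request)
    return f"in stock: {request}"


call_inventory = instrumented("inventory", inventory, metrics)
call_inventory("sku-1")
try:
    call_inventory("unknown-sku")
except KeyError:
    pass
print(metrics.requests["inventory"], metrics.failures["inventory"])  # 2 1
```

The `inventory` function knows nothing about metrics; the same wrapper could be applied to every service, which is what makes the visibility uniform.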

WHAT DOES A SERVICE MESH ACTUALLY DO?

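Concretely, the mesh takes over behaviors such as:

- circuit breaking: stop sending requests to an instance that keeps failing, so one failure does not cascade

- latency-aware load balancing: send more traffic to the instances that are currently responding fastest

- eventually consistent (“advisory”) service discovery: route using the last known set of instances, tolerating brief staleness in the registry

- retries: transparently re-send requests that failed for transient reasons

- deadlines: bound how long a request may take, so callers do not wait indefinitely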
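Two of these behaviors, retries bounded by a deadline and circuit breaking, can be sketched in a few lines. The `CircuitBreaker` class, the `call_with_retries` helper, and all thresholds are hypothetical and simplified (no jitter, no limit on concurrent trial calls); production proxies such as Envoy or Linkerd implement far more careful versions.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected immediately."""


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; allows a trial call after `reset_after` s."""

    def __init__(self, max_failures: int = 3, reset_after: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast instead of sending more traffic to an unhealthy instance
                raise CircuitOpenError("circuit open")
            self.opened_at = None  # cool-down over: let a trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


def call_with_retries(fn: Callable[[], str], attempts: int, deadline: float,
                      backoff: float = 0.01,
                      clock: Callable[[], float] = time.monotonic) -> str:
    """Retry `fn` up to `attempts` times, never starting an attempt after `deadline`."""
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        if clock() >= deadline:
            break  # the caller's time budget is spent
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open circuit only burns the budget
        except Exception as err:
            last_error = err
            time.sleep(backoff)
    raise TimeoutError("retries exhausted or deadline passed") from last_error


# Usage: a backend that resets the connection twice, then succeeds
outcomes = iter([ConnectionError("reset"), ConnectionError("reset"), "200 OK"])


def flaky_backend() -> str:
    outcome = next(outcomes)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


result = call_with_retries(flaky_backend, attempts=3, deadline=time.monotonic() + 1.0)
print(result)  # 200 OK

# A breaker driven by a fake clock opens after two consecutive failures
fake_now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: fake_now[0])


def always_down() -> str:
    raise ConnectionError("connection refused")


for _ in range(2):
    try:
        breaker.call(always_down)
    except ConnectionError:
        pass
print(breaker.opened_at)  # 0.0, so calls before t=10.0 now fail fast
```

In a mesh, logic like this runs in the sidecar, so every service gets it without linking a library.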

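Pros and Cons of Service Mesh

Advantages of Service Mesh

- Multi-language support, and mesh upgrades that are transparent to applications

- Non-intrusively adds advanced features such as traffic control, security, and observability to applications

- Makes it possible to push functionality unrelated to business logic down into the infrastructure, which slims applications down, lets them focus on the business, and in turn helps make them cloud native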
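To make non-intrusive traffic control concrete: a canary release can be done entirely in the proxy by splitting traffic between two versions of a service, with no change to either version's code. A minimal sketch, assuming a hypothetical percentage-weight config and a request ID to hash on; real meshes (for example, Istio's weighted routes) express this declaratively rather than in code.

```python
import hashlib
from typing import Dict


def pick_subset(request_id: str, weights: Dict[str, int]) -> str:
    """Map a request to a version subset by stable hashing into 100 buckets.

    `weights` are percentages that must sum to 100 (hypothetical config format).
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # sha256 rather than hash(): Python's str hash is randomized per process
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 100
    cumulative = 0
    for subset, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return subset
    raise AssertionError("unreachable when weights sum to 100")


canary = {"v1": 90, "v2": 10}
routed = [pick_subset(f"req-{i}", canary) for i in range(10_000)]
print(routed.count("v2") / len(routed))  # should be close to 0.10
```

Hashing a stable ID, rather than choosing randomly, keeps a given request ID pinned to the same version while the weights stay unchanged.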

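Disadvantages of Service Mesh

Performance

An obvious concern: in a traditional microservice architecture, Service A calls Service B with a single RPC, but once a service mesh is introduced, that one remote call becomes three (A to its sidecar, A's sidecar to B's sidecar, and B's sidecar to B). Worries about performance follow naturally: if one remote call turns into three, how much does performance drop, and how much latency is added?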

The Basic Idea Behind a Service Mesh Implementation

Let's quickly review the basic approach a service mesh takes:

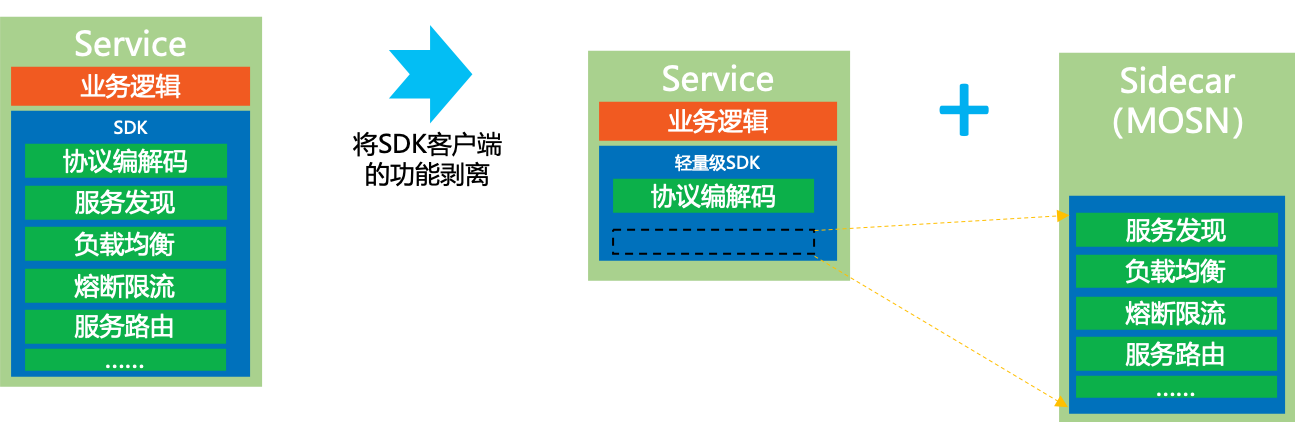

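In an SDK-based approach, the application contains both business logic and all kinds of non-business functionality. Although the SDK makes that code reusable, at deployment time these functions are still mixed together in a single process.

In a service mesh, we strip the SDK client's functionality out of the application, split it into an independent process, and deploy it in the sidecar pattern, so that the business process can focus on business logic:

- Business process: focuses on the business implementation; does not need to be aware of the mesh

- Sidecar process: focuses on inter-service communication and related capabilities; independent of business logic

We call this “separation of concerns”: business development teams can focus on business logic, while the underlying middleware team (or infrastructure team) can focus on the general-purpose functionality outside business logic.

Once the sidecar splits the application into two independent processes, the business application and the sidecar can be “maintained independently”: either one can be updated or upgraded on its own.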
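The separation of concerns can be expressed as an interface: business code depends only on a `Transport`, and whether the communication features live in an in-process SDK or in a separate sidecar becomes a deployment choice. All class names and the address are hypothetical, and the sidecar is simulated in-process; in a real deployment `SidecarTransport` would send the request over localhost to the sidecar process.

```python
from abc import ABC, abstractmethod
from typing import Dict


class Transport(ABC):
    """All that business code sees: 'send this request to that service'."""

    @abstractmethod
    def send(self, service: str, request: str) -> str: ...


class SdkTransport(Transport):
    """SDK model: discovery and similar features are linked into the business process."""

    def __init__(self, registry: Dict[str, str]) -> None:
        self.registry = registry  # hypothetical service-discovery table

    def send(self, service: str, request: str) -> str:
        address = self.registry[service]  # non-business logic inside the app process
        return f"sdk->{address}:{request}"


class SidecarProcess:
    """Stand-in for the separate sidecar process, upgraded on its own schedule."""

    version = "v2"

    def __init__(self, registry: Dict[str, str]) -> None:
        self.registry = registry

    def handle(self, service: str, request: str) -> str:
        return f"sidecar-{self.version}->{self.registry[service]}:{request}"


class SidecarTransport(Transport):
    """Mesh model: the app only forwards to its local sidecar."""

    def __init__(self, sidecar: SidecarProcess) -> None:
        self.sidecar = sidecar  # in reality a localhost connection, not an object

    def send(self, service: str, request: str) -> str:
        return self.sidecar.handle(service, request)


def place_order(transport: Transport, item: str) -> str:
    """Business logic: identical under both deployment models."""
    return transport.send("inventory", f"reserve {item}")


registry = {"inventory": "10.0.0.7:8080"}
print(place_order(SdkTransport(registry), "book"))
# sdk->10.0.0.7:8080:reserve book
print(place_order(SidecarTransport(SidecarProcess(registry)), "book"))
# sidecar-v2->10.0.0.7:8080:reserve book
```

Upgrading `SidecarProcess` (say, to a new version) changes nothing in `place_order`, which is the “independent maintenance” property in miniature.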

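Analyzing the Scenario Behind the Performance Numbers

Consider the performance comparison data from Ant Financial's production ServiceMesh rollout. In principle, splitting out the sidecar only moves the SDK's features into the sidecar; overall nothing is added or removed, so in theory the SDK and the sidecar should perform the same. However, because a remote call is added between the application and the sidecar, performance will inevitably take some hit.

Start with the first question: why is the performance loss so small, when intuition says that one remote call has become three?

This “intuition” focuses entirely on the cost of remote calls and unconsciously ignores the other costs, such as the SDK's own overhead and the cost of processing business logic.

Real-world applications, however, do not work that way:

- Business logic accounts for most of the cost: by comparison, sidecar forwarding consumes far fewer resources, typically by a factor of ten, a hundred, or even a thousand.

- The SDK has costs too: even ignoring its many complex features, just encoding and decoding (serializing) messages, especially the body, is not cheap. Moreover, the original client-side and server-side codecs must process the body, whereas a sidecar usually reads only the header and passes the body through, so its encoding and decoding are much faster. Finally, the two extra hops between each application and its sidecar go over localhost rather than a real network, so they are also much faster.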
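The argument can be put into a rough additive latency model. This is a back-of-envelope sketch only: `request_latency_ms` is a hypothetical helper, and every number below is an invented placeholder, not Ant Financial's data or any other measurement. It assumes, as argued above, that the SDK's work moves into the sidecar rather than being duplicated.

```python
def request_latency_ms(business: float, codec: float, network_hop: float,
                       localhost_hop: float, forwarding: float, mesh: bool) -> float:
    """Additive latency model for one A -> B call; every input is an assumption."""
    # Baseline: business logic + SDK encode/decode + one real network hop
    total = business + codec + network_hop
    if mesh:
        # Mesh adds A -> sidecar and sidecar -> B over localhost, plus the two
        # sidecars' header-only forwarding work; SDK features move, not multiply
        total += 2 * localhost_hop + 2 * forwarding
    return total


# Invented placeholder numbers (milliseconds), chosen only for illustration
params = dict(business=20.0, codec=1.0, network_hop=1.0,
              localhost_hop=0.05, forwarding=0.1)
baseline = request_latency_ms(**params, mesh=False)
with_mesh = request_latency_ms(**params, mesh=True)
overhead = (with_mesh - baseline) / baseline
print(f"{baseline:.2f} ms -> {with_mesh:.2f} ms ({overhead:.1%} overhead)")
# 22.00 ms -> 22.30 ms (1.4% overhead)
```

The relative overhead shrinks as `business` grows and grows if the extra hops cross a real network, which is exactly the gap between the “three remote calls” intuition and the localhost reality.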

Service Mesh Frameworks

Linkerd

The industry's first service mesh framework.

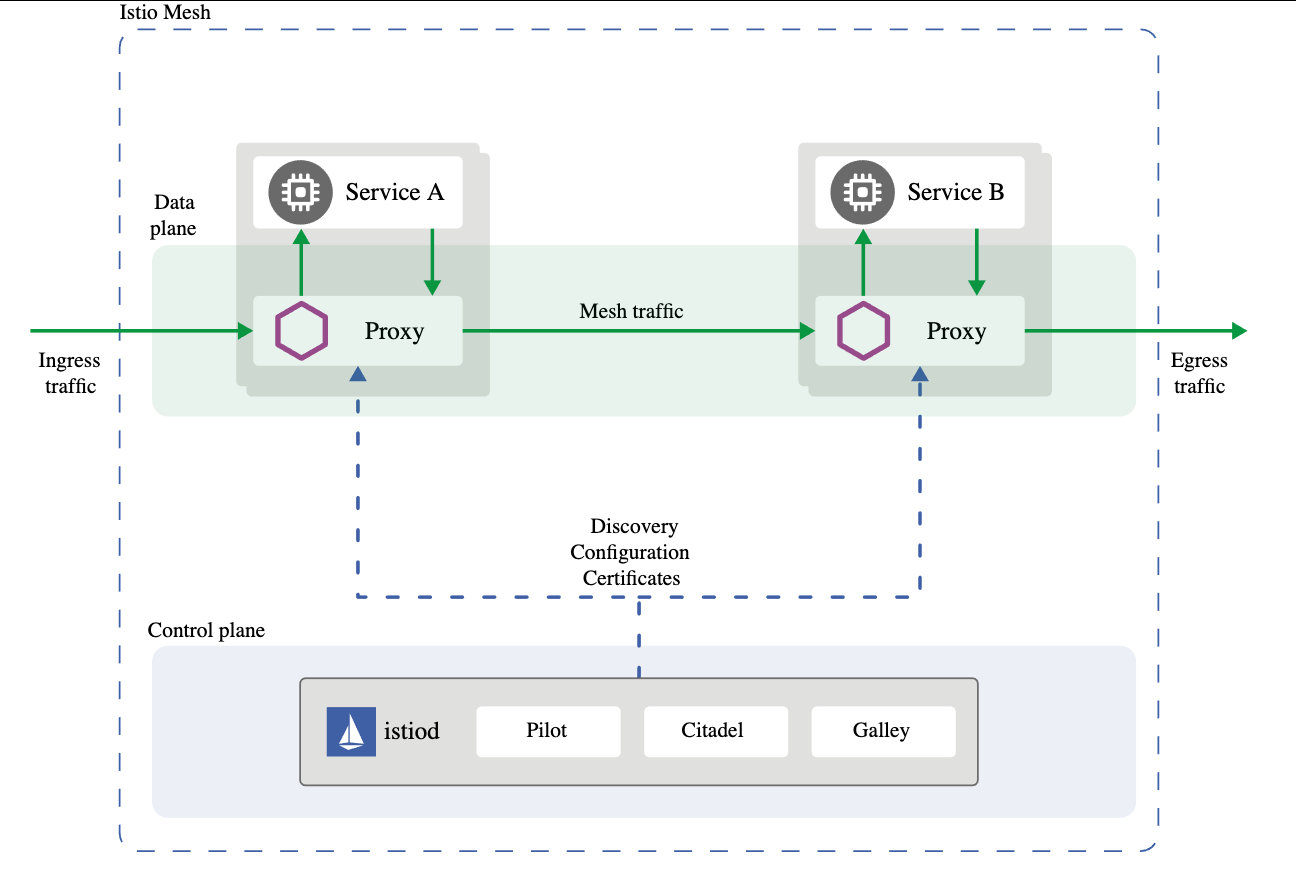

Istio

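In both architecture and features, Istio goes a level beyond Linkerd and Envoy: it uses Envoy as its data-plane sidecar proxy and adds a control plane on top to manage those proxies.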

SOFAMosn - from Ant Financial

Reference

- https://servicemesh.io/

- https://philcalcado.com/2017/08/03/pattern_service_mesh.html

- The industry's first article to describe the service mesh concept in detail - https://buoyant.io/2017/04/25/whats-a-service-mesh-and-why-do-i-need-one/

- https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh

- https://istio.io/latest/docs/concepts/what-is-istio/

- https://istio.io/latest/docs/ops/deployment/architecture/

- https://cizixs.com/2018/08/26/what-is-istio/

- Ant Financial's Service Mesh in Practice: A Deep Dive - https://skyao.io/talk/201910-ant-finance-service-mesh-deep-practice/