Transactions

A transaction is a way for an application to group several reads and writes together into a logical unit. Conceptually, all the reads and writes in a transaction are executed as one operation: either the entire transaction succeeds (commit) or it fails (abort, rollback). If it fails, the application can safely retry. With transactions, error handling becomes much simpler for an application, because it doesn’t need to worry about partial failure—i.e., the case where some operations succeed and some fail (for whatever reason).
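The commit/abort/retry cycle described above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a production pattern: the helper `run_in_transaction`, the `notes` table, and the simulated failure are all hypothetical names introduced for the example.

```python
import sqlite3

def run_in_transaction(conn, work, max_attempts=3):
    """Run work(conn) as one transaction and retry it if it aborts."""
    for attempt in range(1, max_attempts + 1):
        try:
            with conn:  # commits if the block succeeds, rolls back if it raises
                return work(conn)
        except sqlite3.OperationalError:
            # The rollback undid every write, so retrying is safe.
            if attempt == max_attempts:
                raise

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")

attempts = []

def flaky_insert(c):
    attempts.append(1)
    c.execute("INSERT INTO notes (body) VALUES ('hello')")
    if len(attempts) == 1:
        # Hypothetical transient error on the first attempt only.
        raise sqlite3.OperationalError("simulated transient failure")

run_in_transaction(conn, flaky_insert)
row_count = conn.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
print(row_count)  # 1: the aborted first attempt left nothing behind
```

Because the first attempt is rolled back as a whole, the retry does not produce a duplicate row.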

ACID

A transaction is a single logical unit of work which accesses and possibly modifies the contents of a database. Transactions access data using read and write operations.

In computer science, ACID (atomicity, consistency, isolation, durability) is a set of properties of database transactions intended to guarantee data validity despite errors, power failures, and other mishaps. In the context of databases, a sequence of database operations that satisfies the ACID properties (which can be perceived as a single logical operation on the data) is called a transaction.

For example, a transfer of funds from one bank account to another, even involving multiple changes such as debiting one account and crediting another, is a single transaction.

Atomicity

Transactions are often composed of multiple statements. Atomicity guarantees that each transaction is treated as a single “unit”, which either succeeds completely, or fails completely: if any of the statements constituting a transaction fails to complete, the entire transaction fails and the database is left unchanged. An atomic system must guarantee atomicity in each and every situation, including power failures, errors and crashes.

A guarantee of atomicity prevents updates to the database occurring only partially, which can cause greater problems than rejecting the whole series outright. As a consequence, the transaction cannot be observed to be in progress by another database client. At one moment in time, it has not yet happened, and at the next it has already occurred in whole (or nothing happened if the transaction was cancelled in progress).

An example of an atomic transaction is a monetary transfer from bank account A to account B. It consists of two operations: withdrawing the money from account A and depositing it into account B. Performing these operations in an atomic transaction ensures that the database remains in a consistent state; that is, money is neither debited nor credited if either of those two operations fails.

Example

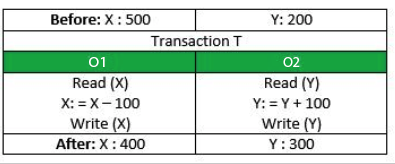

Consider the following transaction T consisting of O1 and O2: Transfer of 100 from account X to account Y.

If the transaction fails after completion of O1 but before completion of O2 (say, after write(X) but before write(Y)), then the amount has been deducted from X but not added to Y.

This results in an inconsistent database state. Therefore, the transaction must be executed in its entirety in order to ensure correctness of the database state.
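The failure between O1 and O2 can be reproduced in a small sketch using Python's `sqlite3`. The `accounts` table, the starting balances (500 and 200), and the `fail_between` flag are assumptions made for illustration; they are not taken from the figure.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
conn.execute("INSERT INTO accounts VALUES ('X', 500), ('Y', 200)")
conn.commit()

def transfer(conn, src, dst, amount, fail_between=False):
    """Move amount from src to dst; returns True on commit, False on rollback."""
    try:
        with conn:  # one transaction: commit on success, rollback on exception
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )  # O1: debit
            if fail_between:
                raise RuntimeError("simulated crash between O1 and O2")
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )  # O2: credit
        return True
    except RuntimeError:
        return False

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))

failed = transfer(conn, "X", "Y", 100, fail_between=True)
after_failure = balances(conn)   # the debit from O1 was rolled back
succeeded = transfer(conn, "X", "Y", 100)
after_success = balances(conn)   # both O1 and O2 applied together
print(after_failure, after_success)
```

After the simulated failure the balances are unchanged, so the debit without the matching credit is never visible.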

Consistency

Consistency ensures that a transaction can only bring the database from one valid state to another, maintaining database invariants: any data written to the database must be valid according to all defined rules, including constraints, cascades, triggers, and any combination thereof. This prevents database corruption by an illegal transaction, but does not guarantee that a transaction is correct.

For example, suppose there is an integrity constraint requiring that the value in A and the value in B sum to 100. This constraint must hold both before and after a transaction is executed.

Consistency is checked after each transaction. It is known that A + B = 100 before the transaction begins. If the transaction successfully removes 10 from A, atomicity is achieved. However, a validation check will show that A + B = 90, which violates the rules of the database. The entire transaction must be cancelled and the affected rows rolled back to their pre-transaction state.
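One way to express the A + B = 100 rule is a `CHECK` constraint, sketched here with `sqlite3`. The `pair` table and the `apply` helper are hypothetical, and A and B are assumed to be columns of the same row. Note one difference from the description above: SQLite validates `CHECK` constraints per statement rather than at commit time, so the violation is detected as soon as the offending update runs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE pair ("
    " id INTEGER PRIMARY KEY, a INTEGER, b INTEGER,"
    " CHECK (a + b = 100))"
)
conn.execute("INSERT INTO pair VALUES (1, 60, 40)")
conn.commit()

def apply(conn, statements):
    """Run the statements as one transaction; roll back on a constraint violation."""
    try:
        with conn:
            for sql in statements:
                conn.execute(sql)
        return "committed"
    except sqlite3.IntegrityError:
        return "rolled back"

# Removing 10 from A alone would leave A + B = 90, so it is rejected.
bad = apply(conn, ["UPDATE pair SET a = a - 10 WHERE id = 1"])
# Moving 10 from A to B keeps the invariant, so it commits.
good = apply(conn, ["UPDATE pair SET a = a - 10, b = b + 10 WHERE id = 1"])
state = conn.execute("SELECT a, b FROM pair").fetchone()
print(bad, good, state)
```

The constraint only rejects invalid states; it cannot tell whether moving 10 from A to B was what the application actually intended.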

Isolation

Transactions are often executed concurrently (e.g., reading and writing to multiple tables at the same time). Isolation ensures that concurrent execution of transactions leaves the database in the same state that would have been obtained if the transactions were executed sequentially. In other words, isolation ensures that multiple transactions can occur concurrently without leading to inconsistency of database state.

Isolation is the main goal of concurrency control; depending on the method used, the effects of an incomplete transaction might not even be visible to other transactions.

Two-phase locking is often applied to guarantee full isolation.

The classic database textbooks formalize isolation as serializability, which means that each transaction can pretend that it is the only transaction running on the entire database. The database ensures that when the transactions have committed, the result is the same as if they had run serially (one after another), even though in reality they may have run concurrently.

However, in practice, serializable isolation is rarely used, because it carries a performance penalty. Some popular databases, such as Oracle 11g, don’t even implement it. In Oracle there is an isolation level called “serializable,” but it actually implements something called snapshot isolation, which is a weaker guarantee than serializability.

Example of using Isolation

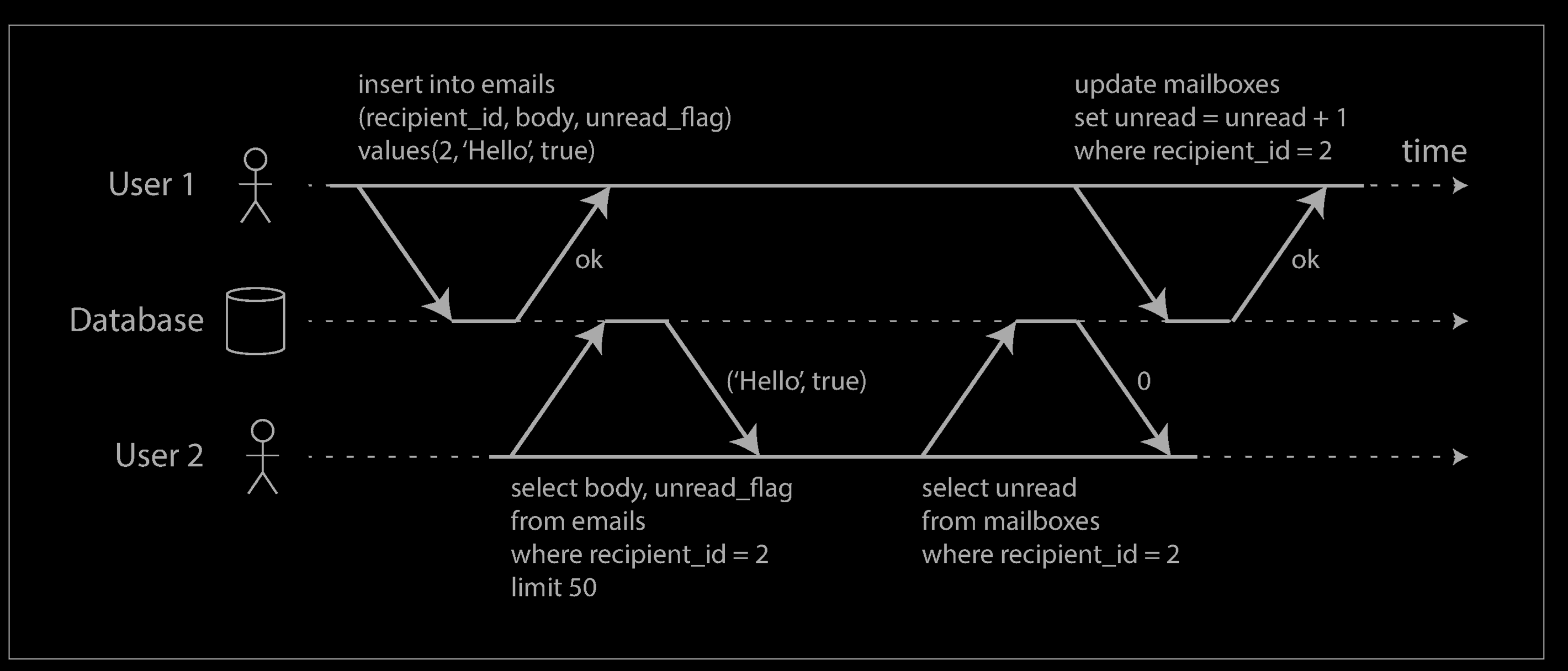

User 2 experiences an anomaly: the mailbox listing shows an unread message, but the counter shows zero unread messages because the counter increment has not yet happened. Isolation would have prevented this issue by ensuring that user 2 sees either both the inserted email and the updated counter, or neither, but not an inconsistent halfway point.
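The mailbox scenario can be approximated with two `sqlite3` connections to the same database file, one acting as the writer and one as user 2. The table names (`emails`, `mailbox`) and the single-row counter are assumptions for this sketch, and it relies on SQLite's default rollback-journal mode, in which uncommitted changes are not visible to other connections.

```python
import os
import sqlite3
import tempfile

path = os.path.join(tempfile.mkdtemp(), "mail.db")

writer = sqlite3.connect(path)
writer.execute("CREATE TABLE emails (id INTEGER PRIMARY KEY, unread INTEGER)")
writer.execute("CREATE TABLE mailbox (unread_count INTEGER)")
writer.execute("INSERT INTO mailbox VALUES (0)")
writer.commit()

reader = sqlite3.connect(path)  # plays the role of user 2

def view(conn):
    """Return (unread emails listed, unread counter) from a single statement."""
    rows = conn.execute(
        "SELECT (SELECT COUNT(*) FROM emails WHERE unread = 1),"
        "       (SELECT unread_count FROM mailbox)"
    ).fetchall()
    return rows[0]

# The writer's transaction starts implicitly with the INSERT.
writer.execute("INSERT INTO emails (unread) VALUES (1)")
mid_view = view(reader)      # taken between the insert and the counter update
writer.execute("UPDATE mailbox SET unread_count = unread_count + 1")
writer.commit()
after_view = view(reader)    # taken after commit

print(mid_view, after_view)
writer.close()
reader.close()
```

The reader sees neither change while the transaction is open and both changes after it commits, never the halfway point.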

Durability

Durability guarantees that once a transaction has been committed, it will remain committed even in the case of a system failure (e.g., a hardware fault, power outage, or database crash). This usually means that completed transactions (or their effects) are recorded in a persistent transaction log.

If the system suffers a crash or a power outage, committed transactions whose changes had not yet reached the data files can be replayed from that log.

Consider a transaction that transfers 10 from A to B. First it removes 10 from A, then it adds 10 to B. At this point, the user is told the transaction was a success; however, the changes are still queued in the disk buffer waiting to be written to disk. Power fails and the changes are lost. The user assumes (understandably) that the changes persist, which is exactly the outcome that durability must prevent.
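A common way to avoid the lost-write scenario is to append each transaction to a log and force it to disk before reporting success. The toy `DurableStore` below sketches that idea with a JSON-lines log and `os.fsync`; the class, its methods, and the file name are hypothetical. A real log would also need checksums and handling of torn trailing records, which are omitted here.

```python
import json
import os
import tempfile

class DurableStore:
    """Toy key-value store that acknowledges a commit only after the log is fsynced."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()
        self.log = open(log_path, "a", encoding="utf-8")

    def _replay(self):
        # Recovery: rebuild in-memory state from every logged transaction.
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, encoding="utf-8") as f:
            for line in f:
                self.data.update(json.loads(line))

    def commit(self, changes):
        # One log record per transaction, forced to disk before acknowledging.
        self.log.write(json.dumps(changes) + "\n")
        self.log.flush()
        os.fsync(self.log.fileno())
        self.data.update(changes)
        return "committed"

    def close(self):
        self.log.close()

path = os.path.join(tempfile.mkdtemp(), "txn.log")

store = DurableStore(path)
store.commit({"A": 100, "B": 100})
ack = store.commit({"A": 90, "B": 110})  # transfer 10 from A to B
store.close()
del store  # simulate losing all in-memory state in a crash

recovered = DurableStore(path)  # restart: state is replayed from the log
print(ack, recovered.data)
recovered.close()
```

The acknowledgement is returned only after `os.fsync`, so anything the user was told had succeeded can be rebuilt from the log on restart.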

Achieving ACID

Locking

Many databases rely upon locking to provide ACID capabilities.

Locking means that the transaction marks the data that it accesses so that the DBMS knows not to allow other transactions to modify it until the first transaction succeeds or fails. The lock must always be acquired before processing data, including data that is read but not modified.

Non-trivial transactions typically require a large number of locks, resulting in substantial overhead as well as blocking other transactions.

For example, if user A is running a transaction that has to read a row of data that user B wants to modify, user B must wait until user A’s transaction completes. Two-phase locking is often applied to guarantee full isolation.
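The locking discipline can be sketched in memory with Python threads. This is a simplified form of strict two-phase locking: each transaction acquires every lock it needs before touching data and releases them all only at the end. The `LockManager` and `Transaction` classes are hypothetical, only exclusive locks are modelled (no shared read locks), and locks are taken in sorted key order as a simple way to avoid deadlock in this toy.

```python
import threading

class LockManager:
    """Hands out one lock per data item."""

    def __init__(self):
        self._locks = {}
        self._guard = threading.Lock()

    def lock_for(self, key):
        with self._guard:
            return self._locks.setdefault(key, threading.Lock())

class Transaction:
    """Growing phase: acquire(). Shrinking phase: release_all() at the very end."""

    def __init__(self, manager):
        self.manager = manager
        self.held = {}

    def acquire(self, key):
        if key not in self.held:
            lock = self.manager.lock_for(key)
            lock.acquire()  # blocks while another transaction holds the item
            self.held[key] = lock

    def release_all(self):
        for lock in self.held.values():
            lock.release()
        self.held.clear()

balances = {"A": 100, "B": 100}
manager = LockManager()

def transfer(src, dst, amount):
    txn = Transaction(manager)
    try:
        for key in sorted((src, dst)):  # fixed order avoids deadlock here
            txn.acquire(key)
        balances[src] -= amount  # data is touched only while locks are held
        balances[dst] += amount
    finally:
        txn.release_all()  # no lock is released before the work is done

threads = [threading.Thread(target=transfer, args=("A", "B", 1)) for _ in range(50)]
threads += [threading.Thread(target=transfer, args=("B", "A", 1)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(balances)
```

Every transfer waits for conflicting transfers to finish, which is the blocking overhead the text describes.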

Multiversion Concurrency Control

An alternative to locking is multiversion concurrency control, in which the database provides each reading transaction the prior, unmodified version of data that is being modified by another active transaction.

This allows readers to operate without acquiring locks, i.e., writing transactions do not block reading transactions, and readers do not block writers.

Going back to the example, when user A’s transaction requests data that user B is modifying, the database provides A with the version of that data that existed when user B started his transaction. User A gets a consistent view of the database even if other users are changing data. One implementation, namely snapshot isolation, relaxes the isolation property.
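The versioning idea can be sketched as a store that keeps every committed version of a value and lets each reader pick the newest version no later than its own snapshot. The `MVCCStore` class is hypothetical and heavily simplified: each write commits immediately as its own transaction, and there is no garbage collection of old versions or write-conflict detection.

```python
import itertools

class MVCCStore:
    """Toy multiversion store: writers append versions, readers use a snapshot."""

    def __init__(self):
        self._versions = {}  # key -> list of (commit_ts, value), oldest first
        self._clock = itertools.count(1)
        self._last_commit = 0

    def begin(self):
        """Start a reading transaction; returns its snapshot timestamp."""
        return self._last_commit

    def write(self, key, value):
        """Commit a new version of key without touching older versions."""
        ts = next(self._clock)
        self._versions.setdefault(key, []).append((ts, value))
        self._last_commit = ts
        return ts

    def read(self, key, snapshot_ts):
        """Return the newest version committed at or before the snapshot."""
        for ts, value in reversed(self._versions.get(key, [])):
            if ts <= snapshot_ts:
                return value
        return None

store = MVCCStore()
store.write("row", "v1")

snapshot_a = store.begin()       # user A starts reading
store.write("row", "v2")         # user B modifies the row; A is not blocked
a_sees = store.read("row", snapshot_a)

snapshot_new = store.begin()     # a transaction that starts later
new_sees = store.read("row", snapshot_new)

print(a_sees, new_sees)
```

No locks are taken on the read path: user A keeps reading the version from its snapshot while user B's newer version sits alongside it.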

Challenges in ACID

ACID is old school. Jim Gray described atomicity, consistency and durability long before I was even born. But that particular paper doesn’t mention anything about isolation. This is understandable if we think of the production systems of the late 70’s, which according to Jim Gray:

“At present, the largest airlines and banks have about 10,000 terminals and about 100 active transactions at any instant”.

So all efforts were spent on delivering correctness rather than concurrency. Things have changed drastically ever since, and nowadays even modest set-ups are able to run 1000 TPS.

From a database perspective, atomicity is a fixed property, but everything else may be traded off for performance/scalability reasons.

If the database system is composed of multiple nodes, then distributed system consistency (the C in the CAP theorem, not the C in ACID) mandates that all changes be propagated to all nodes (multi-master replication). If slave nodes are updated asynchronously, we break the consistency rule and the system becomes “eventually consistent”.
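The effect of asynchronous propagation can be illustrated with a deterministic toy model. The `Primary` and `Replica` classes and the explicit `apply_pending` step are assumptions for the sketch; in a real system the replica would apply shipped changes in the background after some delay.

```python
from collections import deque

class Replica:
    """Receives changes from the primary but applies them later."""

    def __init__(self):
        self.data = {}
        self.pending = deque()

    def apply_pending(self):
        while self.pending:
            key, value = self.pending.popleft()
            self.data[key] = value

class Primary:
    def __init__(self, replicas):
        self.data = {}
        self.replicas = replicas

    def write(self, key, value):
        self.data[key] = value  # acknowledged immediately
        for replica in self.replicas:
            replica.pending.append((key, value))  # shipped, not yet applied

replica = Replica()
primary = Primary([replica])

primary.write("x", 1)
stale_read = replica.data.get("x")   # replica has not caught up yet
replica.apply_pending()
fresh_read = replica.data.get("x")   # replica has converged

print(stale_read, fresh_read)
```

Between the write and `apply_pending`, a read from the replica returns outdated data; once the pending changes are applied the nodes agree again, which is the sense in which the system is eventually consistent.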

References

- https://en.wikipedia.org/wiki/ACID

- Martin Kleppmann, Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems

- A beginner’s guide to ACID and database transactions - https://vladmihalcea.com/a-beginners-guide-to-acid-and-database-transactions/