Out of memory (OOM)

Out of memory (OOM) is an often undesired state of computer operation where no additional memory can be allocated for use by programs or the operating system. Such a system will be unable to load any additional programs, and since many programs may load additional data into memory during execution, these will cease to function correctly. This usually occurs because all available memory, including disk swap space, has been allocated.

OOM Killer

In the Linux 2.4 kernel covered by Mel Gorman's book (see References), out_of_memory() is called when page reclaim cannot free enough memory. The system may simply need to wait for I/O to complete or for pages to be swapped out, so before deciding to kill a process the kernel goes through the following checklist:

- Is there enough swap space left (nr_swap_pages > 0)? If yes, not OOM.
- Has it been more than 5 seconds since the last failure? If yes, not OOM.
- Have we failed within the last second? If no, not OOM.
- Have there been fewer than 10 failures in the last 5 seconds? If yes, not OOM.
- Has a process been killed within the last 5 seconds? If yes, not OOM.

Only if none of these checks rules out OOM is oom_kill() called to select a process to kill.

Selecting a Process

In the 2.4 kernel, the function select_bad_process() is responsible for choosing a process to kill. It steps through each running task and uses the function badness() to calculate how suitable that task is for killing. The badness is calculated as follows. The square roots are integer approximations computed with int_sqrt().

$$
\text{badness\_for\_task} = \frac{\text{total\_vm\_for\_task}}{\sqrt{\text{cpu\_time\_in\_seconds}} \cdot \sqrt{\sqrt{\text{cpu\_time\_in\_minutes}}}}
$$

This heuristic favors processes that use a lot of memory but have not been running for long, because long-running processes are unlikely to be the cause of a sudden memory shortage. If the process belongs to root or has the CAP_SYS_ADMIN capability, its points are divided by 4, on the assumption that privileged processes are well behaved. If it also has the CAP_SYS_RAWIO capability (access to raw devices), the points are divided by 4 again, because killing a process with direct hardware access is undesirable.
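The scoring rules above can be sketched in Go. Everything here is illustrative. `task` and its fields are hypothetical stand-ins for the kernel's task structure, and memory sizes are in arbitrary units. `intSqrt` is a plain floor square root clamped to a minimum of 1 so the divisions are always safe; that clamp is an assumption, and the function is not the kernel's int_sqrt().

```go
package main

import "fmt"

// task is a hypothetical, simplified view of a process.
type task struct {
	pid           int
	totalVM       int  // total virtual memory (arbitrary units here)
	cpuSeconds    int  // CPU time consumed, in seconds
	cpuMinutes    int  // the "minutes" term of the formula
	isRootOrAdmin bool // uid 0 or CAP_SYS_ADMIN
	hasRawIO      bool // CAP_SYS_RAWIO
}

// intSqrt returns floor(sqrt(x)), but never less than 1 (assumption).
func intSqrt(x int) int {
	r := 0
	for (r+1)*(r+1) <= x {
		r++
	}
	if r < 1 {
		return 1
	}
	return r
}

// badness applies the formula and the capability adjustments described above.
func badness(t task) int {
	points := t.totalVM
	points /= intSqrt(t.cpuSeconds)
	points /= intSqrt(intSqrt(t.cpuMinutes))
	if t.isRootOrAdmin {
		points /= 4
	}
	if t.hasRawIO {
		points /= 4
	}
	return points
}

// selectBadProcess returns the pid with the highest badness score.
func selectBadProcess(tasks []task) int {
	best, bestPoints := -1, -1
	for _, t := range tasks {
		if p := badness(t); p > bestPoints {
			best, bestPoints = t.pid, p
		}
	}
	return best
}

func main() {
	tasks := []task{
		{pid: 1, totalVM: 400000, cpuSeconds: 3600, cpuMinutes: 60},
		{pid: 2, totalVM: 300000, cpuSeconds: 4, cpuMinutes: 1},
		{pid: 3, totalVM: 900000, cpuSeconds: 4, cpuMinutes: 1, isRootOrAdmin: true, hasRawIO: true},
	}
	for _, t := range tasks {
		fmt.Printf("pid %d badness %d\n", t.pid, badness(t))
	}
	fmt.Println("victim:", selectBadProcess(tasks))
}
```

With these made-up inputs, the short-lived process (pid 2) is chosen even though pids 1 and 3 use more memory. Pid 1 is protected by its long CPU time, and pid 3 by the two divide-by-4 adjustments.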

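Configuring the OOM Killer

A few kernel parameters control how the system reacts to OOM. One use is to stop the system from endlessly killing processes. The settings below make the kernel panic as soon as an OOM occurs and then reboot automatically 10 seconds after the panic. With vm.panic_on_oom=1, an OOM that is confined to a memory cgroup, cpuset, or memory policy still invokes the OOM killer instead of panicking. A value of 2 makes the kernel panic in those cases too.

```shell
echo "vm.panic_on_oom=1" >> /etc/sysctl.conf
echo "kernel.panic=10" >> /etc/sysctl.conf
sysctl -p
```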
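After running `sysctl -p`, you can read the active values back from /proc/sys, where each dot in a sysctl key becomes a directory separator. The Go sketch below assumes a Linux host. `sysctlPath` is a hypothetical helper, and its simple dot-to-slash mapping does not handle keys whose components themselves contain dots.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// sysctlPath maps a sysctl key such as "vm.panic_on_oom" to its file under
// /proc/sys, e.g. "/proc/sys/vm/panic_on_oom".
func sysctlPath(key string) string {
	return "/proc/sys/" + strings.ReplaceAll(key, ".", "/")
}

func main() {
	for _, key := range []string{"vm.panic_on_oom", "kernel.panic"} {
		data, err := os.ReadFile(sysctlPath(key))
		if err != nil {
			// Not Linux, or /proc is not mounted.
			fmt.Printf("%s: %v\n", key, err)
			continue
		}
		fmt.Printf("%s = %s\n", key, strings.TrimSpace(string(data)))
	}
}
```

`sysctl vm.panic_on_oom kernel.panic` reports the same values from the command line.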

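Disabling/Enabling the OOM Killer (Use with Caution)

```shell
echo "0" > /proc/sys/vm/oom-kill
echo "1" > /proc/sys/vm/oom-kill
```

/proc/sys/vm/oom-kill is not part of mainline Linux and exists only on some vendor kernels. Mainline kernels have no such global switch, but an individual process can be exempted by writing -1000 to /proc/<pid>/oom_score_adj.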
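On mainline kernels, OOM-killer behavior is tuned per process through /proc/<pid>/oom_score_adj, which accepts values from -1000 to 1000. A value of -1000 exempts the process, and higher values make it a more likely victim. The Go sketch below writes that file and assumes a Linux host. `setOOMScoreAdj` and `oomScoreAdjPath` are hypothetical helper names. Lowering the value generally requires CAP_SYS_RESOURCE, so the example raises it instead.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
)

// oomScoreAdjPath returns the per-process tuning file; pid 0 means "self".
func oomScoreAdjPath(pid int) string {
	if pid == 0 {
		return "/proc/self/oom_score_adj"
	}
	return "/proc/" + strconv.Itoa(pid) + "/oom_score_adj"
}

// setOOMScoreAdj writes adj for pid after checking the valid range.
// -1000 exempts the process from the OOM killer; 1000 makes it the
// preferred victim.
func setOOMScoreAdj(pid, adj int) error {
	if adj < -1000 || adj > 1000 {
		return fmt.Errorf("oom_score_adj %d out of range [-1000, 1000]", adj)
	}
	return os.WriteFile(oomScoreAdjPath(pid), []byte(strconv.Itoa(adj)), 0o644)
}

func main() {
	// Raising the value needs no extra privileges.
	if err := setOOMScoreAdj(0, 500); err != nil {
		fmt.Println("error:", err)
		return
	}
	data, _ := os.ReadFile(oomScoreAdjPath(0))
	fmt.Printf("oom_score_adj is now %s", data)
}
```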

How to Know

The kernel maintains an oom_score for each process. You can read it from the /proc filesystem under the process's PID directory:

```shell
$ cat /proc/10292/oom_score
```

The higher a process's oom_score, the more likely the OOM killer is to choose it when the system runs out of memory.
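To see which processes are currently the most likely victims, you can collect the scores from /proc and sort them. The Go sketch below assumes a Linux /proc layout. The `proc` type and the helpers `parseScore` and `topByScore` are hypothetical names.

```go
package main

import (
	"fmt"
	"os"
	"sort"
	"strconv"
	"strings"
)

// proc holds one process's OOM score as read from /proc.
type proc struct {
	pid   int
	name  string
	score int
}

// parseScore converts the contents of /proc/<pid>/oom_score to an int.
func parseScore(raw string) (int, error) {
	return strconv.Atoi(strings.TrimSpace(raw))
}

// topByScore sorts processes by descending oom_score and returns at most n.
func topByScore(procs []proc, n int) []proc {
	sort.Slice(procs, func(i, j int) bool { return procs[i].score > procs[j].score })
	if len(procs) > n {
		procs = procs[:n]
	}
	return procs
}

func main() {
	entries, err := os.ReadDir("/proc")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	var procs []proc
	for _, e := range entries {
		pid, err := strconv.Atoi(e.Name())
		if err != nil {
			continue // not a process directory
		}
		raw, err := os.ReadFile("/proc/" + e.Name() + "/oom_score")
		if err != nil {
			continue // the process may have exited in the meantime
		}
		score, err := parseScore(string(raw))
		if err != nil {
			continue
		}
		comm, _ := os.ReadFile("/proc/" + e.Name() + "/comm")
		procs = append(procs, proc{pid, strings.TrimSpace(string(comm)), score})
	}
	for _, p := range topByScore(procs, 5) {
		fmt.Printf("%6d %-20s %d\n", p.pid, p.name, p.score)
	}
}
```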

To check whether the OOM killer has already killed a process, search the kernel ring buffer:

```shell
$ dmesg -T | grep -i "killed process"
```

You can also search the distribution-specific log files:

```shell
# CentOS
$ grep -i "out of memory" /var/log/messages

# Debian / Ubuntu
$ grep -i "out of memory" /var/log/kern.log
```

The output looks something like this:

```text
host kernel: Out of Memory: Killed process 2592 (mysql).
```

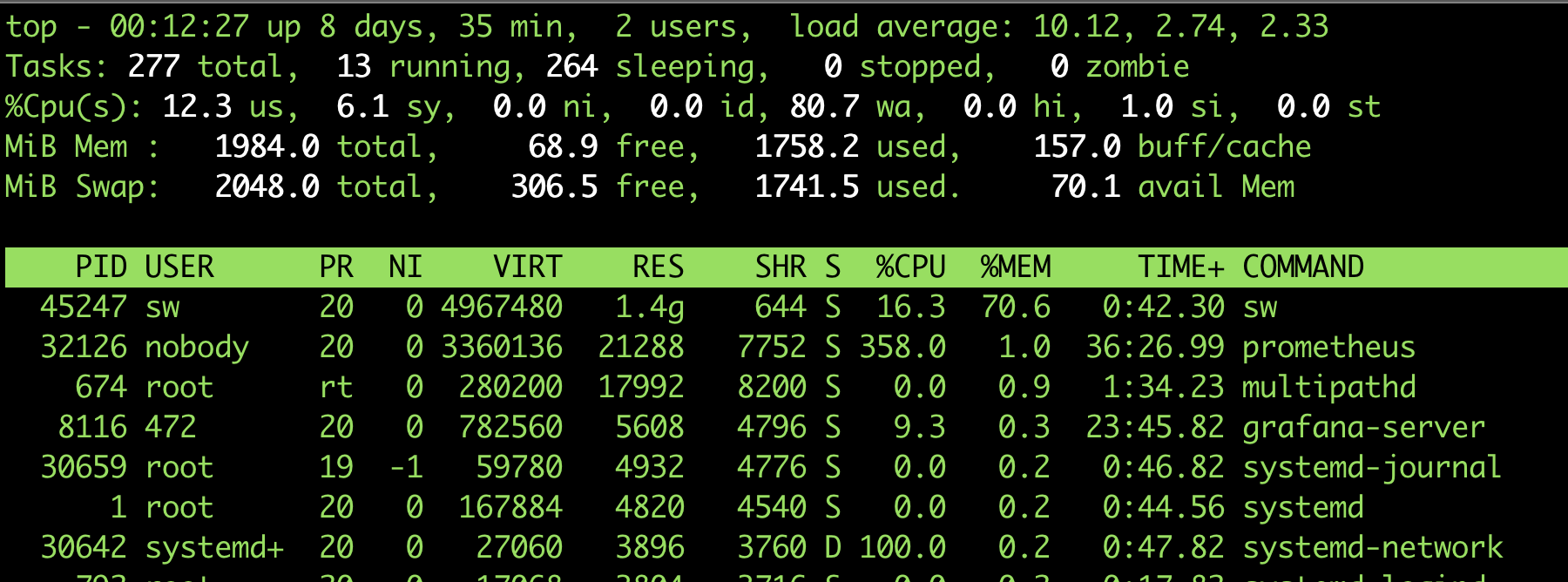

Demo

Let's simulate an OOM situation.

```shell
$ docker run -d -it --rm --name my-running-app golang
$ docker exec -it my-running-app bash
root@90bdf5d0a510:/go# apt update
root@90bdf5d0a510:/go# apt install vim
root@90bdf5d0a510:/go# ls
root@90bdf5d0a510:/go# cd src/
root@90bdf5d0a510:/go/src# vim sw.go
# Start the program (after saving the code below as sw.go)
root@90bdf5d0a510:/go/src# go run sw.go
```

Paste the following into sw.go:

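```go
package main

import (
	"log"
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof handlers
)

const (
	KB = 1024
	MB = 1024 * KB
	GB = 1024 * MB
)

// StupidStruct keeps a reference to every 1 MB chunk, so none of them
// can be garbage collected.
type StupidStruct struct {
	buffer [][MB]byte
}

var m = StupidStruct{}

func main() {
	// Allocate memory in the background.
	go func() {
		stupidMemoryUse()
	}()

	// Serve pprof on port 6060 so the heap can be inspected while it grows.
	log.Fatal(http.ListenAndServe("0.0.0.0:6060", nil))
}

// stupidMemoryUse appends 1 MB arrays until 20 GB are held and never
// releases them.
func stupidMemoryUse() {
	limit := 20 * GB
	for len(m.buffer)*MB < limit {
		m.buffer = append(m.buffer, [MB]byte{})
	}
}
```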

This program keeps appending 1 MB arrays to a slice on the heap until it holds 20 GB, and it never frees them.

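The OOM killer eventually terminates the process. The kill is recorded both in the kernel ring buffer and in kern.log:

```shell
$ dmesg -T | grep -i "killed process"
[Tue Jul 13 00:32:19 2021] Out of memory: Killed process 45626 (sw) total-vm:4893492kB, anon-rss:1501628kB, file-rss:1384kB, shmem-rss:0kB, UID:1000 pgtables:6764kB oom_score_adj:0

$ grep -i "out of memory" /var/log/kern.log
Jul 12 16:24:01 ubuntusw kernel: [694496.801345] Out of memory: Killed process 45626 (sw) total-vm:4893492kB, anon-rss:1501628kB, file-rss:1384kB, shmem-rss:0kB, UID:1000 pgtables:6764kB oom_score_adj:0
```

The process was killed with about 1.5 GB resident (anon-rss), far short of the 20 GB it was trying to allocate.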
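When many kill events need to be reviewed, parsing the log lines is faster than reading them by hand. The Go sketch below extracts the PID, the process name, and the memory fields that end in kB from lines in the two formats shown in this article. `parseOOMLine` is a hypothetical helper. The regular expressions only match the "Killed process" wording, which may differ in other kernel versions.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// oomKill is the information extracted from one kernel log line.
type oomKill struct {
	PID    int
	Name   string
	SizeKB map[string]int // e.g. "total-vm", "anon-rss", if present
}

var (
	killedRe = regexp.MustCompile(`(?i)killed process (\d+) \(([^)]*)\)`)
	sizeRe   = regexp.MustCompile(`([a-z-]+):(\d+)kB`)
)

// parseOOMLine returns the kill described by line, or ok=false if the line
// is not an OOM-killer message.
func parseOOMLine(line string) (k oomKill, ok bool) {
	m := killedRe.FindStringSubmatch(line)
	if m == nil {
		return k, false
	}
	k.PID, _ = strconv.Atoi(m[1])
	k.Name = m[2]
	k.SizeKB = map[string]int{}
	for _, f := range sizeRe.FindAllStringSubmatch(line, -1) {
		v, _ := strconv.Atoi(f[2])
		k.SizeKB[f[1]] = v
	}
	return k, true
}

func main() {
	lines := []string{
		"[Tue Jul 13 00:32:19 2021] Out of memory: Killed process 45626 (sw) total-vm:4893492kB, anon-rss:1501628kB, file-rss:1384kB, shmem-rss:0kB, UID:1000 pgtables:6764kB oom_score_adj:0",
		"host kernel: Out of Memory: Killed process 2592 (mysql).",
	}
	for _, l := range lines {
		if k, ok := parseOOMLine(l); ok {
			fmt.Printf("pid=%d name=%s anon-rss=%d kB\n", k.PID, k.Name, k.SizeKB["anon-rss"])
		}
	}
}
```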

Meanwhile, monitor memory and swap activity with vmstat (memory columns in MB, one sample per second):

```text
$ vmstat -S M -w 1
procs --------memory-------- ---swap-- ------io----- --system--- ------cpu------
 r  b  swpd  free buff cache   si   so     bi     bo    in    cs us sy  id wa st
 1  0   190  1338    7   217    0    0      1      3     0     1  0  0 100  0  0
 0  0   190  1336    7   217    0    0      8      0   369   440  0  0 100  0  0
 0  0   190  1336    7   217    0    0      8      0   356   442  0  0 100  0  0
 0  0   190  1336    7   217    0    0    148      0   551   734  0  0 100  0  0
12  3   190  1322    8   225    0    0   9380     76  2279  2724  0 10  82  8  0
11 10   190  1318    9   227    0    0   1748     48  1203  1121  0 19  53 27  0
13  9   190  1318    9   228    0    0    512      0   383   343  1  9  25 66  0
12 11   190  1317    9   229    0    0    488      0   731   780  1 10  50 38  0
17  6   190  1313    9   232    0    0    268      0   563   339  0 20  60 19  0
15  8   190  1313    9   233    0    0    200      0   256   667  0  5  28 66  0
11 13   189  1312    9   233    0    0    192     12   259   314  0  6  55 39  0
12  0   189  1283    9   253    0    0  21588      0  5602  7518  3  7  71 19  0
 2  0   189   632    9   310    0    0  52236      0 15673  6057  4  5  87  3  0
 3  0   524    63    0   132    0  337  21412 345880 58341  2194  4 11  85  0  0
 2  0   655    83    0   132    0  130  26488 133932 56431  1876  4 10  86  0  0
 2  0   796    76    0   134    0  141  16308 144904 55946  1977  4 10  86  0  0
 2  0   974    86    0   126   61  177  71972 181896 73343  6604  5 13  82  1  0
 5  2  1077    63    0   125   54  150  60920 154468 61452  5877  5 11  80  5  0
 2  2  1361    53    0   133   16  335  44140 344000 55196  9078  2 16  82  1  0
 1  0  1591    83    0   128   96  341 121924 349392 33096 13593  0 11  86  2  0
 2  1  1959    76    0   116  122  273 150156 280200 27315 17005  1  9  88  2  0
 2  1  2047    57    0   114  152  232 185656 238100 28421 18406  0  9  88  3  0
14  9   280  1559    0   115   13   20  29645  21092  4879  3406  0  5  69 27  0
11 11   280  1549    0   126    0    0  11756     28   934  1093  0  7  36 56  0
12 24   280  1538    0   134    1    0  10290      0   933  1149  0  4   8 88  0
```

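From this output we can see that:

- Free memory falls from about 1.3 GB to under 100 MB, while used swap (swpd) climbs from 190 MB to 2047 MB. Once the OOM killer terminates the process, free memory jumps back to about 1.5 GB and swpd drops to 280 MB.

- bi and bo (blocks read from and written to block devices per second) stay large throughout the swapping phase. They count all block I/O, including swap traffic, and the bo peaks (above 340000) coincide with the so peaks.

- wa (percentage of CPU time spent idle while waiting for I/O) is high in many samples (up to 88%), and b (processes blocked in uninterruptible sleep) is often above 5. Meanwhile us+sy stays low, so the CPUs spend most of their time waiting on I/O rather than doing useful work.

- si and so (memory swapped in from and out to disk per second) stay non-zero for many consecutive samples. The system is actively swapping because it does not have enough RAM for its working set.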

Reference

- https://en.wikipedia.org/wiki/Out_of_memory

- https://www.kernel.org/doc/gorman/html/understand/understand016.html

- https://docs.memset.com/other/linux-s-oom-process-killer

- https://www.oracle.com/technical-resources/articles/it-infrastructure/dev-oom-killer.html

- https://unix.stackexchange.com/questions/153585/how-does-the-oom-killer-decide-which-process-to-kill-first