System Level - FD Limits

file-max is the kernel-enforced, system-wide limit on file descriptors (FDs): the total number of open file handles across all processes cannot exceed it.

Use either of the following commands to display the system-wide maximum number of open file descriptors:

$ cat /proc/sys/fs/file-max

9223372036854775807

# or

$ sysctl fs.file-max

fs.file-max = 9223372036854775807

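This value is the system-wide ceiling for all processes combined, not a per-user or per-session limit. 9223372036854775807 is the largest signed 64-bit integer, so on this system the kernel limit is effectively unlimited.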

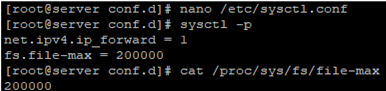
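To see how close the system is to this ceiling, the kernel also exposes /proc/sys/fs/file-nr, whose three fields are the number of allocated file handles, the number of allocated but unused handles, and the maximum (the same value as file-max). A minimal sketch; the function name fd_usage is only illustrative:

```shell
# fd_usage: print system-wide allocated file handles next to the file-max ceiling.
# /proc/sys/fs/file-nr holds three fields: allocated, unused, max.
fd_usage() {
    read -r allocated unused max < /proc/sys/fs/file-nr
    echo "allocated=${allocated} max=${max}"
}

fd_usage
```

If the allocated count approaches max, "Too many open files in system" errors are likely and raising fs.file-max is worth considering.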

To change the system-wide value exposed in /proc/sys/fs/file-max, set fs.file-max in /etc/sysctl.conf:

fs.file-max = 100000

And apply it as root:

# sysctl -p
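To confirm that the setting was actually applied, you can compare the value configured in the file with the value the kernel is using. The following is a rough sketch, not a complete sysctl parser: the helper names configured_file_max and check_file_max are hypothetical, and it only looks at a single file (it ignores /etc/sysctl.d and other locations).

```shell
# configured_file_max FILE: print the last fs.file-max value set in FILE, if any.
configured_file_max() {
    awk -F= '/^[[:space:]]*fs\.file-max[[:space:]]*=/ {
        gsub(/[[:space:]]/, "", $2); value = $2
    } END { if (value != "") print value }' "$1" 2>/dev/null
}

# check_file_max [FILE]: compare the configured value with the running kernel value.
check_file_max() {
    want=$(configured_file_max "${1:-/etc/sysctl.conf}")
    have=$(cat /proc/sys/fs/file-max)
    if [ -z "$want" ]; then
        echo "not configured in file (running: $have)"
    elif [ "$want" = "$have" ]; then
        echo "applied: $have"
    else
        echo "pending: configured $want, running $have"
    fi
}

check_file_max
```

A "pending" result suggests that sysctl -p has not been run yet, or that another configuration file overrides the value.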

User Level - FD Limits

You can restrict the httpd user (or any other user) to specific limits by editing the /etc/security/limits.conf file:

# vi /etc/security/limits.conf

Set httpd user soft and hard limits as follows:

httpd soft nofile 4096

httpd hard nofile 10240

Current Session/Shell - Change the Open File Limit

Raising the system-level FD limit (in /etc/sysctl.conf) changes the kernel's maximum, but your shell keeps its own, usually much lower, limit. Since most processes that open this many files are started from a shell and inherit its limits, you will want to raise the shell's limit too.

To change maximum open file limits for your terminal session, run this command:

$ ulimit -n 3000

After you close the terminal and start a new session, the limits revert to the values specified in /etc/security/limits.conf.

After saving and closing /etc/security/limits.conf, switch to the httpd user to see its limits:
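Because ulimit changes only affect the current shell and its children, one way to raise the limit for a single command without touching the rest of the session is to do it in a subshell. A small sketch, assuming a POSIX-style shell; the wrapper name with_max_nofile is made up for illustration:

```shell
# with_max_nofile CMD [ARGS...]: run CMD with the soft open-file limit
# raised to the current hard limit. The change is made inside a subshell,
# so the calling shell keeps its original limit.
with_max_nofile() {
    (
        ulimit -Sn "$(ulimit -Hn)" || exit 1
        "$@"
    )
}

# The child process sees the raised soft limit...
with_max_nofile sh -c 'ulimit -n'
# ...while the calling shell still reports its own value.
ulimit -Sn
```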

# su - httpd

$ ulimit -Hn

$ ulimit -Sn

There are both “hard” and “soft” ulimits, and both affect the performance of services such as MongoDB. The “hard” ulimit is the ceiling for a resource, for example the maximum number of open files or processes that a user can have at any time: no non-root process can increase the “hard” ulimit. In contrast, the “soft” ulimit is the limit that is actually enforced for a session or process, and any process can raise it up to the “hard” ulimit.

# Get hard limit for the number of simultaneously opened files:

$ ulimit -H -n

1048576

# Get soft limit for the number of simultaneously opened files:

$ ulimit -Sn

1024
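The relationship between the two limits can be tried out safely in a throwaway subshell. The sketch below assumes the starting hard limit is at least 512; the function name demo_soft_vs_hard and the numbers 256, 512 and 1024 are arbitrary choices for the demonstration.

```shell
# demo_soft_vs_hard: show that the soft limit can be raised up to the hard
# limit but not past it. Everything runs in a subshell, so the limits of the
# calling shell are left untouched.
demo_soft_vs_hard() {
    (
        ulimit -Sn 256     # start from a low soft limit
        ulimit -Hn 512     # lower the hard limit (the ceiling)
        ulimit -Sn 512 && echo "raise to 512: ok"
        ulimit -Sn 1024 2>/dev/null || echo "raise to 1024: refused"
        echo "soft=$(ulimit -Sn) hard=$(ulimit -Hn)"
    )
}

demo_soft_vs_hard
```

The second raise is refused because a soft limit may never exceed the hard limit, so the subshell ends with both values at 512.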

ulimit -a reports all current limits; filter the output for the file-related ones:

$ ulimit -a | grep file

-f: file size (blocks) unlimited

-c: core file size (blocks) 0

-n: file descriptors 1024

-x: file locks unlimited

# Set max per-user process limit:

$ ulimit -u 30

$ ulimit -u unlimited

In this example, switch to the oracle user with su and set its limits:

# su - oracle

# set hard limit

$ ulimit -Hn <new_value>

# set soft limit

$ ulimit -Sn <new_value>

Process/Service Level

# view a process's limit

$ cat /proc/828/limits

Limit Soft Limit Hard Limit Units

Max cpu time unlimited unlimited seconds

Max file size unlimited unlimited bytes

Max data size unlimited unlimited bytes

Max stack size 8388608 unlimited bytes

Max core file size 0 unlimited bytes

Max resident set unlimited unlimited bytes

Max processes 15384 15384 processes

Max open files 65535 65535 files

Max locked memory 65536 65536 bytes

Max address space unlimited unlimited bytes

Max file locks unlimited unlimited locks

Max pending signals 15384 15384 signals

Max msgqueue size 819200 819200 bytes

Max nice priority 0 0

Max realtime priority 0 0

Max realtime timeout unlimited unlimited us

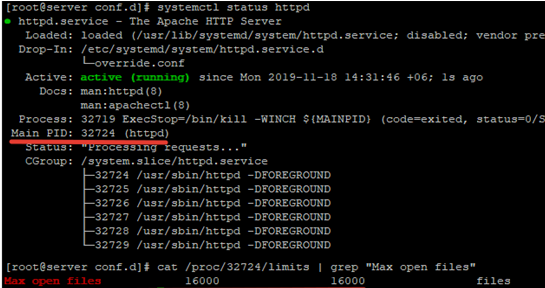
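The limit alone does not say how close a process is to hitting it. A minimal sketch that puts the number of descriptors a process currently has open (the entries under /proc/PID/fd) next to its soft limit; fd_report is a hypothetical helper name, and reading another user's process generally requires root:

```shell
# fd_report PID: print how many descriptors PID has open and its soft limit.
fd_report() {
    pid=$1
    # Each entry in /proc/PID/fd is one open file descriptor.
    open=$(( $(ls "/proc/${pid}/fd" | wc -l) ))
    # In /proc/PID/limits the "Max open files" row has the soft limit in
    # field 4 and the hard limit in field 5.
    soft=$(awk '/^Max open files/ { print $4 }' "/proc/${pid}/limits")
    echo "pid=${pid} open=${open} soft_limit=${soft}"
}

# Report on the current shell.
fd_report "$$"
```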

Increasing the Open File Descriptor Limit per Service

You can change the limit of open file descriptors for a specific service, rather than for the entire operating system. Let’s take Apache as an example. Open the service settings using systemctl:

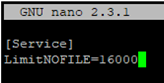

# systemctl edit httpd.service

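Add the limits you want. A single value sets both the soft and the hard limit; to set them separately, use the soft:hard form (for example, LimitNOFILE=8192:16000):

[Service]

LimitNOFILE=16000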

After making the changes, update the service configuration and restart it:

# systemctl daemon-reload

# systemctl restart httpd.service

To verify that the values have changed, get the service PID:

# systemctl status httpd.service

For example, the service PID is 3724:

# cat /proc/3724/limits | grep "Max open files"

Thus, you have changed the values for the maximum number of open files for a specific service.

How to Set Max Open Files Limit for Nginx & Apache?

When changing the limit on the number of open files for a web server, you also have to change the service configuration file. For example, specify/change the following directive value in the Nginx configuration file /etc/nginx/nginx.conf:

worker_rlimit_nofile 16000;

When configuring Nginx on a highly loaded 8-core server with worker_connections 8192, set worker_rlimit_nofile to 8192 × 2 × 8 (vCPUs) = 131072.
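The sizing rule above (worker_connections × 2 × number of cores) is easy to script. In this sketch the function name nginx_nofile is illustrative, and the factor of 2 reflects the common assumption that a proxied connection can use two descriptors, one for the client and one for the upstream:

```shell
# nginx_nofile WORKER_CONNECTIONS CORES: suggested worker_rlimit_nofile value,
# computed as worker_connections * 2 * cores.
nginx_nofile() {
    echo $(( $1 * 2 * $2 ))
}

nginx_nofile 8192 8    # prints 131072
```

On the target server, the core count could be taken from nproc instead of being typed in by hand, for example nginx_nofile 8192 "$(nproc)".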

Then restart Nginx.

For Apache, create a drop-in directory for the service:

# mkdir /lib/systemd/system/httpd.service.d/

Then create the limit_nofile.conf file:

# nano /lib/systemd/system/httpd.service.d/limit_nofile.conf

Add to it:

[Service]

LimitNOFILE=16000

Don’t forget to run systemctl daemon-reload and restart httpd.

Debug

Another option is to find the culprit by counting open files per command name:

sudo lsof -n | cut -f1 -d' ' | sort | uniq -c | sort -n | tail

For the last (largest) entry, socketfil in this example, you can see which files are open:

sudo lsof -n | grep socketfil

And kill the process if so desired

kill $pid
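If lsof is not installed, a similar ranking can be approximated from /proc alone. This is only a sketch: top_fd_users is a made-up name, and without root it can only count descriptors for processes owned by the current user.

```shell
# top_fd_users [N]: list the N processes (default 10) with the most open
# file descriptors as "count pid command", largest last.
top_fd_users() {
    for dir in /proc/[0-9]*; do
        # Unreadable or already exited processes simply count as 0.
        count=$(( $(ls "$dir/fd" 2>/dev/null | wc -l) ))
        if [ "$count" -gt 0 ]; then
            echo "$count ${dir#/proc/} $(cat "$dir/comm" 2>/dev/null)"
        fi
    done | sort -n | tail -n "${1:-10}"
}

top_fd_users 5
```

The PID in the second column of the last line is the most likely candidate to inspect further or to pass to kill.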

Reference

- https://ss64.com/bash/ulimit.html

- https://superuser.com/questions/433746/is-there-a-fix-for-the-too-many-open-files-in-system-error-on-os-x-10-7-1

- https://docs.oracle.com/cd/E19683-01/816-0210/6m6nb7mo3/index.html

- https://docs.mongodb.com/manual/reference/ulimit/

- https://www.cyberciti.biz/faq/linux-increase-the-maximum-number-of-open-files/

- http://woshub.com/too-many-open-files-error-linux/

- https://community.pivotal.io/s/article/Session-failures-with-Too-many-open-files?language=en_US