SETNX key value

Set key to hold string value if key does not exist. In that case, it is equal to SET. When key already holds a value, no operation is performed. SETNX is short for “SET if Not eXists”.

Return value

Integer reply, specifically:

1if the key was set0if the key was not set

Examples

redis> SETNX mykey "Hello"

(integer) 1

redis> SETNX mykey "World"

(integer) 0

redis> GET mykey

"Hello"

redis>

Redis的分布式锁实现

[错误的做法] - 利用 SETNX + EXPIRE 命令

Redis的SETNX命令,setnx key value,将key设置为value,当键不存在时,才能成功,若键存在,什么也不做,成功返回1,失败返回0 。 SETNX实际上就是SET IF NOT Exists的缩写

因为分布式锁还需要超时机制,所以我们利用expire命令来设置,所以利用setnx+expire命令的核心代码如下:

public boolean tryLock(String key,String requset,int timeout) {

Long result = jedis.setnx(key, requset);

// result = 1时,设置成功,否则设置失败

if (result == 1L) {

return jedis.expire(key, timeout) == 1L;

} else {

return false;

}

}

实际上上面的步骤是有问题的,setnx和expire是分开的两步操作,不具有原子性,如果执行完第一条指令后应用异常或者重启了,或其他任何导致EXPIRE 命令没有被执行情况(还比如,设置锁超时时,出现了网络问题),锁将没有超时时间,最终出现死锁。

一种改善方案就是使用Lua脚本来保证原子性(包含setnx和expire两条指令)。

[正确但麻烦的做法] - 使用 Lua 脚本(包含 SETNX 和 EXPIRE 两条指令)

public boolean tryLock_with_lua(String key, String UniqueId, int seconds) {

String lua_scripts = "if redis.call('setnx',KEYS[1],ARGV[1]) == 1 then" +

"redis.call('expire',KEYS[1],ARGV[2]) return 1 else return 0 end";

List<String> keys = new ArrayList<>();

List<String> values = new ArrayList<>();

keys.add(key);

values.add(UniqueId);

values.add(String.valueOf(seconds));

Object result = jedis.eval(lua_scripts, keys, values);

//判断是否成功

return result.equals(1L);

}

[变通的做法] - SETNX + timstamp

SETNX lock.foo <current Unix time + lock timeout + 1>

If SETNX returns 1 the client acquired the lock, setting the lock.foo key to the Unix time at which the lock should no longer be considered valid. The client will later use DEL lock.foo in order to release the lock.

If SETNX returns 0 the key is already locked by some other client. We can either return to the caller if it’s a non blocking lock, or enter a loop retrying to hold the lock until we succeed or some kind of timeout expires.

Handling deadlocks

In the above locking algorithm there is a problem: what happens if a client fails, crashes, or is otherwise not able to release the lock? It’s possible to detect this condition because the lock key contains a UNIX timestamp. If such a timestamp is equal to the current Unix time the lock is no longer valid.

When this happens we can’t just call DEL against the key to remove the lock and then try to issue a SETNX, as there is a race condition here, when multiple clients detected an expired lock and are trying to release it.

- C1 and C2 read

lock.footo check the timestamp, because they both received0after executing SETNX, as the lock is still held by C3 that crashed after holding the lock. - C1 sends

DEL lock.foo - C1 sends

SETNX lock.fooand it succeeds - C2 sends

DEL lock.foo - C2 sends

SETNX lock.fooand it succeeds - ERROR: both C1 and C2 acquired the lock because of the race condition.

Fortunately, it’s possible to avoid this issue using the following algorithm. Let’s see how C4, our sane client, uses the good algorithm:

- C4 sends

SETNX lock.fooin order to acquire the lock - The crashed client C3 still holds it, so Redis will reply with

0to C4. - C4 sends

GET lock.footo check if the lock expired. If it is not, it will sleep for some time and retry from the start. - Instead, if the lock is expired because the Unix time at

lock.foois older than the current Unix time, C4 tries to perform:

GETSET lock.foo <current Unix timestamp + lock timeout + 1>

- Because of the GETSET semantic, C4 can check if the old value stored at

keyis still an expired timestamp. If it is, the lock was acquired by C4. - If another client, for instance C5, was faster than C4 and acquired the lock with the GETSET operation, the C4 GETSET operation will return a non expired timestamp. C4 will simply restart from the first step. Note that even if C4 set the key a bit a few seconds in the future this is not a problem.

In order to make this locking algorithm more robust, a client holding a lock should always check the timeout didn’t expire before unlocking the key with DEL because client failures can be complex, not just crashing but also blocking a lot of time against some operations and trying to issue DEL after a lot of time (when the LOCK is already held by another client).

[正确做法,但仍有potential issue] - 使用 set key value [EX seconds][PX milliseconds] [NX|XX] 命令

Redis在 2.6.12 版本开始,为 SET 命令增加一系列选项:

SET key value[EX seconds][PX milliseconds][NX|XX]

- EX seconds: 设定过期时间,单位为秒

- PX milliseconds: 设定过期时间,单位为毫秒

- NX: 仅当key不存在时设置值

- XX: 仅当key存在时设置值

set命令的nx选项,就等同于setnx命令,代码过程如下:

public boolean tryLock_with_set(String key, String UniqueId, int seconds) {

return "OK".equals(jedis.set(key, UniqueId, "NX", "EX", seconds));

}

value必须要具有唯一性,我们可以用UUID来做,设置随机字符串保证唯一性,至于为什么要保证唯一性?假如value不是随机字符串,而是一个固定值,那么就可能存在下面的问题:

- 客户端1获取锁成功

- 客户端1在某个操作上阻塞了太长时间

- 设置的key过期了,锁自动释放了

- 客户端2获取到了对应同一个资源的锁

- 客户端1从阻塞中恢复过来,因为value值一样,所以执行释放锁操作时就会释放掉客户端2持有的锁,这样就会造成问题

所以,在释放锁时,我们需要对value进行验证。

Potential Issue

When Master Node Goes Wrong

使用 set key value [EX seconds][PX milliseconds][NX|XX] 命令看上去很OK,实际上,如果在Redis集群(同时存在master node 和 slave node)中使用,仍然可能会出现问题。

比如说A客户端在Redis的master节点上拿到了锁,但是这个加锁的key还没有同步到slave节点。这时,发生了master故障,因而发生故障转移,一个slave节点升级为master节点,B客户端也可以获取同个key的锁,但客户端A也已经拿到锁了,这就导致多个客户端都拿到锁。

正因为如此,Redis作者antirez基于分布式环境下提出了一种更高级的分布式锁的实现方式:Redlock。

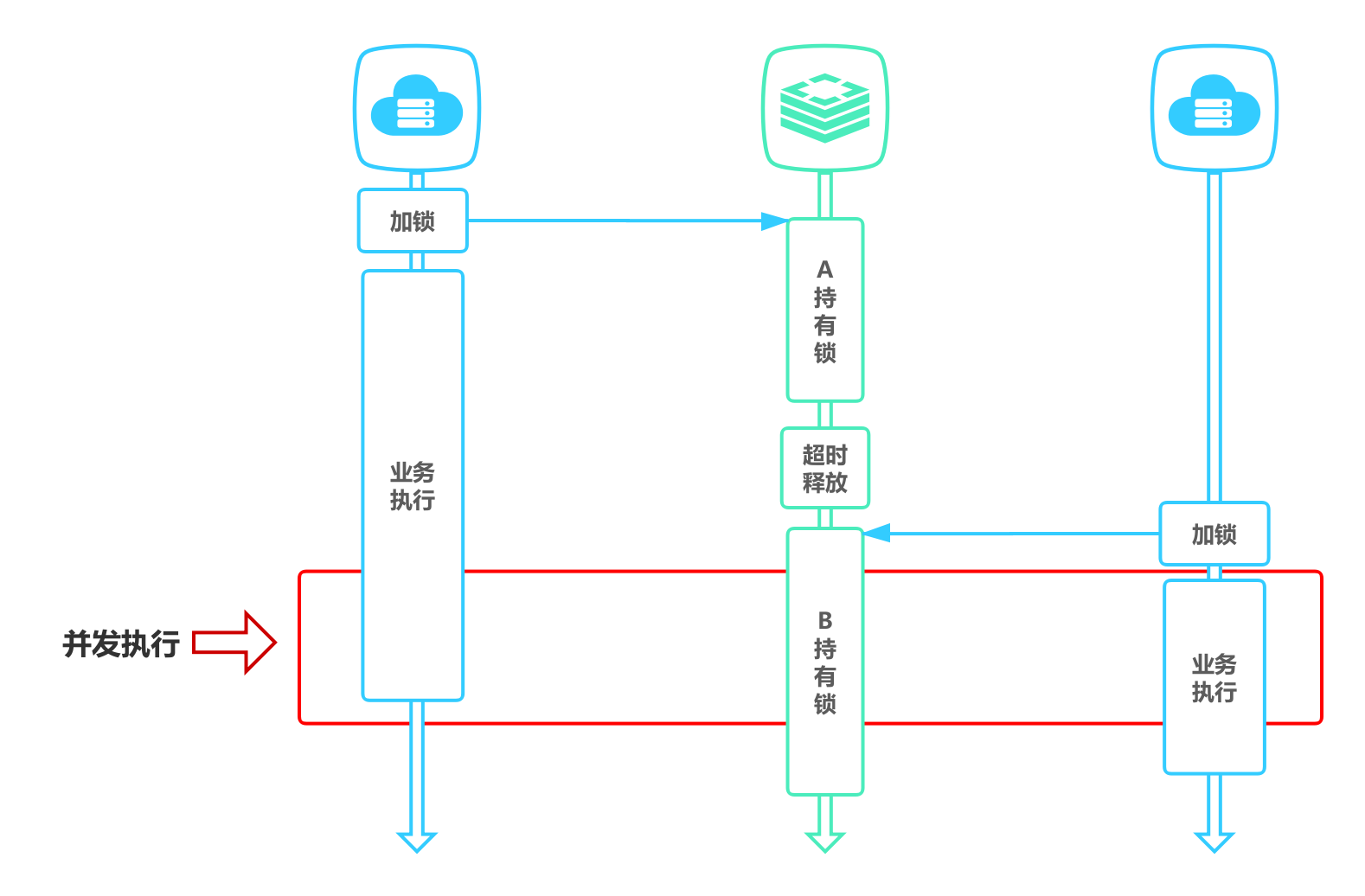

When Timeout Hit

带有超时特性的锁满足了避免死锁的性质,但是这种auto release的机制的却很有可能破坏锁的互斥性质。

举个例子,比如当进程A获得了锁,并设置锁的超期时间为10s,进程A由于处理任务花费的时间较长,10s后任务还没处理完,但是此时锁已经过期被释放了,进程B重新获得了锁(不要忘了,锁实际上是对资源独占的一种申明)。这个时候由于进程A没有主动释放锁,进程B又获得了锁,对A、B来讲,他们都认为自己独占了资源,当他们按照独占资源的想法去操作资源的时候就可能会导致冲突。

Solution

A、B 两个线程发生并发显然是不被允许的,一般有两种方式解决该问题:

- 将过期时间设置足够长(从实践来说,如果算“足够长”非常tricky),确保代码逻辑在锁释放之前能够执行完成。

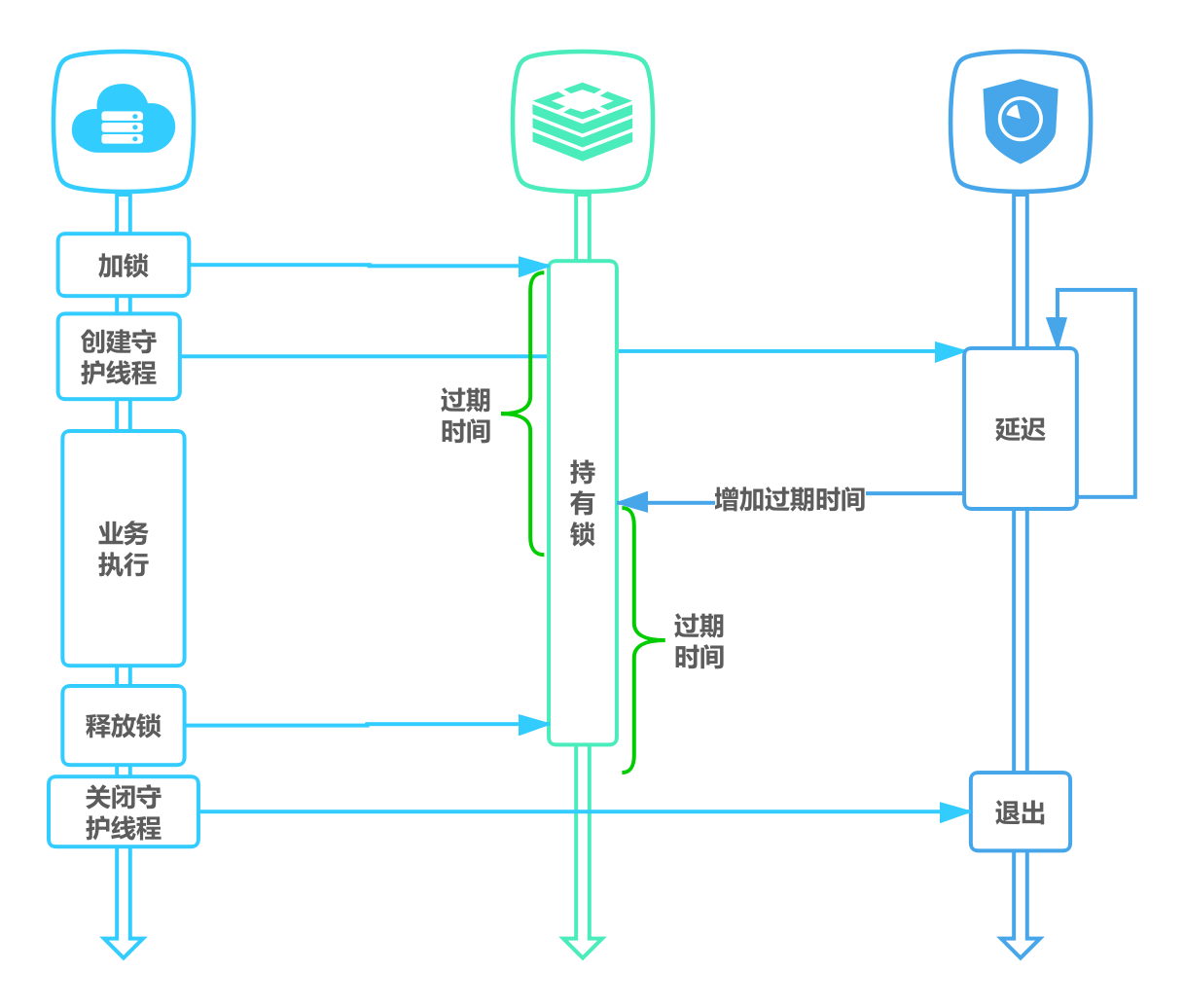

- 为获取锁的线程增加守护线程,为将要过期但未释放的锁增加有效时间。

释放锁的实现

释放锁时需要验证value值,也就是说我们在获取锁的时候需要设置一个value(而且这个value要具有唯一性),不能直接用del key这种粗暴的方式。因为如果这样,任何客户端都可以直接del key来进行解锁(而解锁操作本应当是由 locker owner 来进行的)。

所以解锁时,我们需要判断锁是否是自己的,基于value值来判断,代码如下:

public boolean releaseLock_with_lua(String key,String value) {

String luaScript = "if redis.call('get',KEYS[1]) == ARGV[1] then " +

"return redis.call('del',KEYS[1]) else return 0 end";

return jedis.eval(luaScript, Collections.singletonList(key), Collections.singletonList(value)).equals(1L);

}

这里使用Lua脚本的方式,以保证原子性。

Redlock 算法

Redlock is an algorithm implementing distributed locks with Redis

We are going to model our design with just three properties that, from our point of view, are the minimum guarantees needed to use distributed locks in an effective way.

- Safety property: Mutual exclusion. At any given moment, only one client can hold a lock.

- Liveness property A: Deadlock free. Eventually it is always possible to acquire a lock, even if the client that locked a resource crashes or gets partitioned.

- Liveness property B: Fault tolerance. As long as the majority of Redis nodes are up, clients are able to acquire and release locks.

Why Redlock

To understand what we want to improve, let’s analyze the current state of affairs with most Redis-based distributed lock libraries.

The simplest way to use Redis to lock a resource is to create a key in an instance. The key is usually created with a limited time to live, using the Redis expires feature, so that eventually it will get released (property 2 in our list). When the client needs to release the resource, it deletes the key.

Superficially this works well, but there is a problem: this is a single point of failure in our architecture. What happens if the Redis master goes down? Well, let’s add a slave! And use it if the master is unavailable. This is unfortunately not viable. By doing so we can’t implement our safety property of mutual exclusion, because Redis replication is asynchronous.

There is an obvious race condition with this model:

- Client A acquires the lock in the master.

- The master crashes before the write to the key is transmitted to the slave.

- The slave gets promoted to master.

- Client B acquires the lock to the same resource A already holds a lock for. SAFETY VIOLATION!

Sometimes it is perfectly fine that under special circumstances, like during a failure, multiple clients can hold the lock at the same time. If this is the case, you can use your replication based solution. Otherwise we suggest to implement the solution described in this document.

Ref

Arguement of Redlock - https://martin.kleppmann.com/2016/02/08/how-to-do-distributed-locking.html

Arguement of Redlock

https://martin.kleppmann.com/2016/02/08/how-to-do-distributed-locking.html

Redisson 实现

Reference

- https://redis.io/commands/set

- https://redis.io/commands/setnx

- https://redis.io/topics/distlock

- https://redis.io/docs/manual/patterns/distributed-locks/

- https://juejin.im/post/6844903830442737671

- https://xiaomi-info.github.io/2019/12/17/redis-distributed-lock/

- https://zhuanlan.zhihu.com/p/100140241