Background

If transactions are executed serially, i.e., sequentially with no overlap in time, no transaction concurrency exists. However, if concurrent transactions with interleaving operations are allowed in an uncontrolled manner, some unexpected, undesirable results may occur, such as:

- Lost update problem

- The dirty read (temporary update) problem

- Dirty write

- Non-repeatable read / Read Skew

- Phantom reads

- Write Skew

All but the SERIALIZABLE level are subject to data anomalies (phenomena) that might occur according to the following pattern:

| Isolation Level | Lost update | Dirty read | Non-repeatable read | Phantom read |
|---|---|---|---|---|
| READ_UNCOMMITTED | allowed | allowed | allowed | allowed |
| READ_COMMITTED | allowed | prevented | allowed | allowed |
| REPEATABLE_READ | prevented | prevented | prevented | allowed |
| SERIALIZABLE | prevented | prevented | prevented | prevented |

The levels above are listed from least strict to most strict (that is, from more inconsistency to less).

Lost Update

A lost update occurs when two transactions update the same column of the same row at the same time, and one of the writes silently overwrites the other.

Example 1

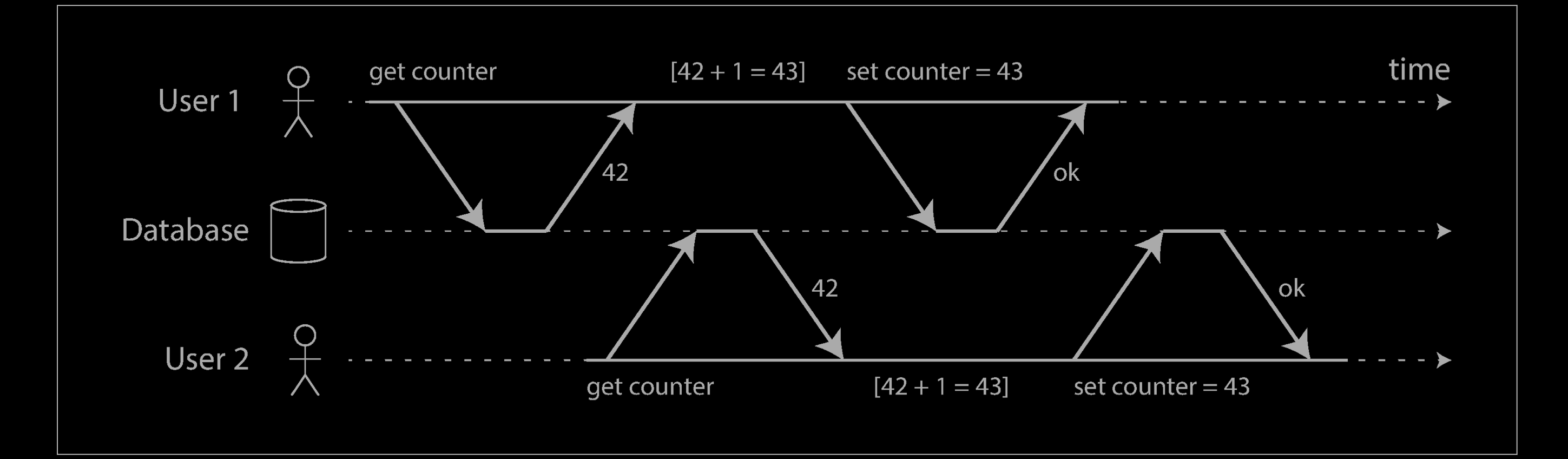

The counter should have increased from 42 to 44, because two increments happened, but it actually only went to 43 because of the race condition.

The lost update problem can occur if an application reads some value from the database, modifies it, and writes back the modified value (a read-modify-write cycle). If two transactions do this concurrently, one of the modifications can be lost, because the second write does not include the first modification. (We sometimes say that the later write clobbers the earlier write.) This pattern occurs in various scenarios:

- Incrementing a counter or updating an account balance (requires reading the current value, calculating the new value, and writing back the updated value)

- Making a local change to a complex value, e.g., adding an element to a list within a JSON document (requires parsing the document, making the change, and writing back the modified document)

- Two users editing a wiki page at the same time, where each user saves their changes by sending the entire page contents to the server, overwriting whatever is currently in the database
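The read-modify-write race described above can be reproduced deterministically. The following is a minimal sketch, assuming Python's built-in `sqlite3` module and a hypothetical `counters` table. The two connections stand in for two concurrent clients, and the interleaving is forced by hand rather than left to chance.

```python
import os
import sqlite3
import tempfile


def open_client(path):
    # isolation_level=None puts the connection in autocommit mode,
    # so every statement runs as its own transaction.
    return sqlite3.connect(path, isolation_level=None)


def read_value(conn):
    return conn.execute(
        "SELECT value FROM counters WHERE key = 'foo'"
    ).fetchall()[0][0]


def lost_update_demo():
    # Hypothetical schema in a throwaway file, so that two separate
    # connections can work on the same data.
    path = os.path.join(tempfile.mkdtemp(), "counters.db")
    client_a = open_client(path)
    client_a.execute("CREATE TABLE counters (key TEXT PRIMARY KEY, value INTEGER)")
    client_a.execute("INSERT INTO counters VALUES ('foo', 42)")
    client_b = open_client(path)

    # Both clients read the same starting value...
    seen_by_a = read_value(client_a)
    seen_by_b = read_value(client_b)

    # ...compute the increment in application code, and write it back.
    client_a.execute(
        "UPDATE counters SET value = ? WHERE key = 'foo'", (seen_by_a + 1,)
    )
    client_b.execute(
        "UPDATE counters SET value = ? WHERE key = 'foo'", (seen_by_b + 1,)
    )

    final = read_value(client_a)
    client_a.close()
    client_b.close()
    return final


if __name__ == "__main__":
    print(lost_update_demo())  # 43, not 44: one increment was lost
```

Client B's write is based on the value it read before client A's write, so it clobbers A's increment.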

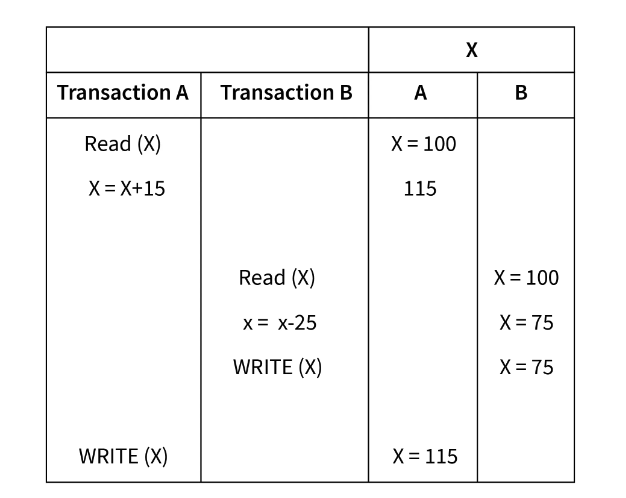

Example 2

How to prevent Lost Update

Atomic write operations

Many databases provide atomic update operations, which remove the need to implement read-modify-write cycles in application code. They are usually the best solution if your code can be expressed in terms of those operations. For example, the following instruction is concurrency-safe in most relational databases:

```sql
UPDATE counters SET value = value + 1 WHERE key = 'foo';
```

Similarly, document databases such as MongoDB provide atomic operations for making local modifications to a part of a JSON document, and Redis provides atomic operations for modifying data structures such as priority queues. Not all writes can easily be expressed in terms of atomic operations (for example, updates to a wiki page involve arbitrary text editing), but in situations where atomic operations can be used, they are usually the best choice.
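As a rough illustration of the atomic increment, here is a small sketch that assumes Python's built-in `sqlite3` module and a hypothetical `counters` table starting at 42. Each client sends the increment to the database instead of computing the new value in application code.

```python
import os
import sqlite3
import tempfile


def atomic_increment_demo():
    # Hypothetical schema in a throwaway file shared by two connections.
    path = os.path.join(tempfile.mkdtemp(), "counters.db")
    client_a = sqlite3.connect(path, isolation_level=None)  # autocommit
    client_a.execute("CREATE TABLE counters (key TEXT PRIMARY KEY, value INTEGER)")
    client_a.execute("INSERT INTO counters VALUES ('foo', 42)")
    client_b = sqlite3.connect(path, isolation_level=None)

    # Neither client reads the value first: the database performs the
    # read-modify-write as a single statement.
    for client in (client_a, client_b):
        client.execute("UPDATE counters SET value = value + 1 WHERE key = 'foo'")

    final = client_a.execute(
        "SELECT value FROM counters WHERE key = 'foo'"
    ).fetchall()[0][0]
    client_a.close()
    client_b.close()
    return final


if __name__ == "__main__":
    print(atomic_increment_demo())  # 44: both increments are applied
```

Because no stale value ever leaves the database, there is nothing for the second write to clobber.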

Atomic operations are usually implemented by taking an exclusive lock on the object when it is read so that no other transaction can read it until the update has been applied. This technique is sometimes known as cursor stability. Another option is to simply force all atomic operations to be executed on a single thread.

Unfortunately, object-relational mapping frameworks make it easy to accidentally write code that performs unsafe read-modify-write cycles instead of using atomic operations provided by the database. That’s not a problem if you know what you are doing, but it is potentially a source of subtle bugs that are difficult to find by testing.

Explicit locking

Another option for preventing lost updates, if the database’s built-in atomic operations don’t provide the necessary functionality, is for the application to explicitly lock objects that are going to be updated. Then the application can perform a read-modify-write cycle, and if any other transaction tries to concurrently read the same object, it is forced to wait until the first read-modify-write cycle has completed.

For example, consider a multiplayer game in which several players can move the same figure concurrently. In this case, an atomic operation may not be sufficient, because the application also needs to ensure that a player’s move abides by the rules of the game, which involves some logic that you cannot sensibly implement as a database query. Instead, you may use a lock to prevent two players from concurrently moving the same piece:

```sql
BEGIN TRANSACTION;

SELECT * FROM figures
  WHERE name = 'robot' AND game_id = 222
  FOR UPDATE;

-- Check whether move is valid, then update the position
-- of the piece that was returned by the previous SELECT.
UPDATE figures SET position = 'c4' WHERE id = 1234;

COMMIT;
```

This works, but to get it right, you need to carefully think about your application logic. It’s easy to forget to add a necessary lock somewhere in the code, and thus introduce a race condition.
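The same lock-then-check-then-write shape can be tried out locally. SQLite has no `FOR UPDATE` clause, so the sketch below swaps in `BEGIN IMMEDIATE`, which takes SQLite's write lock on the whole database rather than on one row. This is a coarser stand-in, not the same mechanism. The schema, the starting position `c3`, and the validity rule are assumptions made for illustration. The connections use `timeout=0` so that the second player fails fast instead of waiting, which keeps the single-threaded demo deterministic.

```python
import os
import sqlite3
import tempfile

FIND_ROBOT = (
    "SELECT id, position FROM figures "
    "WHERE name = 'robot' AND game_id = 222"
)


def open_player(path):
    # timeout=0: raise immediately when the lock is held elsewhere.
    # isolation_level=None: BEGIN/COMMIT are issued explicitly below.
    return sqlite3.connect(path, timeout=0, isolation_level=None)


def locking_demo():
    path = os.path.join(tempfile.mkdtemp(), "game.db")
    alice = open_player(path)
    alice.execute(
        "CREATE TABLE figures "
        "(id INTEGER PRIMARY KEY, name TEXT, game_id INTEGER, position TEXT)"
    )
    alice.execute("INSERT INTO figures VALUES (1234, 'robot', 222, 'c3')")
    bob = open_player(path)

    # Alice takes the write lock before reading, then reads the piece.
    alice.execute("BEGIN IMMEDIATE")
    figure_id, position = alice.execute(FIND_ROBOT).fetchall()[0]

    # Bob tries to start his own read-modify-write cycle and is refused.
    try:
        bob.execute("BEGIN IMMEDIATE")
        bob_was_blocked = False
    except sqlite3.OperationalError:
        bob_was_blocked = True

    # Alice validates the move in application code (hypothetical rule:
    # the piece must still be on c3), then writes and commits.
    if position == "c3":
        alice.execute(
            "UPDATE figures SET position = 'c4' WHERE id = ?", (figure_id,)
        )
    alice.execute("COMMIT")

    # After Alice commits, Bob can take the lock and sees her move.
    bob.execute("BEGIN IMMEDIATE")
    _, position_seen_by_bob = bob.execute(FIND_ROBOT).fetchall()[0]
    bob.execute("ROLLBACK")

    alice.close()
    bob.close()
    return bob_was_blocked, position_seen_by_bob


if __name__ == "__main__":
    print(locking_demo())  # (True, 'c4')
```

In a database with row-level locks, Bob would typically wait on the one locked row instead of being refused outright.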

Dirty Read

A dirty read happens when a transaction (call it Transaction A) is allowed to read the uncommitted changes of another concurrent transaction (call it Transaction B).

This is known as a dirty read because Transaction B may still roll back its change, in which case Transaction A has read data that never officially existed.

Making a business decision based on a value that has not been committed is risky because uncommitted changes might get rolled back.

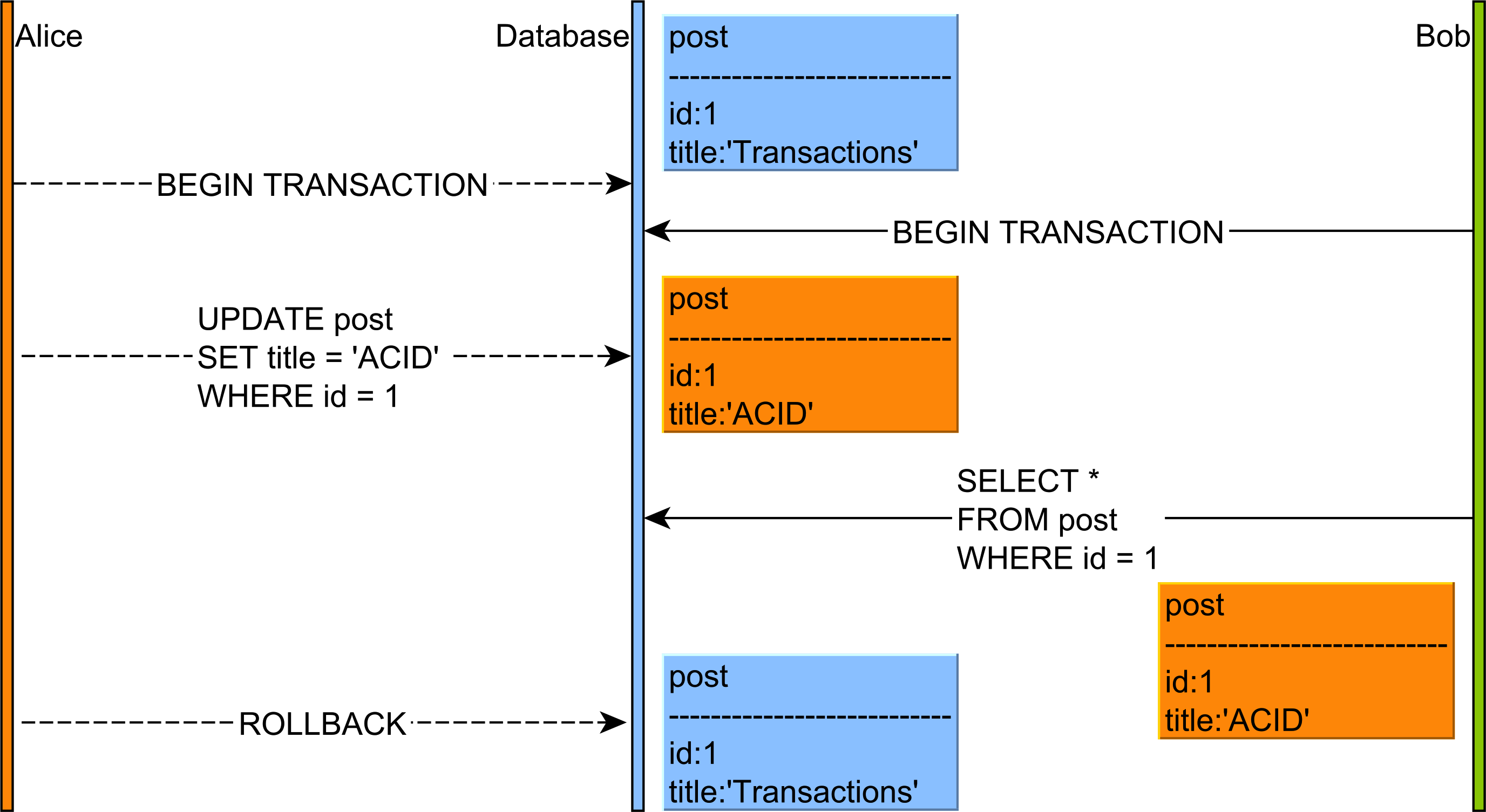

In the diagram above, the flow of statements goes like this:

- Alice and Bob start two database transactions.

- Alice modifies the title of a given `post` record.

- Bob reads the uncommitted `post` record.

- If Alice commits her transaction, everything is fine. But if Alice rolls back, then Bob will see a record version which no longer exists in the database transaction log.

This anomaly is only permitted by the Read Uncommitted isolation level, and, because of the impact on data integrity, most database systems offer a higher default isolation level.
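To see what a stricter level does here, the sketch below replays the Alice and Bob flow on SQLite through Python's built-in `sqlite3` module. With separate connections in its default mode, SQLite does not expose uncommitted changes to other connections, so Bob never observes Alice's pending title. The `post` schema and the titles are assumptions made for illustration.

```python
import os
import sqlite3
import tempfile


def read_title(conn):
    return conn.execute("SELECT title FROM post WHERE id = 1").fetchall()[0][0]


def dirty_read_demo():
    path = os.path.join(tempfile.mkdtemp(), "blog.db")
    # isolation_level=None: transactions are controlled explicitly.
    alice = sqlite3.connect(path, isolation_level=None)
    alice.execute("CREATE TABLE post (id INTEGER PRIMARY KEY, title TEXT)")
    alice.execute("INSERT INTO post VALUES (1, 'Transactions')")
    bob = sqlite3.connect(path, isolation_level=None)

    # Alice changes the title but does not commit yet.
    alice.execute("BEGIN")
    alice.execute("UPDATE post SET title = 'ACID' WHERE id = 1")
    alice_sees = read_title(alice)  # her own uncommitted change

    # Bob reads while Alice's change is still pending.
    bob_sees = read_title(bob)

    # Alice rolls back; the new title never becomes visible to anyone else.
    alice.execute("ROLLBACK")
    bob_sees_after_rollback = read_title(bob)

    alice.close()
    bob.close()
    return alice_sees, bob_sees, bob_sees_after_rollback


if __name__ == "__main__":
    print(dirty_read_demo())  # ('ACID', 'Transactions', 'Transactions')
```

Had Bob been allowed a dirty read, his first read would have returned 'ACID', a value that disappeared with the rollback.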

Dirty Write

A dirty write occurs when a later write overwrites a value written by an earlier transaction that has not yet committed.

Transactions running at the read committed isolation level will prevent dirty writes, usually by delaying the second write until the first write’s transaction has committed or aborted.

By preventing dirty writes, this isolation level avoids some kinds of concurrency problems:

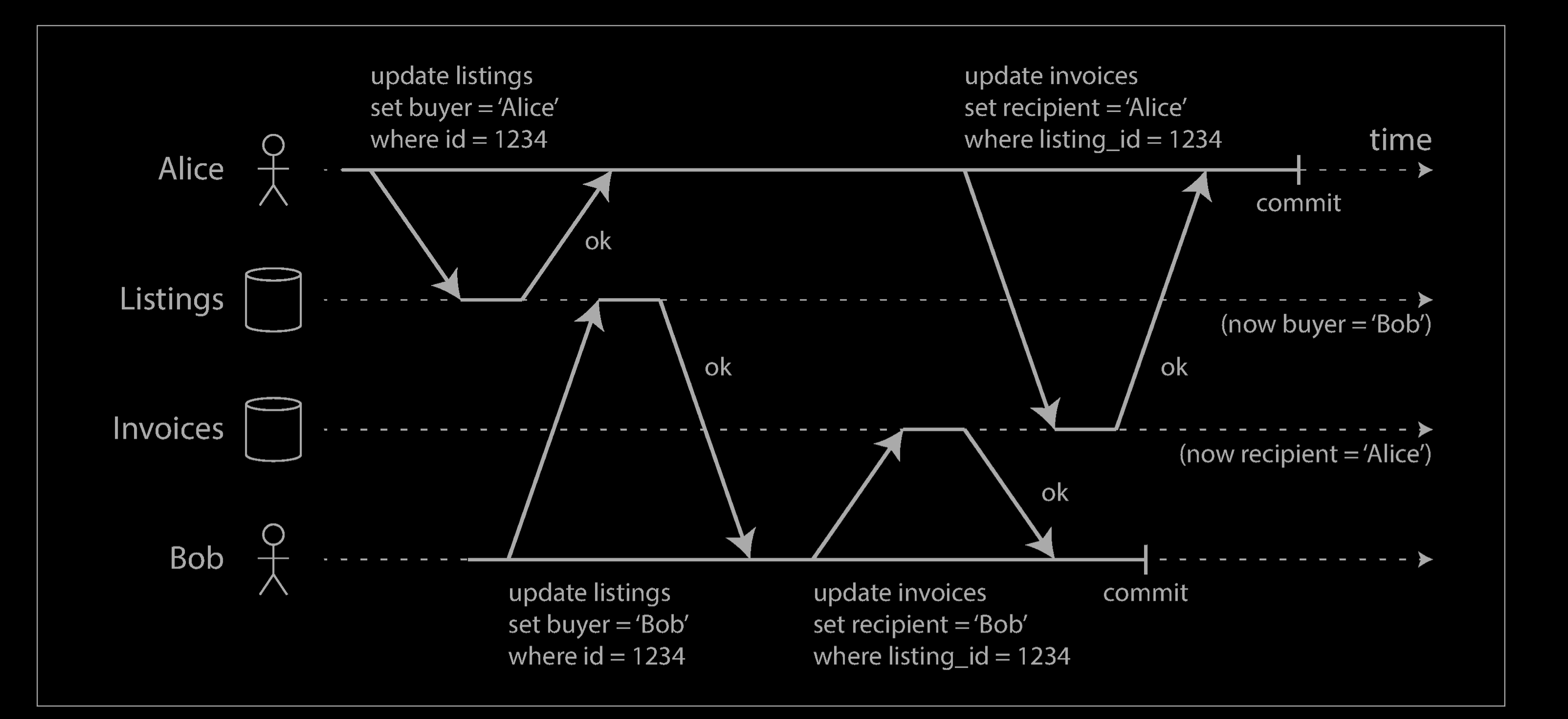

- If transactions update multiple objects, dirty writes can lead to a bad outcome. For example, consider a used car sales website on which two people, Alice and Bob, are simultaneously trying to buy the same car. Buying a car requires two database writes: the listing on the website needs to be updated to reflect the buyer, and the sales invoice needs to be sent to the buyer. With dirty writes, the sale could be awarded to Bob (because he performs the winning update to the listings table) while the invoice is sent to Alice (because she performs the winning update to the invoices table). Read committed prevents such mishaps.

Non-repeatable Read / Read Skew

A non-repeatable read happens when the same query, run twice within the same transaction, yields different results. This occurs when one transaction retrieves the same data repeatedly while a different transaction alters the underlying data in between, so the first transaction reads different (non-repeatable) results.

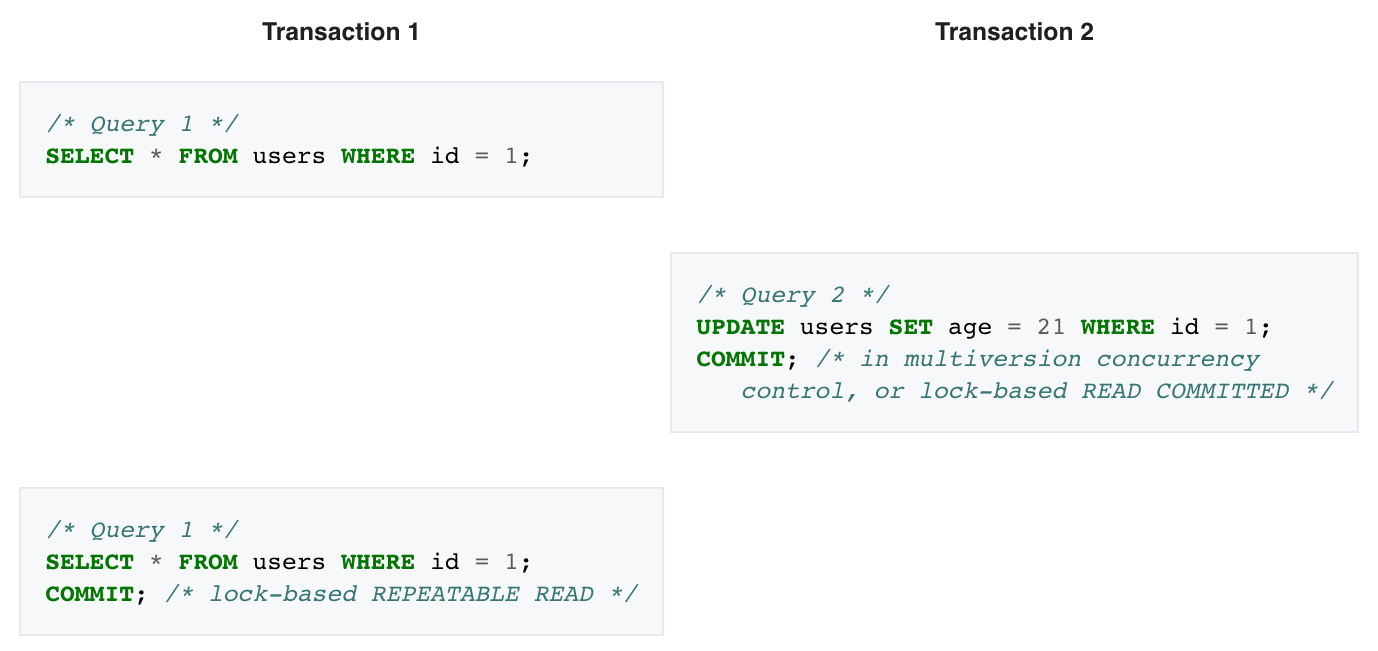

Example 1

In this example, Transaction 2 commits successfully, which means that its changes to the row with id 1 should become visible. However, Transaction 1 has already seen a different value for age in that row.

The non-repeatable read phenomenon may occur in a lock-based concurrency control method when read locks are not acquired when performing a SELECT, or when the acquired locks on affected rows are released as soon as the SELECT operation is performed.

Under the multiversion concurrency control method, non-repeatable reads may occur when the requirement that a transaction affected by a commit conflict must roll back is relaxed.

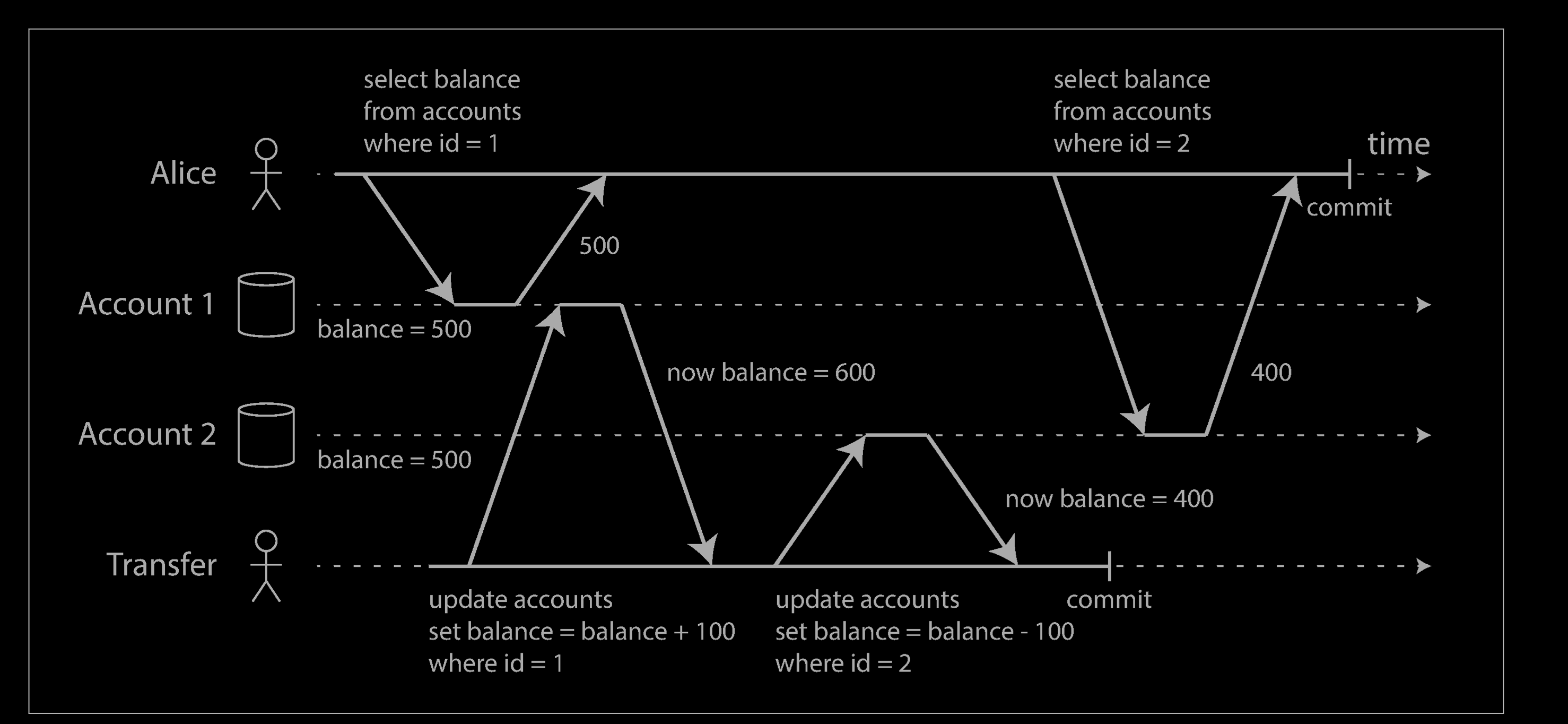

Example 2

Say Alice has $1,000 of savings at a bank, split across two accounts with $500 each. Now a transaction transfers $100 from one of her accounts to the other. If she is unlucky enough to look at her list of account balances in the same moment as that transaction is being processed, she may see one account balance at a time before the incoming payment has arrived (with a balance of $500), and the other account after the outgoing transfer has been made (the new balance being $400). To Alice it now appears as though she only has a total of $900 in her accounts—it seems that $100 has vanished into thin air.

This anomaly is called a nonrepeatable read or read skew: if Alice were to read the balance of account 1 again at the end of the transaction, she would see a different value ($600) than she saw in her previous query. Read skew is considered acceptable under read committed isolation: the account balances that Alice saw were indeed committed at the time when she read them.
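Alice's two balance lookups can be replayed with a sketch that assumes Python's built-in `sqlite3` module, a hypothetical `accounts` table, and SQLite's WAL journal mode (which requires a file-backed database). With `use_snapshot=False`, each lookup is its own transaction, roughly like read committed. With `use_snapshot=True`, both lookups run inside one transaction, which in WAL mode reads from a single consistent snapshot.

```python
import os
import sqlite3
import tempfile


def balance(conn, account_id):
    return conn.execute(
        "SELECT balance FROM accounts WHERE id = ?", (account_id,)
    ).fetchall()[0][0]


def observed_total(use_snapshot):
    path = os.path.join(tempfile.mkdtemp(), "bank.db")
    bank = sqlite3.connect(path, isolation_level=None)
    # WAL mode lets a reader keep its snapshot while a writer commits.
    bank.execute("PRAGMA journal_mode=WAL").fetchall()
    bank.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    bank.execute("INSERT INTO accounts VALUES (1, 500), (2, 500)")
    alice = sqlite3.connect(path, isolation_level=None)

    if use_snapshot:
        alice.execute("BEGIN")  # snapshot is fixed at the first read
    first = balance(alice, 1)  # before the incoming payment arrives

    # The transfer of $100 from account 2 to account 1 commits in between.
    bank.execute("BEGIN IMMEDIATE")
    bank.execute("UPDATE accounts SET balance = balance + 100 WHERE id = 1")
    bank.execute("UPDATE accounts SET balance = balance - 100 WHERE id = 2")
    bank.execute("COMMIT")

    second = balance(alice, 2)
    if use_snapshot:
        alice.execute("COMMIT")

    alice.close()
    bank.close()
    return first + second


if __name__ == "__main__":
    print(observed_total(use_snapshot=False))  # 900: read skew
    print(observed_total(use_snapshot=True))   # 1000: consistent snapshot
```

In both runs the database itself always holds $1,000; only the view Alice assembles from her two reads differs.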

Phantom Read

A transaction reads objects that match some search condition. Another client makes a write that affects the results of that search. Snapshot isolation prevents straightforward phantom reads, but phantoms in the context of write skew require special treatment, such as index-range locks.

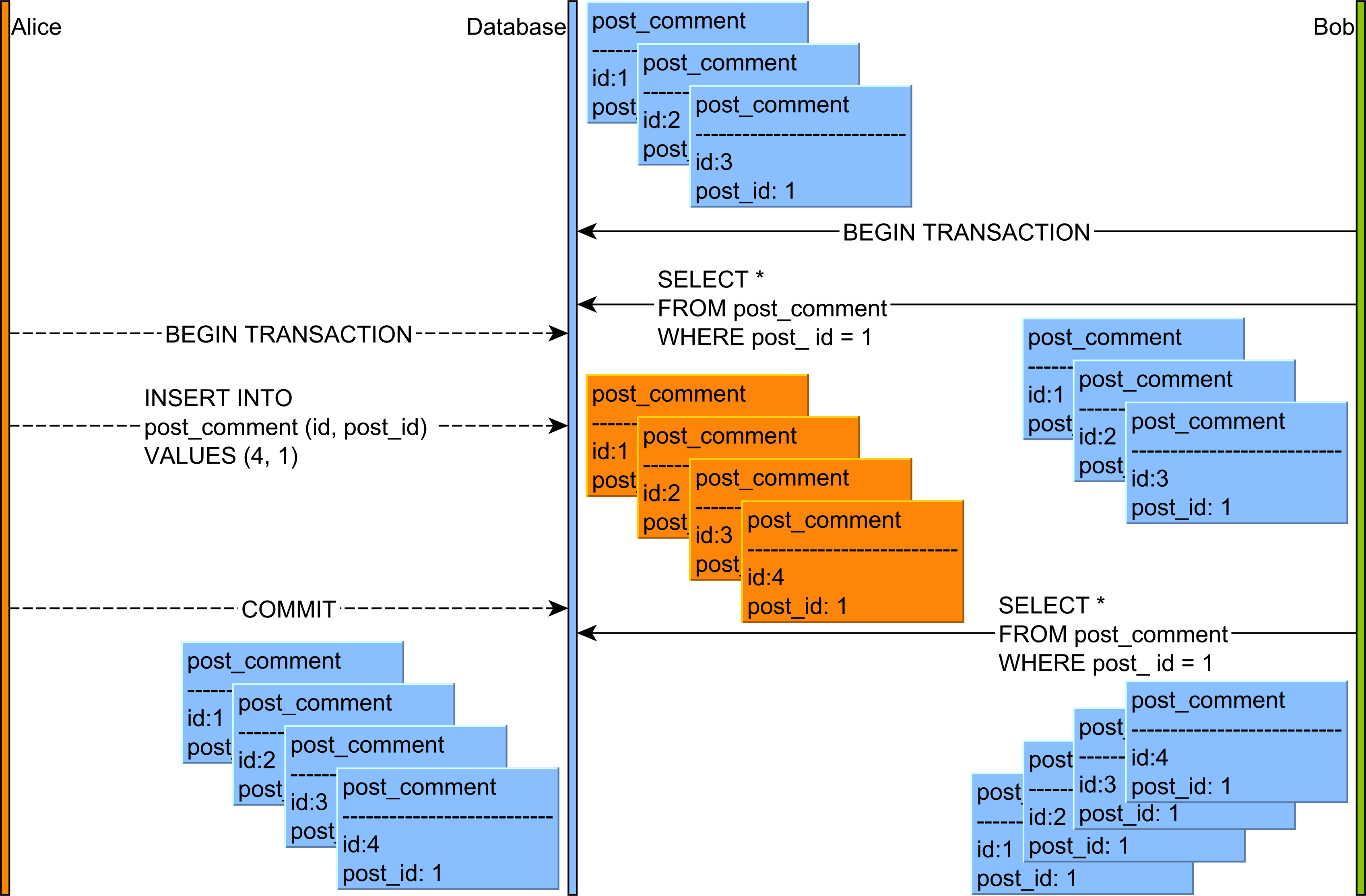

Example 1

The phantom read anomaly is a special case of non-repeatable reads. It occurs when Transaction 1 repeats a ranged SELECT ... WHERE query and, between both operations, Transaction 2 creates new rows (i.e., INSERT) or deletes existing rows (i.e., DELETE) in the target table which fulfill that WHERE clause.

In the diagram above, the flow of statements goes like this:

- Alice and Bob start two database transactions.

- Bob reads all the `post_comment` records associated with the `post` row with the identifier value of 1.

- Alice adds a new `post_comment` record which is associated with the `post` row having the identifier value of 1.

- Alice commits her database transaction.

- If Bob re-reads the `post_comment` records having the `post_id` column value equal to 1, he will observe a different version of this result set.
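The flow above can be sketched with Python's built-in `sqlite3` module. The `post_comment` schema and its contents are assumptions made for illustration, and the database uses SQLite's WAL journal mode. With `use_snapshot=False`, each of Bob's queries is its own transaction, so the second one picks up Alice's committed row. With `use_snapshot=True`, Bob wraps both queries in one transaction and reads from a single snapshot.

```python
import os
import sqlite3
import tempfile

COUNT_COMMENTS = "SELECT COUNT(*) FROM post_comment WHERE post_id = 1"


def comment_counts(use_snapshot):
    path = os.path.join(tempfile.mkdtemp(), "blog.db")
    alice = sqlite3.connect(path, isolation_level=None)  # autocommit
    alice.execute("PRAGMA journal_mode=WAL").fetchall()
    alice.execute(
        "CREATE TABLE post_comment "
        "(id INTEGER PRIMARY KEY, post_id INTEGER, review TEXT)"
    )
    alice.execute(
        "INSERT INTO post_comment (post_id, review) "
        "VALUES (1, 'first'), (1, 'second')"
    )
    bob = sqlite3.connect(path, isolation_level=None)

    if use_snapshot:
        bob.execute("BEGIN")  # snapshot is fixed at the first read
    before = bob.execute(COUNT_COMMENTS).fetchall()[0][0]

    # Alice adds a matching row; autocommit mode commits it immediately.
    alice.execute("INSERT INTO post_comment (post_id, review) VALUES (1, 'third')")

    after = bob.execute(COUNT_COMMENTS).fetchall()[0][0]
    if use_snapshot:
        bob.execute("COMMIT")

    alice.close()
    bob.close()
    return before, after


if __name__ == "__main__":
    print(comment_counts(use_snapshot=False))  # (2, 3): a phantom row appears
    print(comment_counts(use_snapshot=True))   # (2, 2): same result set twice
```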

How to avoid Phantom Reads

If the highest level of isolation (i.e., SERIALIZABLE isolation level) were maintained, the same set of rows should be returned both times, and indeed that is what is mandated to occur in a database operating at the SERIALIZABLE isolation level. However, at the lesser isolation levels, a different set of rows may be returned the second time.

In SERIALIZABLE mode under a lock-based implementation, Bob's first query would lock all records with post_id = 1, so Alice's insert would block until Bob's transaction committed. In REPEATABLE READ mode, the range would not be locked, allowing the record to be inserted and Bob's second execution of the query to include the new row in its results.

The 2PL-based Serializable isolation prevents Phantom Reads through the use of predicate locking, while MVCC (Multi-Version Concurrency Control) database engines address the Phantom Read anomaly by returning consistent snapshots.

However, a concurrent transaction can still modify the range of records that was read previously. Even if the MVCC database engine introspects the transaction schedule, the outcome is not always the same as a 2PL-based implementation. One such example is when the second transaction issues an insert without reading the same range of records as the first transaction. In this particular use case, some MVCC database engines will not end up rolling back the first transaction.

Write Skew

A transaction reads something, makes a decision based on the value it saw, and writes the decision to the database. However, by the time the write is made, the premise of the decision is no longer true. Only serializable isolation prevents this anomaly.

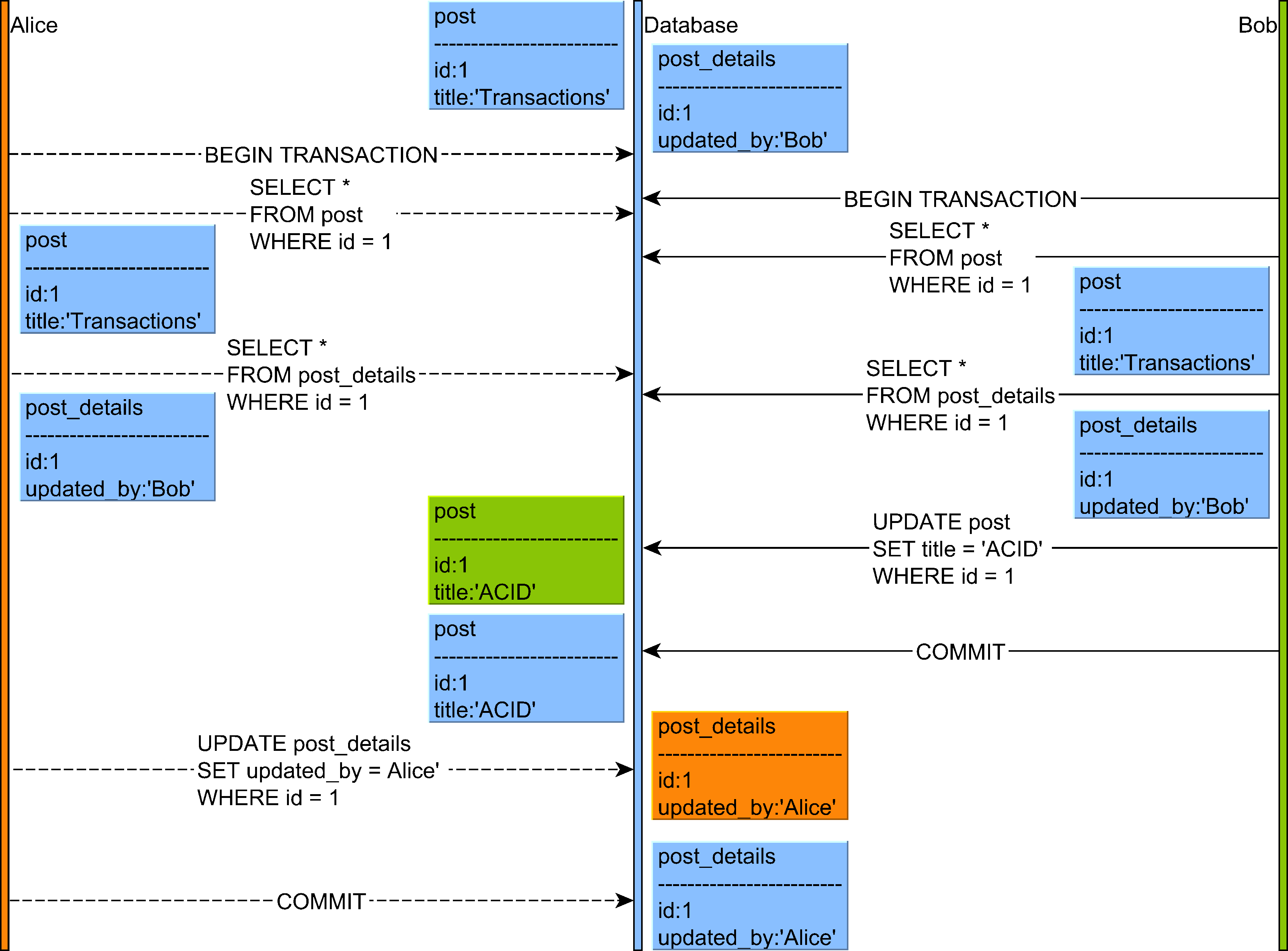

Example 1

- Both Alice and Bob select the Post and the PostDetails entities.

- Bob modifies the Post title, but, since the PostDetails is already marked as updated by Bob, the dirty checking mechanism will skip updating the PostDetails entity, therefore preventing a redundant UPDATE statement.

- Alice wants to update the Post entity, but the entity already has the same value as the one she wants to apply so only the PostDetails record will mark that the latest change is the one proposed by Alice.

If write skew is permitted, Alice's and Bob's disjoint writes will both proceed, breaking the guarantee that Post and PostDetails should always be in sync.

Example 2

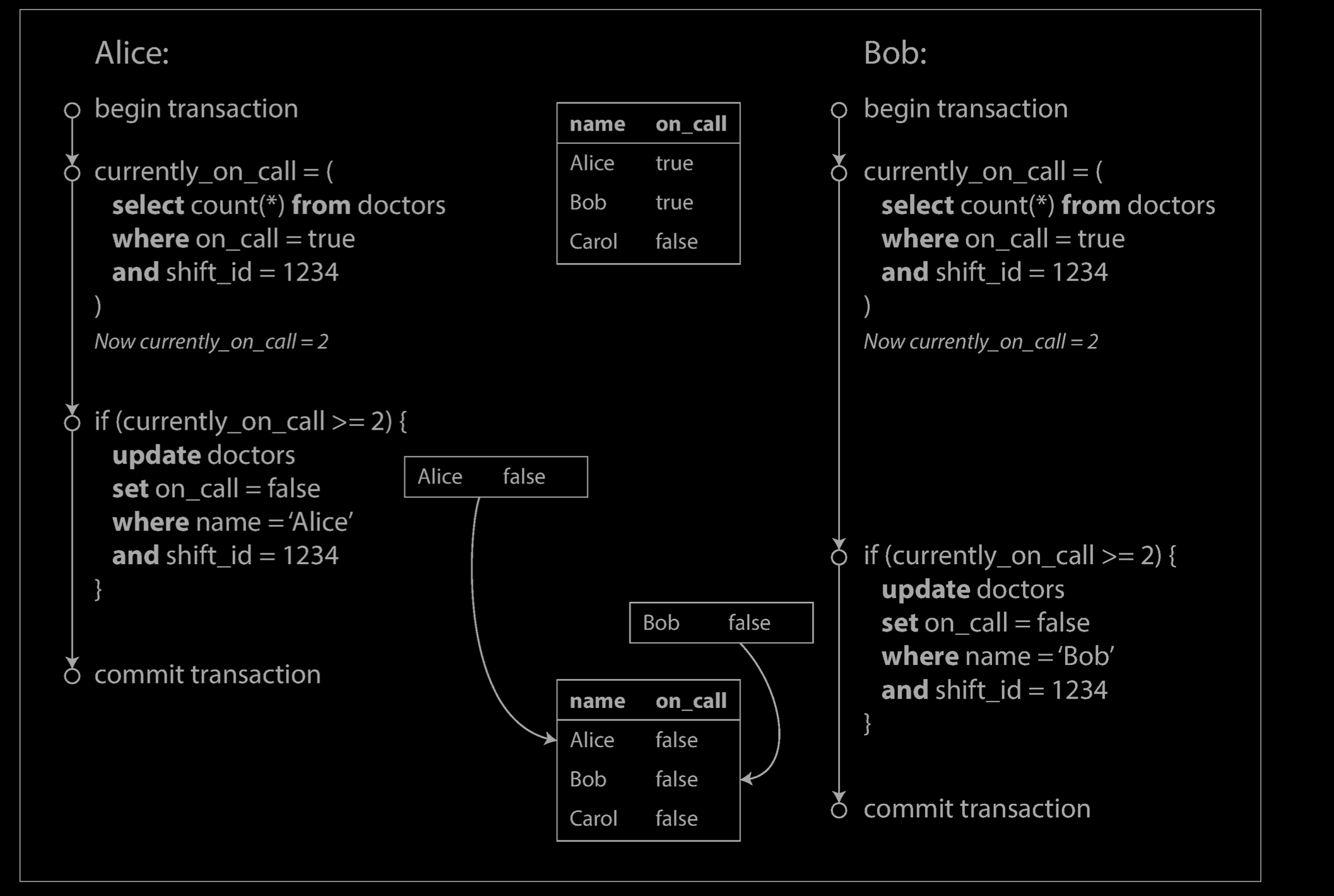

To begin, imagine this example: you are writing an application for doctors to manage their on-call shifts at a hospital. The hospital usually tries to have several doctors on call at any one time, but it absolutely must have at least one doctor on call. Doctors can give up their shifts (e.g., if they are sick themselves), provided that at least one colleague remains on call in that shift.

Now imagine that Alice and Bob are the two on-call doctors for a particular shift. Both are feeling unwell, so they both decide to request leave. Unfortunately, they happen to click the button to go off call at approximately the same time. What happens next is illustrated in the figure for this example.

In each transaction, your application first checks that two or more doctors are currently on call; if yes, it assumes it’s safe for one doctor to go off call. Since the database is using snapshot isolation, both checks return 2, so both transactions proceed to the next stage. Alice updates her own record to take herself off call, and Bob updates his own record likewise. Both transactions commit, and now no doctor is on call. Your requirement of having at least one doctor on call has been violated.
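The on-call scenario can be traced with a toy model in plain Python. This is not a real database: snapshots are dictionary copies, and the only rule modeled is that snapshot isolation aborts a transaction when its write set overlaps with a concurrent one. All names here are hypothetical.

```python
def go_off_call(snapshot, doctor):
    """Return the writes a transaction would make, judged from its snapshot."""
    on_call_now = sum(1 for on_call in snapshot.values() if on_call)
    if on_call_now >= 2:
        return {doctor: False}  # looks safe: a colleague is still on call
    return {}  # refuse: this doctor is the last one on call


def doctors_on_call(table):
    return sum(1 for on_call in table.values() if on_call)


def concurrent_requests():
    committed = {"alice": True, "bob": True}
    # Both transactions start before either commits: identical snapshots.
    alice_writes = go_off_call(dict(committed), "alice")
    bob_writes = go_off_call(dict(committed), "bob")
    # The write sets touch different rows, so this toy model of snapshot
    # isolation sees no write-write conflict and lets both commit.
    assert not (alice_writes.keys() & bob_writes.keys())
    committed.update(alice_writes)
    committed.update(bob_writes)
    return doctors_on_call(committed)


def serial_requests():
    committed = {"alice": True, "bob": True}
    # Alice runs and commits first; Bob's snapshot then includes her change.
    committed.update(go_off_call(dict(committed), "alice"))
    committed.update(go_off_call(dict(committed), "bob"))
    return doctors_on_call(committed)


if __name__ == "__main__":
    print(concurrent_requests())  # 0: the invariant is violated
    print(serial_requests())      # 1: Bob's request is refused
```

Each transaction is correct on its own; the violation only appears because both decisions rest on a premise that the other transaction invalidates.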

Reference

- MySQL 8.0 Reference Manual, Glossary (dirty read) - https://dev.mysql.com/doc/refman/8.0/en/glossary.html#glos_dirty_read

- Martin Kleppmann, Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems

- A beginner’s guide to database locking and the lost update phenomena - https://vladmihalcea.com/a-beginners-guide-to-database-locking-and-the-lost-update-phenomena/

- The Lost Update Problem in Concurrent Transactions - https://codingsight.com/the-lost-update-problem-in-concurrent-transactions/

- A beginner’s guide to Dirty Read anomaly - https://vladmihalcea.com/dirty-read/

- A beginner’s guide to Phantom Read anomaly - https://vladmihalcea.com/phantom-read/

- A beginner’s guide to read and write skew phenomena - https://vladmihalcea.com/a-beginners-guide-to-read-and-write-skew-phenomena/

- Wikipedia Isolation - https://en.wikipedia.org/wiki/Isolation_(database_systems)